I would like to detect whether a picture suffers from vignetting, but I cannot find a way to measure it. Searching for keywords like "vignetting metrics", "vignetting detection" or "vignetting classification" only leads me to topics like "create vignetting filters" or "vignetting correction". Is there a metric that could do this, e.g. a score from 0 to 1 where the lower the score, the less likely the image suffers from vignetting? One naive solution I came up with is to measure the luminance channel of the image:

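```cpp
#include <opencv2/core.hpp>
#include <opencv2/imgcodecs.hpp>  // imread
#include <opencv2/imgproc.hpp>    // cvtColor
#include <iostream>
#include <vector>

using namespace cv;
using namespace std;

int main()
{
    Mat img = imread("my_pic.jpg");
    if (img.empty()) {
        cerr << "could not read my_pic.jpg\n";
        return 1;
    }

    // convert to CIE L*a*b*; channel 0 is the lightness L*
    cvtColor(img, img, COLOR_BGR2Lab);

    vector<Mat> lab_img;
    split(img, lab_img);

    // average lightness over all pixels (sum divided by pixel count)
    auto const mean_l = mean(lab_img[0])[0];

    // use mean_l as threshold
    cout << mean_l << '\n';
}
```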

Another solution is to train a CNN classifier: I could use the vignetting filter to generate images with and without the vignetting effect. Please give me some suggestions, thanks.
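For the CNN route, labeled training data could be generated roughly like this. It is a minimal NumPy sketch that assumes a simple polynomial radial gain as the vignetting model (an assumption, not a calibrated lens model); `add_vignette`, `make_training_pair`, `strength` and `power` are hypothetical names:

```python
import numpy as np

def add_vignette(img, strength=0.6, power=2.0):
    """Darken an image towards its corners with a radial gain mask.

    Hypothetical model: gain = 1 - strength * (r / r_max) ** power, where r is
    the distance from the image center and r_max the center-to-corner distance,
    so the gain is 1 at the center and 1 - strength in the corners.
    """
    h, w = img.shape[:2]
    yy, xx = np.mgrid[0:h, 0:w]
    cy, cx = (h - 1) / 2, (w - 1) / 2
    r = np.hypot(yy - cy, xx - cx)
    r_max = max(np.hypot(cy, cx), 1e-9)  # guard against 1x1 images
    gain = 1.0 - strength * (r / r_max) ** power
    if img.ndim == 3:  # broadcast the gain over the color channels
        gain = gain[..., None]
    out = img.astype(np.float32) * gain
    return np.clip(out, 0, 255).astype(np.uint8)

def make_training_pair(img, rng):
    """Return (image, label): label 1 = vignetted copy, 0 = untouched copy."""
    if rng.random() < 0.5:
        strength = rng.uniform(0.3, 0.9)  # random vignette strength
        return add_vignette(img, strength=strength), 1
    return img.copy(), 0
```

Randomizing `strength` (and possibly `power` and the center) keeps the classifier from learning one specific filter instead of the general fall-off.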

Answer:

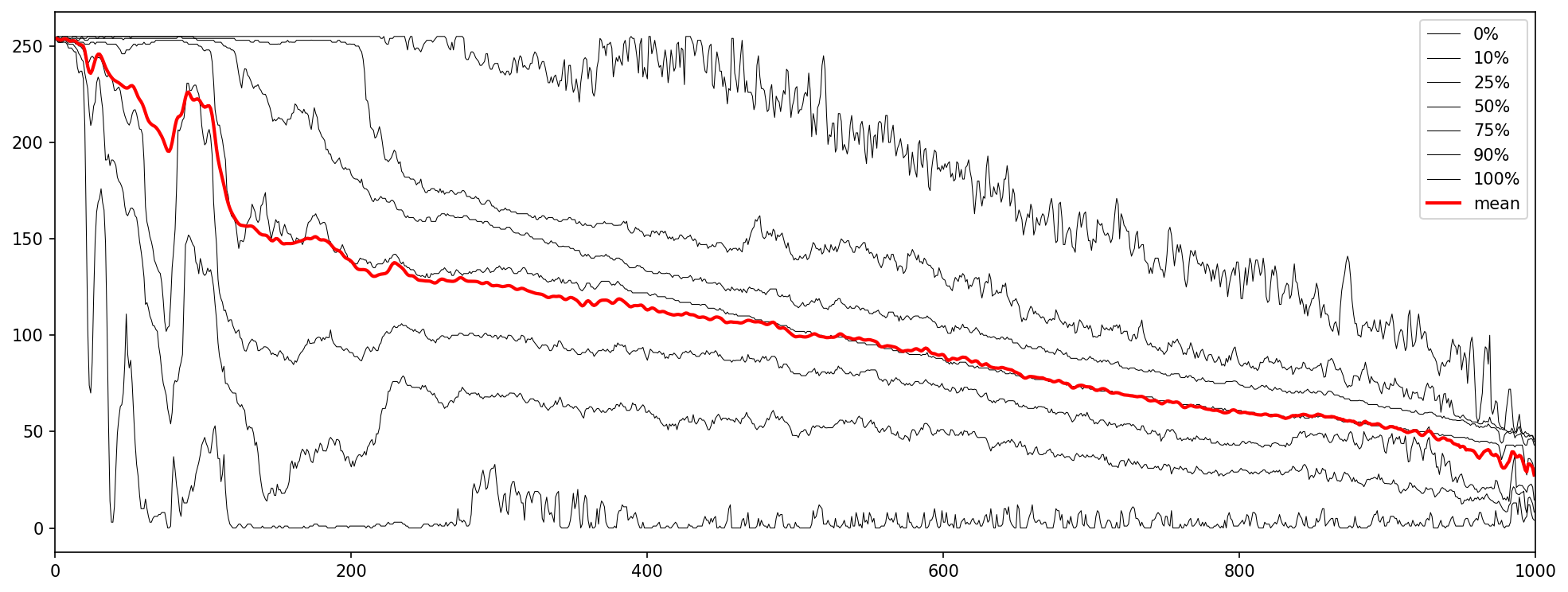

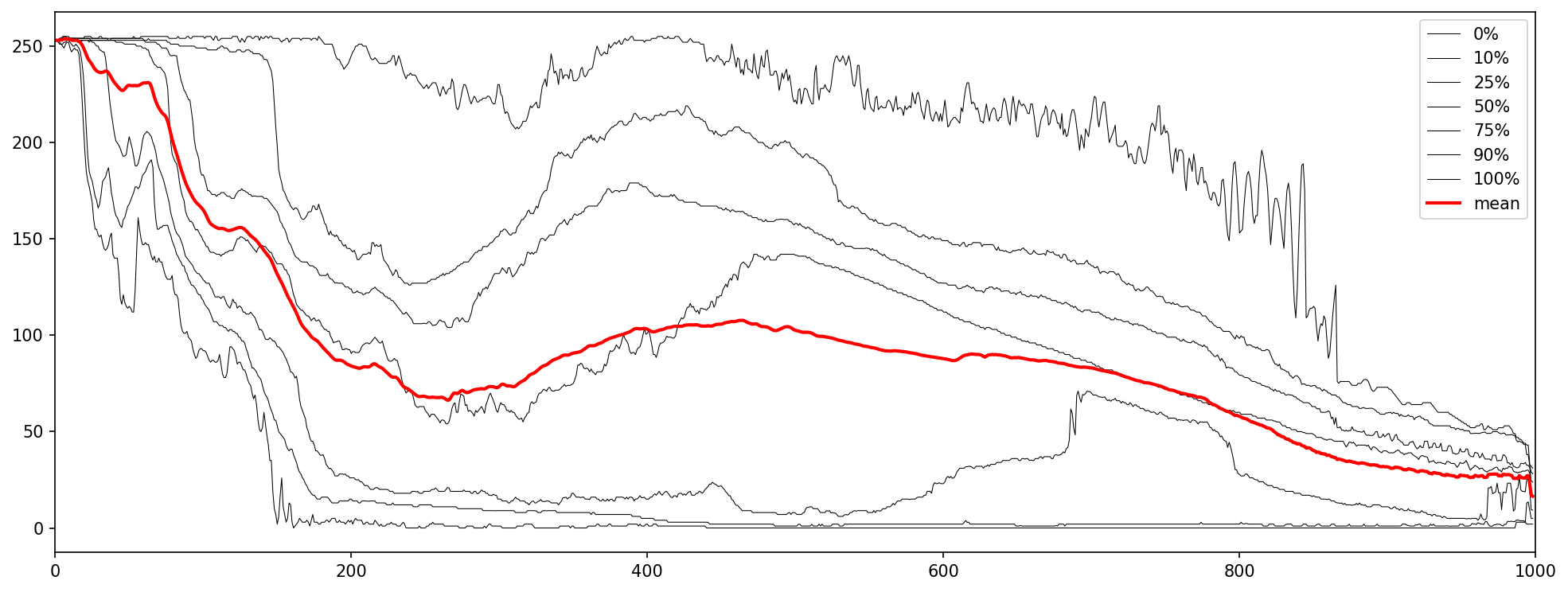

Use a polar warp and some simple statistics on the result. This gives you a plot of the intensities along the radius. In it you'll see the characteristic fall-off of a vignette towards the edges, but also the picture content. This 1D signal is easier to analyze than the entire picture.
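For comparison, a similar radial mean profile can also be computed without a polar warp, by binning every pixel directly by its distance from the center. This is a swapped-in technique, not the one this answer uses; it is a minimal NumPy sketch, and `radial_profile` and `n_bins` are hypothetical names:

```python
import numpy as np

def radial_profile(gray, n_bins=100):
    """Mean intensity per distance bin, center first, corners last.

    Distances are normalized by the center-to-corner distance, so bin 0 is
    the center and bin n_bins - 1 reaches the corners. Only real pixels are
    binned, so no validity mask is needed.
    """
    h, w = gray.shape
    yy, xx = np.mgrid[0:h, 0:w]
    cy, cx = (h - 1) / 2, (w - 1) / 2
    r = np.hypot(yy - cy, xx - cx) / max(np.hypot(cy, cx), 1e-9)  # 0..1
    bins = np.minimum((r * n_bins).astype(int), n_bins - 1)
    sums = np.bincount(bins.ravel(), weights=gray.ravel().astype(np.float64),
                       minlength=n_bins)
    counts = np.bincount(bins.ravel(), minlength=n_bins)
    with np.errstate(invalid="ignore", divide="ignore"):
        return sums / counts  # NaN for bins that received no pixels
```

It only yields the per-radius mean; per-radius percentiles are what the polar-warped columns make easy.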

This is not guaranteed to work in every case, and I'm not claiming it does. It's just one approach.

Variations that use medians, averages, etc. are conceivable, but then you also need a mask, so you know which pixels come from the image and which ones are just out-of-bounds black (to be ignored). You can extend the source image to four channels, with the fourth channel being all 255. The warp treats that like any other color channel, so you get a "valid" mask out of it that you can use.

I am confronting you with Python because it's about the idea and the APIs, and I categorically refuse to do prototyping/research in C++.

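```python
import cv2 as cv
import numpy as np

im = cv.imread("my_pic.jpg")  # BGR, uint8
(h, w) = im.shape[:2]
im = np.dstack([im, np.full((h, w), 255, dtype=np.uint8)])  # 4th channel will be "valid mask"

rmax = np.hypot(h, w) / 2  # half-diagonal, so the warp reaches into the corners
(cx, cy) = (w - 1) / 2, (h - 1) / 2

# dsize: dh rows = angles (half-degree steps), dw columns = radius samples
dh = 360 * 2
dw = 1000

# need to explicitly initialize that because the warp does NOT initialize out-of-bounds pixels
warped = np.zeros((dh, dw, 4), dtype=np.uint8)
warped = cv.warpPolar(src=im, dsize=(dw, dh), center=(cx, cy), maxRadius=int(rmax),
                      flags=cv.INTER_LANCZOS4, dst=warped)

values = np.ascontiguousarray(warped[..., 0:3])  # color channels
mask = warped[..., 3]                            # 255 where the pixel came from the image
values = cv.cvtColor(values, cv.COLOR_BGR2GRAY)
```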

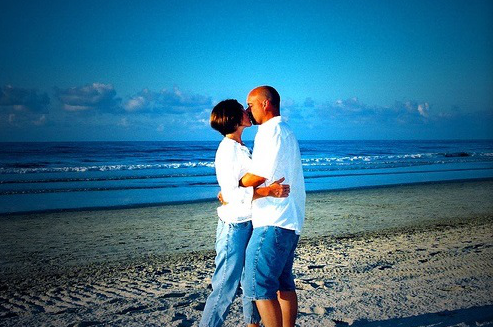

picture 1:

picture 2:

```python
import numpy as np
import matplotlib.pyplot as plt

# continues from above: values (gray, dh x dw), mask (dh x dw), dw
mvalues = np.ma.masked_array(values, mask=(mask == 0))

# np.ma has min/max/mean/median, but no quantile/percentile,
# so select the valid pixels of every column (= every radius) explicitly
cols = (col.compressed() for col in mvalues.T)
cols = [col for col in cols if len(col) > 0]

plt.figure(figsize=(16, 6), dpi=150)
plt.xlim(0, dw)

for p in [0, 10, 25, 50, 75, 90, 100]:
    plt.plot([np.percentile(col, p) for col in cols], 'k', linewidth=0.5, label=f'{p}%')

plt.plot(mvalues.mean(axis=0), 'red', linewidth=2, label='mean')
plt.legend()
plt.show()
```

[Figure: radial intensity plots (percentiles and mean) for the first and second picture]
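The plots still have to be judged by eye. To get something like the 0-to-1 score asked for in the question, one could compare the mean intensity near the center with the mean near the edge. This is a hypothetical heuristic, not an established vignetting metric; `vignetting_score`, `inner` and `outer` are made-up names, and picture content (a bright subject in the middle, dark surroundings) raises the score just like a vignette does:

```python
import numpy as np

def vignetting_score(profile, inner=0.2, outer=0.2):
    """Hypothetical 0..1 score from a radial intensity profile (center first).

    Compares the mean of the innermost `inner` fraction of the radii with the
    mean of the outermost `outer` fraction: 0 = no darkening towards the
    edge (or brighter edges), 1 = edges completely black.
    NaN entries (radii without valid pixels) are dropped first.
    """
    p = np.asarray(profile, dtype=np.float64)
    p = p[~np.isnan(p)]
    if p.size == 0:
        raise ValueError("profile has no valid values")
    n_in = max(1, int(round(inner * p.size)))
    n_out = max(1, int(round(outer * p.size)))
    center = p[:n_in].mean()
    edge = p[-n_out:].mean()
    if center <= 0:
        return 0.0
    return float(np.clip(1.0 - edge / center, 0.0, 1.0))
```

With the mean curve from the polar warp, that would be `vignetting_score(mvalues.mean(axis=0).filled(np.nan))`. The threshold that separates "vignetted" from "not vignetted" would have to be picked on sample images.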