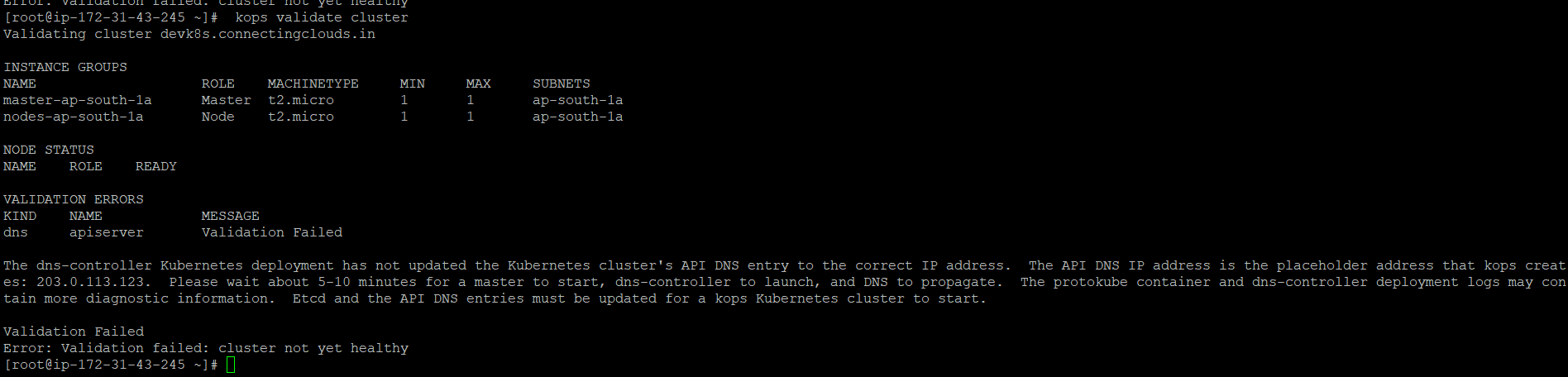

I am trying to create a k8s cluster using the kops utility, but I am getting the error below.

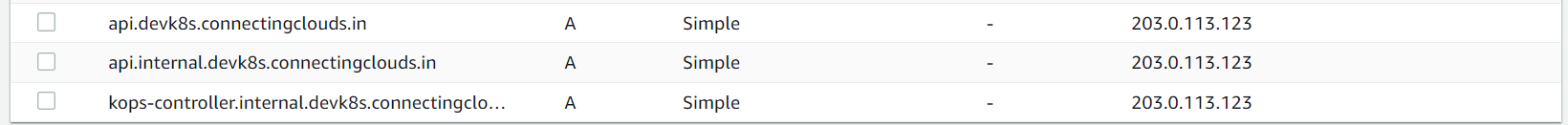

I have a public hosted zone in Route 53 for connectingclouds.in.

CodePudding user response:

Here's what works for me. Hope it helps.

Create k8s cluster using kops

production-environment/tools/kops

Pre-requisite

You should own a domain. In this example, I own domainname.com.

This should create a default hosted zone as well with Hosted-Zone-ID=XXXXXXXX

$ dig ns domainname.com | egrep "ANSWER SECTION" -A 4

;; ANSWER SECTION:

domainname.com. 172532 IN NS ns-945.awsdns-54.net.

domainname.com. 172532 IN NS ns-1991.awsdns-56.co.uk.

domainname.com. 172532 IN NS ns-157.awsdns-19.com.

domainname.com. 172532 IN NS ns-1442.awsdns-52.org.

$ dig soa domainname.com | egrep "ANSWER SECTION" -A 2

;; ANSWER SECTION:

domainname.com. 820 IN SOA ns-157.awsdns-19.com. awsdns-hostmaster.amazon.com. 1 7200 900 1209600 86400
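The NS answer above shows the domain delegated to Route 53 name servers (the `awsdns-NN` hosts). Here is a minimal sketch of the same check as a script, assuming `dig +short` output is piped in. `uses_route53_ns` is a hypothetical helper name, and the pattern only reflects the naming seen in the output above.

```shell
# Hypothetical helper: reads `dig +short ns <domain>` output on stdin and
# succeeds if at least one name server looks like a Route 53 one,
# e.g. ns-945.awsdns-54.net. or ns-1991.awsdns-56.co.uk.
uses_route53_ns() {
  grep -Eq '^ns-[0-9]+\.awsdns-[0-9]+\.[a-z.]+\.$'
}

# Usage (needs dig and network access):
#   dig +short ns domainname.com | uses_route53_ns && echo "delegated to Route 53"
```

If the check fails, the registrar is probably still pointing at other name servers, and kops will not be able to publish records that resolve publicly.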

- Install binary

$ brew update && brew install kops

$ kops version

Version 1.19.1

- Set the IAM user

AWS_ACCESS_KEY_ID=xxxxxxxxxxxxxxxxx

AWS_SECRET_ACCESS_KEY=xxxxxxxxxxxxxxx

- Configure the AWS CLI with the IAM user's keys (this prompts for the key values)

$ aws configure

- Create the bucket

$ bucket_name=k8-kops-stage-test

$ aws s3api create-bucket --bucket ${bucket_name} --region us-east-1

{

"Location": "/k8-kops-stage-test"

}

- Enable versioning

$ aws s3api put-bucket-versioning --bucket ${bucket_name} --versioning-configuration Status=Enabled
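The bucket above is created in us-east-1. As far as I know, `aws s3api create-bucket` needs an extra `--create-bucket-configuration LocationConstraint=<region>` argument for any other region. Here is a small sketch that builds the right arguments. `create_bucket_args` is a hypothetical helper, not part of the AWS CLI.

```shell
# Hypothetical helper: prints the arguments for `aws s3api create-bucket`.
# us-east-1 takes no LocationConstraint; other regions are assumed to need one.
create_bucket_args() {
  bucket=$1
  region=$2
  if [ "$region" = "us-east-1" ]; then
    printf '%s\n' "--bucket $bucket --region $region"
  else
    printf '%s\n' "--bucket $bucket --region $region --create-bucket-configuration LocationConstraint=$region"
  fi
}

# Usage (word splitting of the output is intentional here):
#   aws s3api create-bucket $(create_bucket_args "$bucket_name" eu-west-1)
```

The state store bucket does not have to live in the same region as the cluster, so the us-east-1 command above works as written.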

- Create the cluster

$ export KOPS_CLUSTER_NAME=k8.domainname.com

$ export KOPS_STATE_STORE=s3://${bucket_name}

$ kops create cluster --node-count=1 --node-size=c5.2xlarge --master-count=1 --master-size=c5.xlarge --zones=eu-west-1a --name=${KOPS_CLUSTER_NAME} --yes

.

.

I0320 14:13:03.437182 44597 create_cluster.go:713] Using SSH public key: /Users/myusername/.ssh/id_rsa.pub

.

.

kops has set your kubectl context to k8.domainname.com

Cluster is starting. It should be ready in a few minutes.

Suggestions:

* validate cluster: kops validate cluster --wait 10m

* list nodes: kubectl get nodes --show-labels

* ssh to the master: ssh -i ~/.ssh/id_rsa ubuntu@api.k8.domainname.com

* the ubuntu user is specific to Ubuntu. If not using Ubuntu please use the appropriate user based on your OS.

* read about installing addons at: https://kops.sigs.k8s.io/operations/addons.
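The create command above relies on `KOPS_CLUSTER_NAME` and `KOPS_STATE_STORE` being exported in the same shell. A guard like the following can catch a missing or malformed value before kops runs. `kops_env_ok` is a hypothetical function name, and the `s3://` check assumes an S3 state store as used in this walkthrough.

```shell
# Hypothetical guard: returns 0 only if both variables used above look usable.
kops_env_ok() {
  if [ -z "${KOPS_CLUSTER_NAME:-}" ]; then
    echo "KOPS_CLUSTER_NAME is not set" >&2
    return 1
  fi
  case "${KOPS_STATE_STORE:-}" in
    s3://?*) ;;  # scheme followed by a non-empty bucket name
    *)
      echo "KOPS_STATE_STORE must look like s3://<bucket>" >&2
      return 1
      ;;
  esac
  return 0
}

# Usage:
#   kops_env_ok && kops validate cluster --wait 10m
```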

- Validate the cluster

kops validate cluster --wait 10m

.

.

W0320 14:18:53.164348 44767 validate_cluster.go:173] (will retry): unexpected error during validation: unable to resolve Kubernetes cluster API URL dns: lookup api.k8.domainname.com: no such host

INSTANCE GROUPS

NAME ROLE MACHINETYPE MIN MAX SUBNETS

master-eu-west-1a Master c5.xlarge 1 1 eu-west-1a

nodes-eu-west-1a Node c5.2xlarge 1 1 eu-west-1a

NODE STATUS

NAME ROLE READY

ip-172-20-54-246.eu-west-1.compute.internal master True

ip-172-20-55-44.eu-west-1.compute.internal node True

Your cluster k8.domainname.com is ready

- Delete the cluster (once you no longer need it)

kops delete cluster --name ${KOPS_CLUSTER_NAME} --yes

- Export a kubeconfig with admin privileges (note that these credentials have a TTL)

$ kops export kubecfg --admin --kubeconfig ~/workspace/kubeconfig --state=s3://${bucket_name}

CodePudding user response:

The error you see there means that the control plane is unable to update your API DNS entry. This happens when a component called dns-controller doesn't run.

You are trying to use very small instances for your control plane. Instances smaller than t3.medium, which is the default, will probably not be able to run the control plane components.
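One way to tell whether dns-controller has updated the API record is to look at what the name currently resolves to. The sketch below classifies `dig +short` output. It assumes kops seeds the record with the placeholder address 203.0.113.123 until dns-controller replaces it, which you should verify against your kops version. `api_dns_state` is a hypothetical helper name.

```shell
# Assumption: placeholder address kops writes before dns-controller runs.
KOPS_PLACEHOLDER_IP="203.0.113.123"

# Hypothetical helper: reads `dig +short api.<cluster-name>` output on stdin
# and prints one of: missing, placeholder, updated.
api_dns_state() {
  ips=$(cat)
  if [ -z "$ips" ]; then
    echo "missing"        # no record at all, e.g. "no such host"
  elif printf '%s\n' "$ips" | grep -qxF "$KOPS_PLACEHOLDER_IP"; then
    echo "placeholder"    # record exists but was never updated
  else
    echo "updated"        # record points at a real address
  fi
}

# Usage (needs dig and network access):
#   dig +short api.k8.domainname.com | api_dns_state
```

A result of "missing" or "placeholder" several minutes after creation would be consistent with dns-controller not running, for example because the control plane instance is too small.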