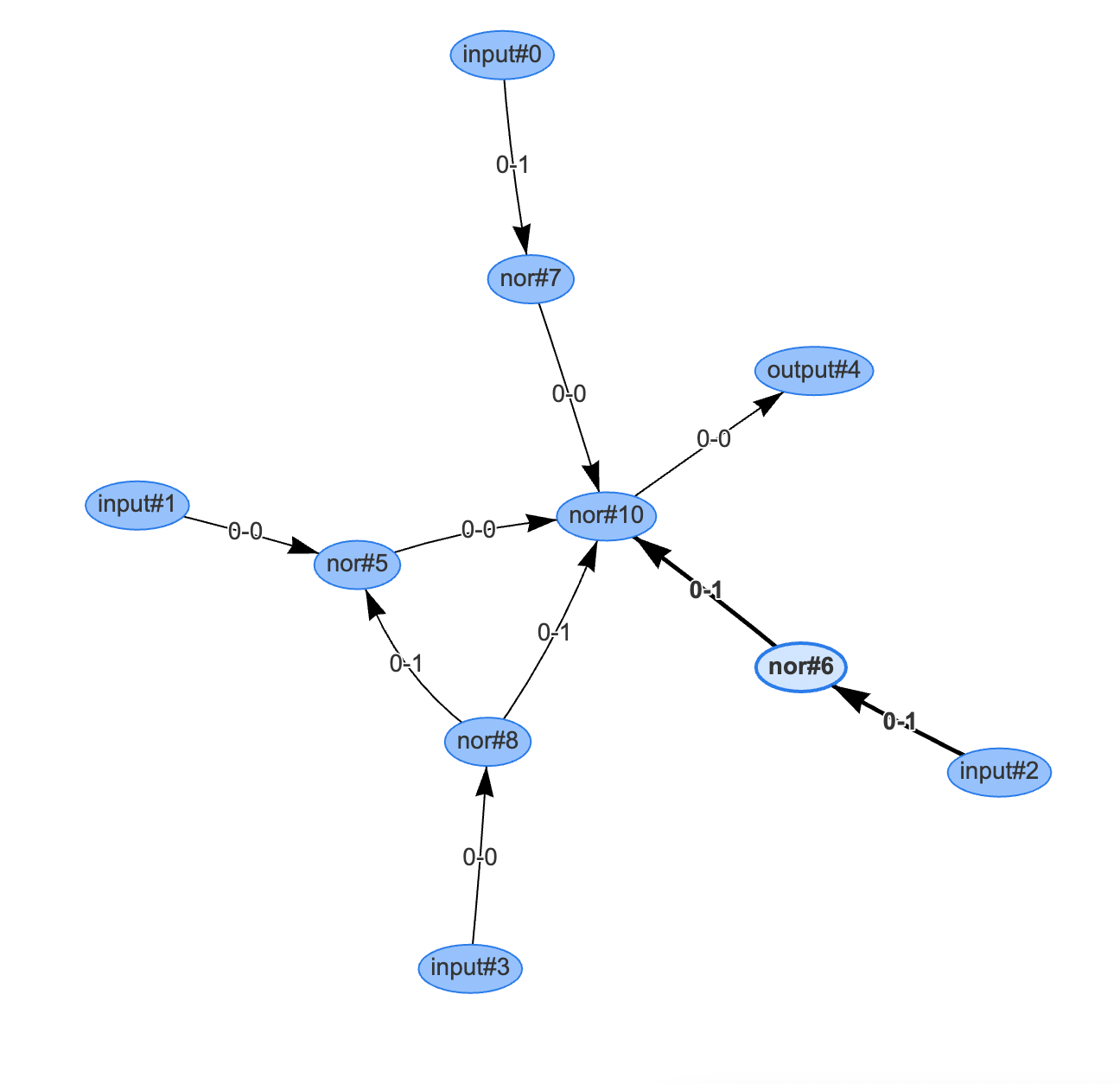

I'm developing a program that tries to build logic circuit from NOR gates given some fitness function (logic_circuit => fitness_value).

currently I'm using some kind of simulated annealing(make N random mutations to logic circuit graph and calculate fitness of new solution if fitness high enough than accept as current solution)

is there more efficient algorithms for this kind of problem?

CodePudding user response:

Multi-level logic synthesis has been a research topic for decades. To apply a sequence of local transforms for circuit improvement is a strategy pursued by the Berkeley ABC system.

A related paper is SAT-Based Logic Optimization and Resynthesis. A recent publication is Reinforcement Learning for Scalable Logic Optimization with Graph Neural Networks.

Usually, the local transforms start out with a correct circuit and try to improve it without touching its correctness. To transform some arbitrary circuit into a correct one does not look promising to me.

The truth-table of a circuit with n inputs has 2^n rows. To check the correctness, the optimizer has to check all 2^n values. The number of matches (= this fitness measure) is between 0 and 2^n. There are typically many possible ways to transform the circuits. Therefore, the tree of alternatives quickly explodes for more than a handful inputs.

A possible search approach would be to decompose the function F to be implemented into two simpler functions F1 and F2 such that

F(a, b, ....) = NOR(F1(a, b, ....), F2(a, b, ....))

This split can be optimized to minimize the complexity of the sub-functions F1 and F2.

The approach is recursive. F1 and F2 are decomposed into sub-functions. The recursion ends, if a function just represents a constant or a single input variable.

The resulting circuit is a tree of two-input NOR gates. It might be possible to re-use already synthesized sub-functions or allow NOR gates with varying number of inputs (INV, NOR2, NOR3, ...).