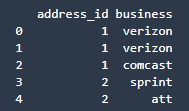

I have a small dataframe, like this.

import pandas as pd

import numpy as np

# data's stored in dictionary

details = {

'address_id': [1, 1, 1, 2, 2],

'business': ['verizon', 'verizon', 'comcast', 'sprint', 'att']

}

df = pd.DataFrame(details)

print(df)

I am trying to find out if, and when a person switched to a different cell phone service.

I tried this logic; didn't work.

df['new'] = df.Column1.isin(df.Column1) & df[~df.Column2.isin(df.Column2)]

Basically, given index row 0 and row 1, when the address_id was the same, the business was the same, but the business changed from verizon to comcast in index row 2. Also, given index row 3 and row 4, the address_id was the same, but the business changed from sprint to att in index row 4. I'd like to add a new column to the dataframe to flag these changes. How can I do that?

CodePudding user response:

UPDATE: A comment by @rickhg12hs points out that my earlier answers (see below), while detecting when a person switches to a new cell phone service, do not handle the case of a person switching back to a previous service.

To handle this possibility, we must use something like the logic in another answer (@Pranav Hosangadi), though I would do it slightly differently:

df['new'] = ( df

.groupby('address_id', sort=False)

.apply(lambda x: x.business != x.business.shift().bfill())

.reset_index(0).business )

Input:

address_id business

0 1 verizon

1 1 verizon

2 1 comcast

3 2 sprint

4 2 att

5 2 sprint

Output:

address_id business new

0 1 verizon False

1 1 verizon False

2 1 comcast True

3 2 sprint False

4 2 att True

5 2 sprint True

Performance comparison:

Here is test code for 600k rows and 5 columns, with results showing that PREVIOUS UPDATE takes about 0.1 seconds to identify 333815 rows for which new==True, while UPDATE takes about 35 seconds to find 335334 True rows, reflecting about 0.5% additional rows for which a person has switched cell phone services and then switched back.

rng = np.random.default_rng()

details = {

'address_id': rng.integers(1,100_000, size=600_000),

'business': [['verizon','comcast','sprint','att'][i] for i in rng.integers(0,3, size=600_000)],

'foo': 1,

'bar': 2

}

df = pd.DataFrame(details)

print('groupby() ...')

start = datetime.now()

x = ( df

.groupby('address_id', sort=False) )

print(f'... complete after {datetime.now() - start} time elapsed.')

print('apply() ...')

start = datetime.now()

x = ( x

.apply(lambda x: x.business != x.business.shift().bfill()) )

print(f'... complete after {datetime.now() - start} time elapsed.')

print('reset_index() ...')

start = datetime.now()

df['new'] = ( x

.reset_index(0).business )

print(f'... complete after {datetime.now() - start} time elapsed.')

print(df)

print('rows with "new" == True', df.new.sum())

df = pd.DataFrame(details)

print('PREVIOUS UPDATE() ...')

start = datetime.now()

df['new'] = df.address_id.map(df.groupby('address_id').first().business) != df.business

print(f'... complete after {datetime.now() - start} time elapsed.')

print('rows with "new" == True', df.new.sum())

Results:

groupby() ...

... complete after 0:00:00 time elapsed.

apply() ...

... complete after 0:00:33.541322 time elapsed.

reset_index() ...

... complete after 0:00:00.040942 time elapsed.

address_id business foo bar new

0 20223 sprint 1 2 False

1 29297 comcast 1 2 False

2 92489 comcast 1 2 False

3 29297 verizon 1 2 True

4 98901 comcast 1 2 False

... ... ... ... ... ...

599995 29823 comcast 1 2 True

599996 39328 comcast 1 2 True

599997 27594 comcast 1 2 False

599998 14903 sprint 1 2 True

599999 87375 verizon 1 2 True

[600000 rows x 5 columns]

rows with "new" == True 335334

PREVIOUS UPDATE() ...

... complete after 0:00:00.097930 time elapsed.

rows with "new" == True 333815

PREVIOUS UPDATE: Here is an even simpler way than my original answer using join() (see below) to do what your question asks:

df['new'] = df.address_id.map(df.groupby('address_id').first().business) != df.business

Explanation:

- Use

groupby()andfirst()to create a dataframe whosebusinesscolumn contains the first one encountered for eachaddress_id - Use

Series.map()to transform the original dataframe'saddress_idcolumn into this firstbusinessvalue - Add column

newwhich isTrueonly if this newbusinessdiffers from the originalbusinesscolumn.

ORIGINAL SOLUTION:

Here is a simple way to do what you've asked using groupby() and join():

df = df.join(df.groupby('address_id').first(), on='address_id', rsuffix='_first')

df = df.assign(new=df.business != df.business_first).drop(columns='business_first')f

Output:

address_id business new

0 1 verizon False

1 1 verizon False

2 1 comcast True

3 2 sprint False

4 2 att True

Explanation:

- Use

groupby()andfirst()to create a dataframe whosebusinesscolumn contains the first one encountered for eachaddress_id - Use

join()to add a columnbusiness_firsttodfcontaining the corresponding first business for eachaddress_id - Use

assign()to add a columnnewcontaining a boolean indicating whether the row contains a newbusinesswith an existingaddress_id - Use

drop()to eliminate thebusiness_firstcolumn.

CodePudding user response:

First, groupby on address_id.

groups = df.groupby("address_id")

Then, iterate over the groups, and find where the value of business changes:

for address_id, grp_data in groups:

changed = grp_data['business'].ne(grp_data['business'].shift().bfill())

df.loc[grp_data.index, "changed"] = changed

.shift().bfill() shifts all data one index over (0 -> 1, 1 -> 2, and so on), and then backfills the first value. For example:

>>> df["business"]

0 verizon

1 verizon

2 comcast

3 sprint

4 att

Name: business, dtype: object

>>> df["business"].shift()

0 NaN

1 verizon

2 verizon

3 comcast

4 sprint

Name: business, dtype: object

>>> df["business"].shift().bfill()

0 verizon

1 verizon

2 verizon

3 comcast

4 sprint

Name: business, dtype: object

Running the loop makes the following dataframe:

address_id business changed

0 1 verizon False

1 1 verizon False

2 1 comcast True

3 2 sprint False

4 2 att True