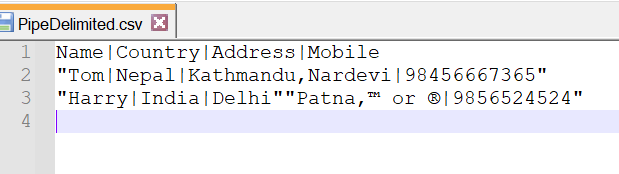

I have a pipe delimited csv file whose data are to be inserted into sql server.

Then I

Opened that file in excel and added comma in column values.

Then saved.

Then I bulk inserted that file into sql server.

BULK INSERT #temp1 FROM '\\as03\AppData\PipeDelimited.csv' WITH ( FIRSTROW = 2 , FIELDTERMINATOR ='|' , ROWTERMINATOR='\n' , ERRORFILE ='\\as03\AppData\ErrorFiles\PipeDelimited.csv' , CODEPAGE = '65001' **strong text**, MAXERRORS = 99999999 )

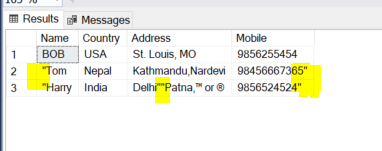

But got the double quotes (") in first and last column values and also got two consecutive double quotes ("") where one double quote was already in file.

Here is the data inserted in sql server.

Is where some way to insert data in sql server by ignoring double quotes that were added by excel or notepad????

CodePudding user response:

This appears to be a non-(PowerShell)issue as it actually works fine in PowerShell:

$Csv = @'

Name|Country|Address|Mobile

Tom|Nepal|Kathmandu,Nardevi|98456667365

Harry|India|Delhi"Patna,™ or R|9856524524

'@ -Split '[\r?\n]'

$Csv |ConvertFrom-Csv -Delimiter '|' # Import-Csv .\PipeDelimited.csv -Delimiter '|'

Yields:

Name Country Address Mobile

---- ------- ------- ------

Tom Nepal Kathmandu,Nardevi 98456667365

Harry India Delhi"Patna,™ or R 9856524524

In other words, you might simply convert your PipeDelimited.csv to a more common CommaDelimited.csv with text indicators like:

Import-Csv .\PipeDelimited.csv -Delimiter '|' |Export-Csv .\CommaDelimited.csv

CodePudding user response:

Your file was corrupted by editting it in Excel and saving it as a CSV. The best solution is to not use Excel to edit such files, but rather either use scripting or a text editor (depending on the complexity - just adding a comma to one field feels easiest in a text editor).

However; if we're saying the damage is done and you need a script to fix the issues caused you could run something like this; this reads in the data as plain text, applies a regex to remove the offending quotes, then spits the result out to a copy of the file (I've written to a copy rather than back to the original so you can rollback more easily if this wasn't what you wanted).

[string]$sourcePath = '.\PipeDelimited.csv'

[string]$outputPath = '.\PipeDelimitedCorrected.csv'

[System.Text.Encoding]$encoding = [System.Text.Encoding]::UTF8 # based on your code page

[string[]]$data = [System.IO.File]::ReadAllLines($sourcePath, $encoding)

$data = $data | ForEach-Object {((($_ -replace '^"', '') -replace '"$', '') -replace '""', '"')}

# replaces quotes at the start and end of the line with blanks,

# then replaces escaped double quotes with individual double quotes...

# it's a quick and dirty approach, but looking at your example should be sufficient

[System.IO.File]::WriteAllLines($outputPath, $data, $encoding)