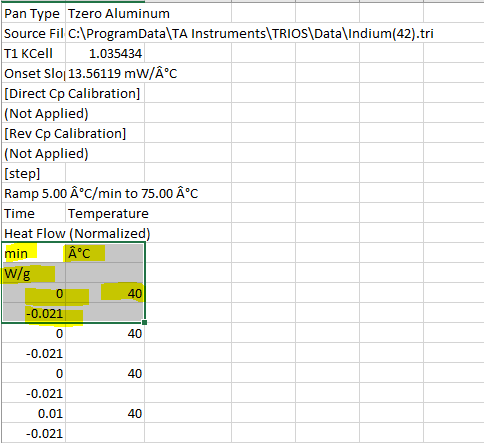

I used previously posted code to convert my .txt files to .csv. However, after conversion the rows are all mixed up in the csv file. There are 3 variables, which need to be in separate columns, but instead the code has pasted one of the variables below the first variable (see screenshots). Can someone help me fix this? Thanks

My code:

setwd("C:/Users/maany/Desktop/test/")

filelist = list.files(pattern = ".txt")

for (i in 1:length(filelist)){

input<-filelist[i]

output<-paste0(gsub("\\.txt$", "", input), ".csv")

print(paste("Processing the file:", input))

data = read.delim(input, header = TRUE)

setwd("C:/Users/maany/Desktop/test/done")

write.table(data, file=output, sep=",", col.names=TRUE, row.names=FALSE)

setwd("C:/Users/maany/Desktop/test/")

}

My txt file:

CodePudding user response:

Skip the first 9 lines of the .txt file when reading it:

data = read.delim(input, header = TRUE, skip = 9)

You'll get

head(data)

min X.C W.g

1 0.00 40.01 -0.084

2 0.00 40.01 -0.082

3 0.00 40.01 -0.081

4 0.01 40.01 -0.080

5 0.01 40.01 -0.079

6 0.01 40.01 -0.078
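Putting that change back into the loop from the question could look like the sketch below. It assumes every file has the same 9-line preamble before the table. The function name convert_txt_to_csv and its arguments are made up for illustration, and it builds paths with file.path rather than calling setwd inside the loop.

```r
# Hypothetical helper: convert every .txt file in in_dir to a .csv in out_dir.
# Assumes each file has `skip` preamble lines before the tab-delimited table.
convert_txt_to_csv <- function(in_dir, out_dir, skip = 9) {
  dir.create(out_dir, showWarnings = FALSE, recursive = TRUE)
  # "\\.txt$" matches only names ending in .txt (a bare ".txt" is a looser regex)
  files <- list.files(in_dir, pattern = "\\.txt$", full.names = TRUE)
  for (input in files) {
    output <- file.path(
      out_dir,
      paste0(tools::file_path_sans_ext(basename(input)), ".csv")
    )
    message("Processing the file: ", input)
    data <- read.delim(input, header = TRUE, skip = skip)
    write.table(data, file = output, sep = ",",
                col.names = TRUE, row.names = FALSE)
  }
  invisible(files)
}

# Example call with the folders from the question (left commented out):
# convert_txt_to_csv("C:/Users/maany/Desktop/test",
#                    "C:/Users/maany/Desktop/test/done")
```

If the preamble length differs between files, the skip value would have to be worked out per file; this sketch does not attempt that.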

CodePudding user response:

The following worked with the file in the link.

I have the original file in directory ~/Temp.

- The filename is put together with file.path;
- the output filename uses function file_path_sans_ext instead of sub/paste, it's tested and safer.

This is part one.

fl <- "TN_Barley_2016_6_5_TL_UC Tahoe_2.txt"

fl <- file.path("~/Temp", fl)

output <- tools::file_path_sans_ext(fl)

output <- paste0(output, ".csv")
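To see what the two helpers do with this file name, here is a small illustration. Nothing is read from disk, and the comparison with sub is only there to show that both approaches agree for this particular name.

```r
# file.path joins the pieces with "/" and leaves "~" unexpanded
fl <- file.path("~/Temp", "TN_Barley_2016_6_5_TL_UC Tahoe_2.txt")
print(fl)

# file_path_sans_ext drops only the final extension; the directory part stays
base <- tools::file_path_sans_ext(fl)
print(base)

# For this name, stripping the extension with a regular expression gives the same result
same <- identical(base, sub("\\.txt$", "", fl))
print(same)
```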

Now convert the first lines. Read them in with scan, replace the tab characters with commas, and write the result to file with writeLines.

txt <- scan(fl, what = character(), nmax = 9, sep = "\n")

txt <- gsub("\\t", ",", txt)

writeLines(txt, con = output)

And finally, the tabular data. This time, read and write it with the table functions read.delim and write.table, appending to the file that already holds the first lines.

df1 <- read.delim(fl, skip = 9, check.names = FALSE)

write.table(df1, file = output, append = TRUE, sep = ",",

col.names = TRUE, row.names = FALSE)
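The three parts above can be wrapped into one function so it can be applied to every file, as in the sketch below. The name txt_to_csv_keep_header and the n_header argument are hypothetical. Two details differ from the code above and are my own choices: readLines is swapped in for scan so that the number of lines copied always matches the number of lines skipped, even if the preamble contains blank lines, and both pieces are written through one open connection instead of append = TRUE, which avoids the warning write.table gives about appending column names.

```r
# Hypothetical helper: copy the first n_header lines, then the table, to a CSV
# that sits next to the input file.
txt_to_csv_keep_header <- function(fl, n_header = 9) {
  output <- paste0(tools::file_path_sans_ext(fl), ".csv")

  # Preamble: read up to n_header lines and turn tabs into commas
  txt <- readLines(fl, n = n_header)
  txt <- gsub("\t", ",", txt, fixed = TRUE)

  # Table: skip the preamble, keep column names exactly as they appear in the file
  df1 <- read.delim(fl, skip = n_header, check.names = FALSE)

  # Write both pieces through the same connection, one after the other
  con <- file(output, open = "w")
  on.exit(close(con))
  writeLines(txt, con)
  write.table(df1, file = con, sep = ",", col.names = TRUE, row.names = FALSE)

  invisible(output)
}

# Example: apply it to every .txt file in a folder (left commented out)
# files <- list.files("~/Temp", pattern = "\\.txt$", full.names = TRUE)
# invisible(lapply(files, txt_to_csv_keep_header))
```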