I have a JSON file that I am trying to unpack. Once loaded, the data looks like this:

```python
[{'batter': 'LA Marsh',
  'bowler': 'MJG Nielsen',
  'non_striker': 'M Kapp',
  'runs': {'batter': 0, 'extras': 0, 'total': 0}},
 {'batter': 'LA Marsh',
  'bowler': 'MJG Nielsen',
  'non_striker': 'M Kapp',
  'runs': {'batter': 0, 'extras': 0, 'total': 0},
  'wickets': [{'player_out': 'LA Marsh', 'kind': 'bowled'}]},
 {'batter': 'EA Perry',
  'bowler': 'MJG Nielsen',
  'non_striker': 'M Kapp',
  'runs': {'batter': 0, 'extras': 0, 'total': 0}}]
```

using the following code:

df = pd.json_normalize(data)

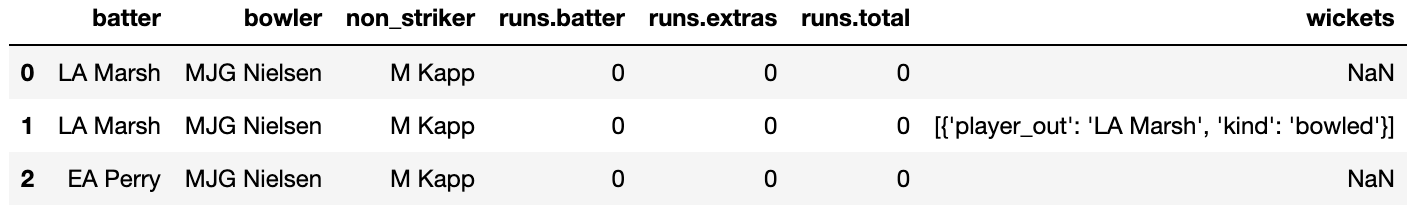

I get the following:
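For reference, a minimal reproduction of that call (assuming `data` is the list shown above, pasted inline so the snippet runs on its own). `json_normalize` flattens the nested `runs` dict into dotted columns, but it leaves the `wickets` list untouched: row 1 holds the raw list and the other rows are NaN because the key is missing.

```python
import pandas as pd

# The three deliveries from the question, inlined for a self-contained example.
data = [
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0},
     "wickets": [{"player_out": "LA Marsh", "kind": "bowled"}]},
    {"batter": "EA Perry", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
]

df = pd.json_normalize(data)
print(df.columns.tolist())   # nested dicts become runs.batter, runs.extras, ...
print(df.loc[1, "wickets"])  # the list itself is not expanded
```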

As you can see, the second entry contains a nested list under the key 'wickets'. In place of the column 'wickets' I would like to have two columns, "player_out" and "kind". My preferred output looks like this:

Answer:

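Use:

```python
# explode() gives one row per wicket dict (rows without wickets stay NaN);
# dropna() keeps only the dicts, so apply(pd.Series) does not add a stray column 0 for the NaN rows;
# join() aligns on the row index, leaving NaN where there was no wicket.
df = df.drop(columns=['wickets']).join(df['wickets'].explode().dropna().apply(pd.Series))
```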
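A runnable sketch of the same explode-and-join idea on the question's data. One swap to note: instead of `apply(pd.Series)` it builds the wicket columns with the `pd.DataFrame` constructor from the list of dicts, passing the exploded index so the join still lines up row by row. If a delivery ever had several wickets, the repeated index would duplicate that delivery's row in the result.

```python
import pandas as pd

data = [
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0},
     "wickets": [{"player_out": "LA Marsh", "kind": "bowled"}]},
    {"batter": "EA Perry", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
]

df = pd.json_normalize(data)

# One entry per wicket dict; deliveries without wickets (NaN) are dropped here.
wickets = df["wickets"].explode().dropna()
# Expand the dicts into columns, keeping the original row labels for the join.
wicket_cols = pd.DataFrame(wickets.tolist(), index=wickets.index)

# Left join on the index: rows without a wicket get NaN in player_out/kind.
df = df.drop(columns=["wickets"]).join(wicket_cols)
print(df)
```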

Answer:

You can try:

```python
import pandas as pd
from collections.abc import MutableMapping  # collections.MutableMapping was removed in Python 3.10


def flatten(d, parent_key='', sep='.'):
    """Flatten nested dicts (and lists of dicts) into one dict with dotted keys."""
    items = []
    for k, v in d.items():
        new_key = parent_key + sep + k if parent_key else k
        if isinstance(v, MutableMapping):
            items.extend(flatten(v, new_key, sep=sep).items())
        elif isinstance(v, list):
            # Every list element is flattened under the same key, so if a list
            # holds several dicts, later ones overwrite earlier ones.
            for value in v:
                if isinstance(value, MutableMapping):
                    items.extend(flatten(value, new_key, sep=sep).items())
                else:
                    items.append((new_key, value))
        else:
            items.append((new_key, v))
    return dict(items)


data = [{'batter': 'LA Marsh',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0}},
        {'batter': 'LA Marsh',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0},
         'wickets': [{'player_out': 'LA Marsh', 'kind': 'bowled'}]},
        {'batter': 'EA Perry',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0}}]

# Flatten each record, then let the DataFrame constructor align the keys.
output = [flatten(dict_data) for dict_data in data]

df = pd.DataFrame(output)

print(df)
```

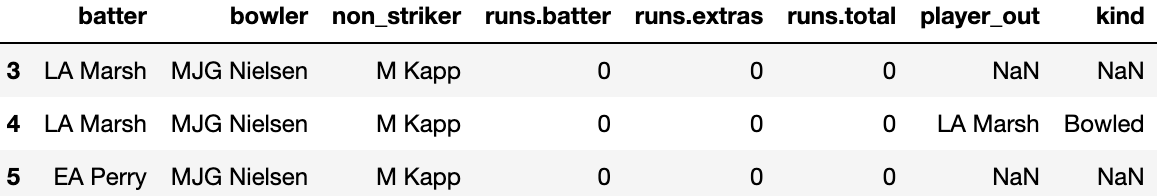

Output:

```
     batter       bowler  non_striker  runs.batter  runs.extras  runs.total  wickets.player_out  wickets.kind
0  LA Marsh  MJG Nielsen       M Kapp            0            0           0                 NaN           NaN
1  LA Marsh  MJG Nielsen       M Kapp            0            0           0            LA Marsh        bowled
2  EA Perry  MJG Nielsen       M Kapp            0            0           0                 NaN           NaN
```
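With the `flatten()` function above, each element of a list is flattened under the same key, so a list holding more than one dict keeps only the last dict's values. A minimal sketch of a hypothetical variant, `flatten_indexed` (a name introduced here, not part of the answer), that puts each element's position into the key instead. On the question's data it would produce `wickets.0.player_out` rather than `wickets.player_out`; the two-dict record below is made up for illustration.

```python
from collections.abc import MutableMapping


def flatten_indexed(d, parent_key="", sep="."):
    """Hypothetical variant of flatten(): list elements get their index in the key."""
    items = {}
    for k, v in d.items():
        new_key = f"{parent_key}{sep}{k}" if parent_key else str(k)
        if isinstance(v, MutableMapping):
            items.update(flatten_indexed(v, new_key, sep))
        elif isinstance(v, list):
            for idx, value in enumerate(v):
                # e.g. "wickets.0", "wickets.1", ... so entries never collide
                indexed_key = f"{new_key}{sep}{idx}"
                if isinstance(value, MutableMapping):
                    items.update(flatten_indexed(value, indexed_key, sep))
                else:
                    items[indexed_key] = value
        else:
            items[new_key] = v
    return items


# Hypothetical record whose list holds two dicts.
record = {"batter": "A",
          "wickets": [{"player_out": "A", "kind": "bowled"},
                      {"player_out": "B", "kind": "run out"}]}
flat = flatten_indexed(record)
print(flat)
```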

Answer:

If you want to keep using json_normalize, you need to first homogenize the data so that every entry has a 'wickets' list, and then apply json_normalize with record_path:

```python
import pandas as pd

data = [{'batter': 'LA Marsh',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0}},
        {'batter': 'LA Marsh',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0},
         'wickets': [{'player_out': 'LA Marsh', 'kind': 'bowled'}]},
        {'batter': 'EA Perry',
         'bowler': 'MJG Nielsen',
         'non_striker': 'M Kapp',
         'runs': {'batter': 0, 'extras': 0, 'total': 0}}]

# homogenize data: give every entry a 'wickets' list (this modifies `data` in place)
nan_entries = [{'player_out': pd.NA, 'kind': pd.NA}]
for entry in data:
    if 'wickets' not in entry:
        entry['wickets'] = nan_entries

# use json_normalize: one row per wicket record, with the delivery fields as meta columns
df = pd.json_normalize(data,
                       record_path='wickets',
                       meta=['batter', 'bowler', 'non_striker', ['runs', 'batter'],
                             ['runs', 'extras'], ['runs', 'total']],
                       record_prefix='wickets.')
print(df)
```

Output:

```
   wickets.player_out  wickets.kind    batter       bowler  non_striker  runs.batter  runs.extras  runs.total
0                <NA>          <NA>  LA Marsh  MJG Nielsen       M Kapp            0            0           0
1            LA Marsh        bowled  LA Marsh  MJG Nielsen       M Kapp            0            0           0
2                <NA>          <NA>  EA Perry  MJG Nielsen       M Kapp            0            0           0
```
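Why the homogenizing step is needed, plus a variant that leaves the input untouched: with `record_path='wickets'`, `json_normalize` raises a `KeyError` as soon as it reaches an entry without that key. The sketch below (the names `meta`, `placeholder` and `padded` are illustrative) builds padded copies with a dict merge instead of editing `data` in place; `entry.get('wickets') or [...]` also covers an empty list, which would otherwise contribute no row at all.

```python
import pandas as pd

data = [
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
    {"batter": "LA Marsh", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0},
     "wickets": [{"player_out": "LA Marsh", "kind": "bowled"}]},
    {"batter": "EA Perry", "bowler": "MJG Nielsen", "non_striker": "M Kapp",
     "runs": {"batter": 0, "extras": 0, "total": 0}},
]

meta = ["batter", "bowler", "non_striker",
        ["runs", "batter"], ["runs", "extras"], ["runs", "total"]]

# Without homogenizing, record_path fails on the first entry lacking 'wickets'.
missing_path_error = None
try:
    pd.json_normalize(data, record_path="wickets", meta=meta, record_prefix="wickets.")
except KeyError as exc:
    missing_path_error = exc
    print("KeyError:", exc)

# Pad copies of the entries instead of mutating `data`.
placeholder = {"player_out": pd.NA, "kind": pd.NA}
padded = [{**entry, "wickets": entry.get("wickets") or [dict(placeholder)]}
          for entry in data]

df = pd.json_normalize(padded, record_path="wickets", meta=meta, record_prefix="wickets.")
print(df)
```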