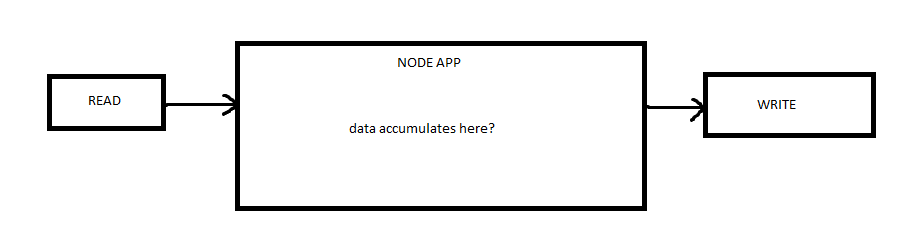

I have a readStream piped to a writeStream. The read stream reads from the internet and the write stream writes to my local database instance. I noticed that the read speed is much faster than the write speed, and my app's memory usage rises until it fails with:

JavaScript heap out of memory

I suspect that the read data accumulates in the Node.js app like this:

How can I limit the read stream so that it reads only as much as the write stream is able to write at any given time?

CodePudding user response:

If you do not handle the data appropriately, you will run into memory issues, and this is what happened in your case: chunks that have been read but not yet written pile up in memory. That is why you see that message.

Here is a small example demonstrating how you could rate limit your reads and handle writes accordingly while streaming the data.

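const fs = require('fs');

const readableStream = fs.createReadStream('./src/roughdata.json'); // Read from
const writableStream = fs.createWriteStream('./src/data.json'); // Write to

readableStream.setEncoding('utf8'); // decode chunks as UTF-8 strings

let chunk; // piece of data (size requested via byteSize)
let count = 0; // bytes counter
const byteSize = 10; // requested size of each read() call
let waitingForDrain = false; // true while the writer's buffer is full

function pump() {
  if (waitingForDrain) return; // do not read while the writer is catching up
  while ((chunk = readableStream.read(byteSize)) !== null) {
    count += Buffer.byteLength(chunk); // add this chunk's size to the running total
    if (!writableStream.write(chunk)) {
      // write() returned false: stop reading until the writer emits 'drain'
      waitingForDrain = true;
      writableStream.once('drain', function () {
        waitingForDrain = false;
        pump();
      });
      return;
    }
  }
}

readableStream.on('readable', pump);

readableStream.on('end', function () {
  writableStream.end(); // flush remaining data and close the file
  console.log(`${count} bytes read and written.`);
});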
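For the simple case of one source and one destination, the manual loop can usually be replaced by Node's built-in `pipeline()`, which pauses the source whenever the destination's buffer is full. The following is only a minimal sketch, assuming Node.js 15 or later (for `stream/promises`); `copyStream` and `makeSlowWriter` are hypothetical names used for illustration, and the slow writer merely stands in for a database client.

```javascript
const { pipeline } = require('stream/promises'); // assumes Node.js 15+
const { Readable, Writable } = require('stream');

// Hypothetical helper: copy `source` into `destination` and resolve when done.
// pipeline() applies backpressure, so the source is not read faster than
// the destination can accept data.
async function copyStream(source, destination) {
  await pipeline(source, destination);
}

// Hypothetical stand-in for a slow database writer (illustration only).
function makeSlowWriter(rows, delayMs) {
  return new Writable({
    objectMode: true,
    highWaterMark: 2, // buffer at most 2 rows before signalling backpressure
    write(row, encoding, callback) {
      rows.push(row); // pretend this is the database insert
      setTimeout(callback, delayMs); // acknowledge the write after a delay
    },
  });
}

// Usage: stream a few in-memory rows into the slow writer.
const demoRows = [];
copyStream(Readable.from(['row 1', 'row 2', 'row 3']), makeSlowWriter(demoRows, 10))
  .then(() => console.log(`wrote ${demoRows.length} rows`))
  .catch((err) => console.error('copy failed:', err));
```

Because `pipeline()` also forwards errors and cleans up both streams, it tends to be a safer default than wiring the events by hand.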

CodePudding user response:

OK, long story short: the mechanism you need to be aware of to solve this kind of issue is backpressure. It is not a problem when you use Node's standard pipe(), which handles it for you. I was using a custom fan-out to multiple streams, which is why it happened.

You can read about it here: https://nodejs.org/en/docs/guides/backpressuring-in-streams/
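As a rough illustration of what a backpressure-aware fan-out could look like (this is a sketch, not the code from my app), the idea is to stop pulling from the source until every target that reported a full buffer has emitted 'drain'. The names `fanOut` and `makeSink` are hypothetical, and the in-memory sinks are stand-ins for real destinations.

```javascript
const { Readable, Writable } = require('stream');
const { once } = require('events');

// Hypothetical helper: copy every chunk from `source` to all `targets`,
// waiting for slow targets before pulling the next chunk.
async function fanOut(source, targets) {
  for await (const chunk of source) {
    const pending = [];
    for (const target of targets) {
      // write() returns false when the target's internal buffer is full
      if (!target.write(chunk)) {
        pending.push(once(target, 'drain'));
      }
    }
    // Do not read the next chunk until every full target has drained
    await Promise.all(pending);
  }
  // Close all targets and wait until they have flushed everything
  await Promise.all(targets.map((target) => {
    const finished = once(target, 'finish'); // listen before calling end()
    target.end();
    return finished;
  }));
}

// Hypothetical in-memory sink with a tiny buffer and an artificial delay.
function makeSink(delayMs, received) {
  return new Writable({
    highWaterMark: 4, // tiny buffer so backpressure kicks in quickly
    write(chunk, encoding, callback) {
      received.push(chunk.toString());
      setTimeout(callback, delayMs);
    },
  });
}

// Usage: one fast sink and one slow sink receive the same chunks.
const fastLog = [];
const slowLog = [];
fanOut(Readable.from(['chunk-1', 'chunk-2', 'chunk-3']), [
  makeSink(0, fastLog),
  makeSink(20, slowLog),
]).then(() => console.log(fastLog.length, slowLog.length))
  .catch((err) => console.error('fan-out failed:', err));
```

The trade-off of this design is that the whole fan-out runs at the speed of the slowest target, which is what keeps memory usage bounded.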