I am building a project in the PyCharm IDE using PySpark. Spark installed successfully and can be called from the command prompt. The interpreter is also configured correctly in the project settings. I also tried pip install pyspark.

main.py looks like this:

import os

# Point PySpark at the local Spark installation.
os.environ["SPARK_HOME"] = "/usr/local/spark"

from pyspark import SparkContext
import pyspark
from pyspark.sql import SparkSession
from pyspark.sql.types import *
import pyspark.sql.functions as F

from genericFunc import genericFunction
from config import constants

spark = genericFunction.start_data_pipeline()

# Build each input path by concatenating the folder path and the file name.
inputDf = genericFunction.read_json(constants.INPUT_FOLDER_PATH + "file-000.json")
inputDf1 = genericFunction.read_json(constants.INPUT_FOLDER_PATH + "file-001.json")

and the genericFunction module looks like this:

from pyspark.sql import SparkSession

print('w')


def start_data_pipeline():
    '''
    Set up the Spark session and return it to the caller (main.py).
    '''
    spark = SparkSession \
        .builder \
        .appName("Nike ETL") \
        .getOrCreate()
    return spark


def read_json(file_name):
    '''
    Read the JSON file at file_name and return it as a DataFrame.
    '''
    spark = start_data_pipeline()
    df = spark.read \
        .option("header", "true") \
        .option("inferSchema", "true") \
        .json(file_name)
    return df


def load_as_csv(df, file_name):
    '''
    Write df as CSV with a header row to file_name, using a single partition.
    '''
    df.repartition(1).write.format('com.databricks.spark.csv') \
        .save(file_name, header='true')

Error:

Unresolved reference 'genericFunc'

"C:\Users\MY PC\PycharmProjects\pythonProject1\venv\Scripts\python.exe" C:/Capgemini/cv/tulsi/test-tulsi/main.py

Traceback (most recent call last):
  File "C:/Capgemini/cv/tulsi/test-naveen/main.py", line 6, in <module>
    from pyspark import SparkContext
ImportError: No module named pyspark

Process finished with exit code 1

Please help

CodePudding user response:

You don't have pyspark installed in a place available to the Python installation you're using. To confirm this, open a terminal, activate your virtualenv, start the Python REPL (python), and type import pyspark.

If you see the ImportError "No module named pyspark", you need to install that library. Quit the REPL and type:

pip install pyspark

Then re-enter the REPL and run import pyspark again to confirm it works.
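The same before-and-after check can also be scripted, which makes it easy to run with exactly the interpreter PyCharm uses. This is only a minimal sketch: check_import is a hypothetical helper name, not part of pyspark or PyCharm, and the script simply reports what the current interpreter can see.

```python
import importlib
import sys


def check_import(module_name):
    """Try to import module_name; return (ok, detail).

    detail is the module's file path on success, or the
    ImportError message on failure.
    """
    try:
        module = importlib.import_module(module_name)
    except ImportError as exc:
        return False, str(exc)
    return True, getattr(module, "__file__", "(built-in)")


# Report which interpreter is running and whether it can see pyspark.
ok, detail = check_import("pyspark")
print("interpreter:", sys.executable)
print("pyspark importable:", ok, "-", detail)
```

If the interpreter path printed here differs between the terminal and a PyCharm run, the two are using different Python installations.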

As a note, it is critical that your virtual environment is activated. From the directory of your virtual environment:

$ source bin/activate

These instructions are for a Unix-based machine. On Windows, the equivalent is to run Scripts\activate from the same directory.

CodePudding user response:

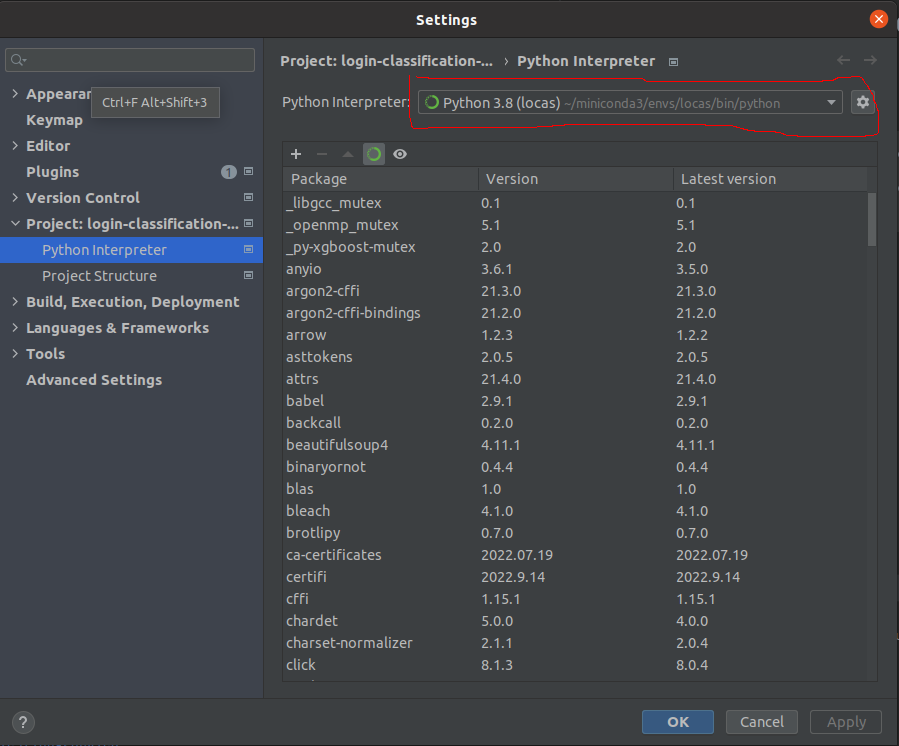

The problem is that PyCharm creates its own virtual environment (venv) for the project, and that venv does not have the packages installed, in this case pyspark. So you need to point PyCharm to the Python installation where the packages are available.

Go to File -> Settings -> Project -> Python Interpreter and change the interpreter to the Python installation that has the packages. To find it, run this in a Python shell where import pyspark works:

>>> import os
>>> import sys
>>> os.path.dirname(sys.executable)
'C:\\Doc\\'
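To compare the terminal and PyCharm side by side, a slightly fuller report can help. The sketch below assumes Python 3; describe_environment is a hypothetical helper name introduced only for illustration. It shows the interpreter path, whether that interpreter is a virtual environment, and where pyspark would be loaded from, without actually importing it.

```python
import importlib.util
import sys


def describe_environment(module_name="pyspark"):
    """Summarize the running interpreter and where module_name lives."""
    spec = importlib.util.find_spec(module_name)
    # In a venv, sys.prefix points at the venv and sys.base_prefix
    # at the Python installation it was created from.
    base_prefix = getattr(sys, "base_prefix", sys.prefix)
    return {
        "executable": sys.executable,
        "in_virtualenv": sys.prefix != base_prefix,
        "module_location": spec.origin if spec is not None else None,
    }


for key, value in describe_environment().items():
    print(key, "=", value)
```

Run it once from the terminal where pyspark works and once as a PyCharm run configuration. If module_location is None in PyCharm only, the project interpreter is the one missing the package.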