I'm using an AHK script to dump the current clipboard contents (a copy of part of a Microsoft OneNote page) to a file.

I would like to search this binary file for a specific string, replace it, and then be able to import the file back into AHK.

I tried the following, but it looks like PowerShell is doing something additional to the file (like changing the encoding), and importing the file back into the clipboard fails.

$ThisFile = 'B:\Users\Desktop\onenote-new-entry.txt'

$data = Get-Content $ThisFile

$data = $data.Replace('asdf','TESTREPLACE!')

$data | Out-File -encoding utf8 $ThisFile

Any suggestions for doing a string replacement in the file without changing its existing encoding?

I tried modifying the file manually in a text editor and that works fine. Obviously, though, I would like the modifications to be done en masse and automatically, which is why I need a script.

The text copied from OneNote and then dumped to file via AHK looks like this:

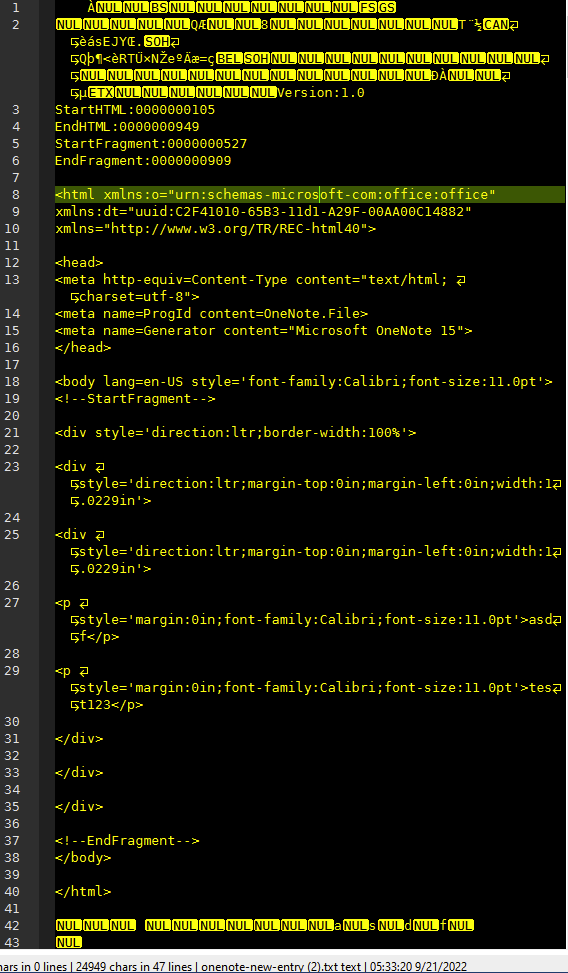

However, note the clipboard dump file has a lot of other meta-data as shown below when opened in an editor. To download for testing with PS,

CodePudding user response:

Since your file is a mix of binary data and UTF-8 text, you cannot use text processing (as you tried with Out-File -Encoding utf8), because the binary data would invariably be interpreted as text too, resulting in its corruption.
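As a minimal sketch of why this happens (the sample bytes are hypothetical, not taken from the actual clipboard dump): decoding arbitrary bytes as UTF-8 and encoding the resulting text again does not necessarily give the original bytes back.

```powershell
# Hypothetical sample: ASCII 'A', two bytes that are never valid in UTF-8, ASCII 'B'.
$original = [byte[]] (0x41, 0xFF, 0xFE, 0x42)

# Decode as UTF-8 text, then encode back to bytes, as a text-based round trip would.
$text = [Text.Encoding]::UTF8.GetString($original)
$roundTripped = [Text.Encoding]::UTF8.GetBytes($text)

# Print both as hex strings; they differ, because the invalid bytes
# were substituted with a replacement character during decoding.
[BitConverter]::ToString($original)
[BitConverter]::ToString($roundTripped)
```

The valid ASCII bytes survive the round trip, but the binary bytes do not, which is the kind of corruption that makes the clipboard import fail.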

PowerShell offers no simple method for editing binary files, but you can solve your problem via an auxiliary "hex string" representation of the file's bytes:

# To compensate for a difference between Windows PowerShell and PowerShell (Core) 7

# with respect to how byte processing is requested: -Encoding Byte vs. -AsByteStream

$byteEncParam =

if ($IsCoreCLR) { @{ AsByteStream = $true } }

else { @{ Encoding = 'Byte' } }

# Read the file *as a byte array*.

$ThisFile = 'B:\Users\Desktop\onenote-new-entry.txt'

$data = Get-Content @byteEncParam -ReadCount 0 $ThisFile

# Convert the array to a "hex string" in the form "nn-nn-nn-...",

# where nn represents a two-digit hex representation of each byte,

# e.g. '41-42' for 0x41, 0x42, which, if interpreted as a

# single-byte encoding (ASCII), is 'AB'.

$dataAsHexString = [BitConverter]::ToString($data)

# Define the search and replace strings, and convert them into

# "hex strings" too, using their UTF-8 byte representation.

$search = 'asdf'

$replacement = 'TESTREPLACE!'

$searchAsHexString = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes($search))

$replaceAsHexString = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes($replacement))

# Perform the replacement.

$dataAsHexString = $dataAsHexString.Replace($searchAsHexString, $replaceAsHexString)

# Convert the modified "hex string" back to a byte[] array.

$modifiedData = [byte[]] ($dataAsHexString -split '-' -replace '^', '0x')

# Save the byte array back to the file.

Set-Content @byteEncParam $ThisFile -Value $modifiedData
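To try the technique without touching a real file, here is a small in-memory sketch. The sample bytes and the variable names `$sample`, `$hex`, and `$modified` are made up for illustration; the idea is only to check that the bytes surrounding the text are left alone.

```powershell
# Hypothetical sample: two binary bytes, some UTF-8 text, one more binary byte.
[byte[]] $sample = (0x00, 0xFF) + [Text.Encoding]::UTF8.GetBytes('xxasdfxx') + 0xFE

# Same steps as above: bytes -> "hex string", replace, "hex string" -> bytes.
$hex = [BitConverter]::ToString($sample)
$searchHex = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes('asdf'))
$replaceHex = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes('TESTREPLACE!'))
[byte[]] $modified = $hex.Replace($searchHex, $replaceHex) -split '-' -replace '^', '0x'

# The binary bytes at the start and end are unchanged ...
$modified[0], $modified[1], $modified[-1]

# ... and the text in between now contains the replacement.
$middleText = [Text.Encoding]::UTF8.GetString($modified, 2, $modified.Length - 3)
$middleText
```

Because every byte is written as exactly two hex digits followed by a separator, a match in the hex string always starts on a byte boundary.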

Note:

The string replacement performed is (a) literal, (b) case-sensitive, and (c), for accented characters such as é, only works if the input, like string literals in .NET, uses the composed Unicode normalization form (NFC), where é is a single code point and is encoded as such (resulting in a multi-byte UTF-8 byte sequence).

More sophisticated replacements, such as regex-based ones, would only be possible if you knew how to split the file data into binary and textual parts, allowing you to operate on the textual parts directly.
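To illustrate point (c), the following sketch compares the UTF-8 bytes of é in composed and decomposed form. The variable names are illustrative only. A search string in one form will not match file data stored in the other form, because the byte sequences differ.

```powershell
# é as a single code point (U+00E9), the composed form.
$composed = [string] [char] 0xE9

# The same character in decomposed form: 'e' followed by a combining acute accent (U+0301).
$decomposed = $composed.Normalize([Text.NormalizationForm]::FormD)

$composedHex = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes($composed))
$decomposedHex = [BitConverter]::ToString([Text.Encoding]::UTF8.GetBytes($decomposed))

$composedHex     # C3-A9
$decomposedHex   # 65-CC-81
```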

Optional reading: Modifying a UTF-8 file without incidental alterations:

Note:

- The following applies to text-only files that are UTF-8-encoded.

- Unless extra steps are taken, reading and re-saving such files in PowerShell can result in unwanted incidental changes to the file. Avoiding them is discussed below.

PowerShell never preserves information about the character encoding of an input file, such as one read with Get-Content. Also, unless you use -Raw, information about the specific newline format is lost, as well as whether the file had a trailing newline or not.
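A small sketch of the difference, using a throwaway temporary file (the file content is made up for illustration):

```powershell
# Create a temporary file with LF-only newlines and a trailing newline.
$tmp = [IO.Path]::GetTempFileName()
[IO.File]::WriteAllText($tmp, "line1`nline2`n")

# Without -Raw: an array of lines; the newline format and the
# trailing newline can no longer be recovered from the result.
$lines = Get-Content $tmp
$lines.Count

# With -Raw: a single string with the newlines exactly as stored in the file.
$raw = Get-Content -Raw $tmp
$raw.Length

Remove-Item $tmp
```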

Assuming that you know the encoding:

- Read the file with Get-Content -Raw and specify the encoding with -Encoding (if necessary). You'll receive the file's content as a single, multi-line .NET string.

- Use Set-Content -NoNewLine to save the modified string back to the file, using -Encoding with the original encoding.

- Caveat: In Windows PowerShell, -Encoding utf8 invariably creates a UTF-8 file with BOM, unlike in PowerShell (Core) 7, which defaults to BOM-less UTF-8 and requires you to use -Encoding utf8BOM if you want a BOM.

- If you're using Windows PowerShell and do not want a UTF-8 BOM, use $null = New-Item -Force ... as a workaround, and pass the modified string to the -Value parameter.

Therefore:

$ThisFile = 'B:\Users\Desktop\onenote-new-entry.txt'

$data = Get-Content -Raw -Encoding utf8 $ThisFile

$data = $data.Replace('asdf','TESTREPLACE!')

# !! Note the caveat re BOM mentioned above.

$data | Set-Content -NoNewLine -Encoding utf8 $ThisFile

Streamlined reformulation, in a single pipeline:

(Get-Content -Raw -Encoding utf8 $ThisFile) |

ForEach-Object Replace 'asdf', 'TESTREPLACE!' |

Set-Content -NoNewLine -Encoding utf8 $ThisFile

With the New-Item workaround, if the output file mustn't have a BOM:

(Get-Content -Raw -Encoding utf8 $ThisFile) |

ForEach-Object Replace 'asdf', 'TESTREPLACE!' |

New-Item -Force $ThisFile |

Out-Null # suppress New-Item's output (a file-info object)
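To check whether a saved file actually starts with a UTF-8 BOM (the bytes 0xEF 0xBB 0xBF), a helper along these lines could be used. Test-Utf8Bom is a hypothetical name, not a built-in cmdlet, and the sketch reads the whole file, so it is only meant for small files. Pass a full path, because .NET file methods do not follow PowerShell's current location.

```powershell
# Hypothetical helper: returns $true if the file starts with the UTF-8 BOM.
function Test-Utf8Bom {
    param([Parameter(Mandatory)] [string] $Path)
    $bytes = [IO.File]::ReadAllBytes($Path)
    return $bytes.Length -ge 3 -and $bytes[0] -eq 0xEF -and $bytes[1] -eq 0xBB -and $bytes[2] -eq 0xBF
}

# Demonstration with a temporary file, written once with and once without a BOM.
$tmp = [IO.Path]::GetTempFileName()

[IO.File]::WriteAllText($tmp, 'abc', [Text.UTF8Encoding]::new($true))
$withBom = Test-Utf8Bom $tmp

[IO.File]::WriteAllText($tmp, 'abc', [Text.UTF8Encoding]::new($false))
$withoutBom = Test-Utf8Bom $tmp

Remove-Item $tmp
$withBom, $withoutBom
```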