It's my first time using np.where to select pixels from a BGR image. I have no idea how to select the pixels where r > g using np.where. I tried code like this:

bgr = cv2.imread('im.jpg')

bgr = np.where(bgr[1]>bgr[2],np.full_like(bgr,[255,255,255]),bgr)

cv2.imshow('result',bgr)

cv2.waitKey(0)

but it didn't seem to work. Can anybody help me?

CodePudding user response:

I think it doesn't work because the colour channels are the last dimension of your image, so bgr[1] and bgr[2] select rows of pixels rather than the green and red channels. Rewrite the slicing in np.where, something like this:

import cv2

import numpy as np

bgr = cv2.imread('im.jpg')

print(bgr.shape) # (h, w, 3)

# red (index 2) > green (index 1); the 2:3 and 1:2 slices keep a trailing axis of length 1 so the mask broadcasts over all 3 channels

bgr = np.where(bgr[..., 2:3] > bgr[..., 1:2],

               np.full_like(bgr, 255), bgr)

cv2.imshow('result', bgr)

cv2.waitKey(0)

Note that the ellipsis bgr[..., 1:2] means bgr[:, :, 1:2] here.
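
To see the shapes involved without needing an image file, here is a minimal sketch on a synthetic 2x2 array. The pixel values are made up for illustration and are not from the question; the channel order is assumed to be B, G, R, as cv2.imread returns it.

```python
import numpy as np

# Hypothetical 2x2 BGR "image" (values invented for illustration)
bgr = np.array([[[10, 20, 200], [10, 200, 20]],
                [[0, 50, 50], [5, 100, 101]]], dtype=np.uint8)

# bgr[1] indexes the first axis, so it is a row of pixels, not the green channel
row_shape = bgr[1].shape              # (width, 3)

# Slicing the last axis with 2:3 and 1:2 keeps that axis with length 1
mask = bgr[..., 2:3] > bgr[..., 1:2]  # red > green, shape (height, width, 1)

# The (h, w, 1) mask broadcasts against the (h, w, 3) arrays
result = np.where(mask, np.full_like(bgr, 255), bgr)

print(row_shape, mask.shape, result.shape)
print(result)
```

Pixels where red is strictly greater than green become white; a pixel where the two are equal is left unchanged.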

CodePudding user response:

You seem to want to make all pixels white where red exceeds green and retain the original colours elsewhere. If so, I find it clearer this way:

import cv2

# Load image

im = cv2.imread('image.png')

# Make simple synonyms for red and green

R = im[...,2]

G = im[...,1]

# Make True/False Boolean of pixels where red exceeds green

MoreRedThanGreen = R > G

# Make the image white wherever it is more red than green

im[MoreRedThanGreen,:] = [255,255,255]

# Save result

cv2.imwrite('result.png', im)

That makes this start image:

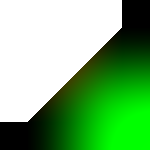

into this:

Note: Read the colon (:) in this line as meaning "all the channels":

im[MoreRedThanGreen,:] = [255,255,255]
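
As a rough check of the boolean-mask approach, here is a small sketch on a synthetic 2x2 array (the values are invented for illustration). It also compares the result with an np.where version. Note that the masked assignment modifies the array in place, so the sketch works on a copy to keep the original for comparison.

```python
import numpy as np

# Hypothetical 2x2 BGR "image" (values invented for illustration)
im = np.array([[[10, 20, 200], [10, 200, 20]],
               [[0, 50, 50], [5, 100, 101]]], dtype=np.uint8)

R = im[..., 2]
G = im[..., 1]

# 2-D Boolean mask, one entry per pixel
mask = R > G                      # shape (height, width)

# Assign white to every selected pixel, across all channels
out = im.copy()
out[mask, :] = [255, 255, 255]

# Same result via np.where, after giving the mask a trailing axis of length 1
via_where = np.where(mask[..., None], np.uint8(255), im)
same = np.array_equal(out, via_where)

print(mask)
print(same)
```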