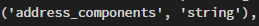

In Databricks, using PySpark, I am working on a DataFrame that has the following column, where each row is a list with multiple dictionaries:

I would like to unpack/flatten this column, so that there is a separate column for each of the dictionary values. However, the issue is that the data type of this column is a string. How can I unpack the column?

For reference, here is an example of a value:

[{"long_name":"Sofia","short_name":"Sofia","types":["locality","political"]},{"long_name":"Sofia City Province","short_name":"Sofia City Province","types":["administrative_area_level_1","political"]},{"long_name":"Bulgaria","short_name":"BG","types":["country","political"]}]

CodePudding user response:

Your string column can be converted to array of structs using from_json and providing the schema. Then you can do inline to explode it to columns.

df = spark.createDataFrame(

[('[{"long_name":"Sofia","short_name":"Sofia","types":["locality","political"]},{"long_name":"Sofia City Province","short_name":"Sofia City Province","types":["administrative_area_level_1","political"]},{"long_name":"Bulgaria","short_name":"BG","types":["country","political"]}]',)],

['address_components'])

df = df.selectExpr(

"inline(from_json(address_components, 'array<struct<long_name:string,short_name:string,types:array<string>>>'))"

)

df.show(truncate=0)

# ------------------- ------------------- ----------------------------------------

# |long_name |short_name |types |

# ------------------- ------------------- ----------------------------------------

# |Sofia |Sofia |[locality, political] |

# |Sofia City Province|Sofia City Province|[administrative_area_level_1, political]|

# |Bulgaria |BG |[country, political] |

# ------------------- ------------------- ----------------------------------------