I'm using Google BigQuery to run queries on very large datasets. Because I often exceed some limits (e.g. a query taking longer than 6 hours), I want to use batch queries, since they don't require the results to be available immediately.

After the results are available I want to write them to a pandas dataframe and continue from there.

What is the best way to wait for the results? I tried time.sleep, and although it works, there might be a better way.

import time

from google.cloud import bigquery
from google.oauth2 import service_account
import pandas as pd

key_path = "../path_to_my_credentials.json"

credentials = service_account.Credentials.from_service_account_file(
    key_path, scopes=["https://www.googleapis.com/auth/cloud-platform"],
)

client = bigquery.Client(credentials=credentials, project=credentials.project_id,)

# Run the query at batch priority so it is queued until resources are free
job_config = bigquery.QueryJobConfig(
    priority=bigquery.QueryPriority.BATCH
)

query = """
    SELECT
        name, gender,
        SUM(number) AS total
    FROM
        `bigquery-public-data.usa_names.usa_1910_2013`
    GROUP BY
        name, gender
    ORDER BY
        total DESC
    LIMIT
        10
"""

query_job = client.query(query, job_config=job_config)

query_job = client.get_job(
    query_job.job_id, location=query_job.location
)

# Poll once a minute until the job reports DONE
while query_job.state != 'DONE':
    time.sleep(60)
    query_job.reload()  # refresh the job state; otherwise it never changes locally

df = query_job.to_dataframe()

Thanks!

CodePudding user response:

Try using the native add_done_callback method instead:

For example, with your above query:

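def set_df(future):
    # Called with the finished job once the query is done
    global df
    df = future.to_dataframe()

query_job = client.query(query=query)
query_job.add_done_callback(set_df)

# df only exists after the callback has run, so printing it
# immediately after registering the callback may fail
print(df)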

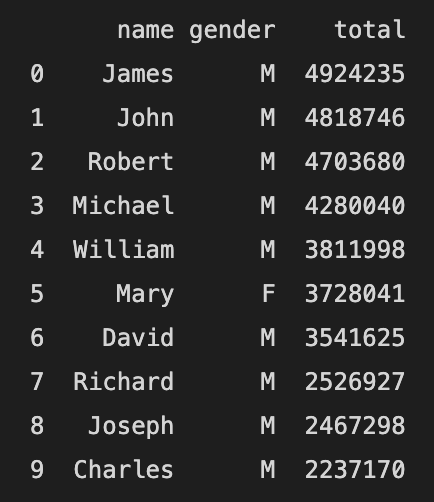

This results in the creation of a Dataframe, df, that looks like:
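To illustrate the timing involved with a done-callback, here is a minimal sketch using the standard library's concurrent.futures rather than BigQuery itself. It assumes the two behave similarly in that the callback fires only once the work has finished, so the caller still has to wait before reading the result. The names slow_task, run_with_callback, holder and finished are hypothetical and exist only for this example.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def slow_task():
    # Stand-in for a long-running job (not a real BigQuery call)
    time.sleep(0.1)
    return "placeholder result"


def run_with_callback():
    holder = {}                    # filled in by the callback
    finished = threading.Event()   # signals that the callback has run

    def on_done(future):
        # Receives the completed future, like set_df above
        holder["value"] = future.result()
        finished.set()

    pool = ThreadPoolExecutor(max_workers=1)
    future = pool.submit(slow_task)
    future.add_done_callback(on_done)

    # Reading holder right here could fail: the task may still be running.
    finished.wait(timeout=5)       # wait until the callback has stored the result
    pool.shutdown()
    return holder["value"]
```

The same idea applies to the snippet above: anything that needs df should either live inside the callback or wait for a signal that the callback has completed.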

CodePudding user response:

I think you're just missing result(), which blocks until the query job has finished:

client.query(q).result().to_dataframe()
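If you would rather keep explicit polling (for example to log progress or enforce your own timeout), the loop has to refresh the job on every pass. Below is a minimal sketch of that pattern. It assumes only that the job object exposes state and reload(), as a BigQuery QueryJob does; wait_for_job, poll_seconds and timeout are hypothetical names, not part of the BigQuery client.

```python
import time


def wait_for_job(job, poll_seconds=60.0, timeout=None):
    """Poll `job` until its state is 'DONE', refreshing it on every pass.

    `job` is assumed to expose `.state` and `.reload()`.
    Raises TimeoutError if `timeout` seconds pass first.
    """
    deadline = None if timeout is None else time.monotonic() + timeout
    while True:
        job.reload()  # re-fetch the current state from the server
        if job.state == "DONE":
            return job
        if deadline is not None and time.monotonic() >= deadline:
            raise TimeoutError(f"job not done after {timeout} seconds")
        time.sleep(poll_seconds)
```

With the question's code this would be used as df = wait_for_job(query_job).to_dataframe(). Note that DONE only means the job has stopped, not that it succeeded, so it may be worth checking query_job.error_result before relying on the output.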