I am trying to convert string type value(mm/dd/yyyy) in Date column into date format(dd-mm-yyyy). there are total of 5 date related column in my file and i want to change them into Proper date format(dd-mm-yy) from (mm/dd//yy). how can i achieve this??

df = pd.DataFrame([[10/23/2019, 09/13/2021], [06/16/2020, 03/16/2021], [09/06/2022, 12/23/2019], columns=['A', 'B'])

Output will be like 23-10-2019, 13-09-2021

My code:

df = df.withColumn('date_col',to_date('Date_col', 'dd-MM-yy'))

the code is running fine but returning undefined in output for date column. As i have 5 date columns is it possible to do it through using For Loop.

CodePudding user response:

to_date changes a column to date type, so you need to specify the format your string is in but not the target format. You should use date_format function which finally change the date column to another string of another format.

Converts a date/timestamp/string to a value of string in the format specified by the date format given by the second argument.

df.select(date_format(to_date(df.t, 'MM/dd/yyyy'), "dd-MM-yyyy").alias('date')).show()

If you only have 5 columns to change to the date type and this number will not change dynamically, I suggest you just do:

df.withColumn("col1", date_format(to_date("dt1", MM/dd/yyyy'), "dd-MM-yyyy"))

.withColumn("col2", date_format(to_date("dt2", MM/dd/yyyy'), "dd-MM-yyyy"))

.withColumn("col3", date_format(to_date("dt3", MM/dd/yyyy'), "dd-MM-yyyy"))

.withColumn("col4", date_format(to_date("dt4", MM/dd/yyyy'), "dd-MM-yyyy"))

.withColumn("col5", date_format(to_date("dt5", MM/dd/yyyy'), "dd-MM-yyyy"))

CodePudding user response:

Here are my 2 cents

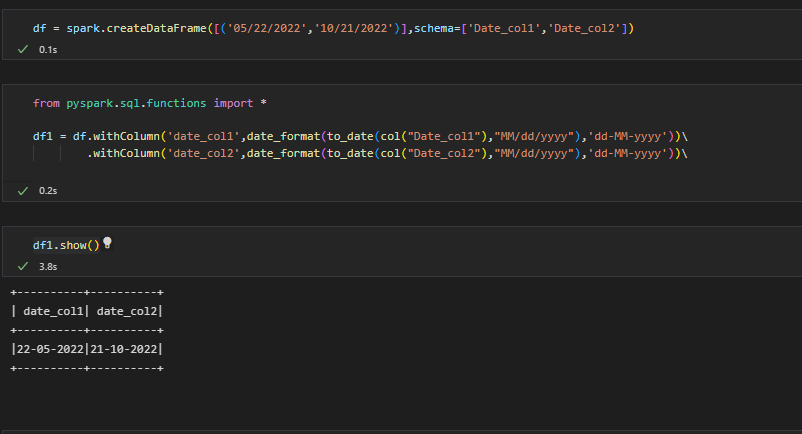

Created a data frame with 2 columns(You can create as many columns as you need)

df = spark.createDataFrame([('05/22/2022','10/21/2022')],schema=['Date_col1','Date_col2'])Use the to_date() followed by date_format()

from pyspark.sql.functions import * df1 = df.withColumn('date_col1',date_format(to_date(col("Date_col1"),"MM/dd/yyyy"),'dd-MM-yyyy'))\ .withColumn('date_col2',date_format(to_date(col("Date_col2"),"MM/dd/yyyy"),'dd-MM-yyyy'))\Print the data frame

df1.show()

Please check the below image: