I'm trying to extend the pretrained RoBERTa model and built a basic model for testing, but TensorFlow raises this error: ValueError: Output tensors of a Functional model must be the output of a TensorFlow Layer. It comes from the Keras Model API, but I don't know exactly what is causing it.

Code:

LEN_SEQ = 64

BATCH_SIZE = 16

TEST_TRAIN_SPLIT = 0.9

TRANSFORMER = 'roberta-base'

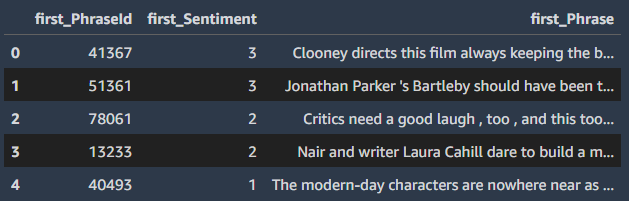

df = pd.read_csv('train-processed.csv')

df = df.head(100)

samples_count = len(df)

# Create labels

target = df['first_Sentiment'].values.astype(int)

labels = np.zeros((samples_count, target.max() + 1))

labels[np.arange(samples_count), target] = 1

tokenizer = AutoTokenizer.from_pretrained(TRANSFORMER)

tokens = tokenizer(

df['first_Phrase'].tolist(),

max_length=LEN_SEQ,

truncation=True,

padding='max_length',

add_special_tokens=True,

return_tensors='tf'

)

base_model = TFAutoModel.from_pretrained(TRANSFORMER)

embedding = base_model.roberta(input_ids=tokens['input_ids'], attention_mask=tokens['attention_mask'])

embedding.trainable = False

# Define inputs

input_ids = Input(shape=(LEN_SEQ,), name='input_ids', dtype='int32')

input_mask = Input(shape=(LEN_SEQ,), name='input_mask', dtype='int32')

# Define hidden layers

layer = Dense(LEN_SEQ * 2, activation='relu')(embedding[1])

layer = Dense(LEN_SEQ, activation='relu')(layer)

# Define output

output = Dense(target.max() + 1, activation='softmax', name='output')(layer)

model = Model(inputs=[input_ids, input_mask], outputs=[output])

Full error traceback:

---------------------------------------------------------------------------

ValueError Traceback (most recent call last)

<ipython-input-80-9a6ccb1b4ca8> in <module>

10 output = Dense(target.max() + 1, activation='softmax', name='output')(layer)

11

---> 12 model = Model(inputs=[input_ids, input_mask], outputs=[output])

13

14 model.compile(

/usr/local/lib/python3.8/site-packages/tensorflow/python/training/tracking/base.py in _method_wrapper(self, *args, **kwargs)

528 self._self_setattr_tracking = False # pylint: disable=protected-access

529 try:

--> 530 result = method(self, *args, **kwargs)

531 finally:

532 self._self_setattr_tracking = previous_value # pylint: disable=protected-access

/usr/local/lib/python3.8/site-packages/keras/engine/functional.py in __init__(self, inputs, outputs, name, trainable, **kwargs)

107 generic_utils.validate_kwargs(kwargs, {})

108 super(Functional, self).__init__(name=name, trainable=trainable)

--> 109 self._init_graph_network(inputs, outputs)

110

111 @tf.__internal__.tracking.no_automatic_dependency_tracking

/usr/local/lib/python3.8/site-packages/tensorflow/python/training/tracking/base.py in _method_wrapper(self, *args, **kwargs)

528 self._self_setattr_tracking = False # pylint: disable=protected-access

529 try:

--> 530 result = method(self, *args, **kwargs)

531 finally:

532 self._self_setattr_tracking = previous_value # pylint: disable=protected-access

/usr/local/lib/python3.8/site-packages/keras/engine/functional.py in _init_graph_network(self, inputs, outputs)

144 base_layer_utils.create_keras_history(self._nested_outputs)

145

--> 146 self._validate_graph_inputs_and_outputs()

147

148 # A Network does not create weights of its own, thus it is already

/usr/local/lib/python3.8/site-packages/keras/engine/functional.py in _validate_graph_inputs_and_outputs(self)

719 if not hasattr(x, '_keras_history'):

720 cls_name = self.__class__.__name__

--> 721 raise ValueError('Output tensors of a ' + cls_name + ' model must be '

722 'the output of a TensorFlow `Layer` '

723 '(thus holding past layer metadata). Found: ' + str(x))

ValueError: Output tensors of a Functional model must be the output of a TensorFlow `Layer` (thus holding past layer metadata). Found: tf.Tensor(

[[0.18333092 0.18954797 0.22477032 0.21039596 0.1919548 ]

[0.18219706 0.1903447 0.2256843 0.20587942 0.19589448]

[0.18239683 0.1907878 0.22491893 0.20824413 0.19365236]

[0.18193942 0.1898969 0.2259874 0.20646562 0.1957107 ]

[0.18132213 0.1893883 0.22565623 0.21005587 0.1935775 ]

[0.18237704 0.18789911 0.22692119 0.20759228 0.1952104 ]

[0.18217668 0.18732095 0.22601548 0.21063867 0.19384828]

[0.1817196 0.18970788 0.22175607 0.21405536 0.19276106]

[0.18154216 0.18738106 0.22770867 0.2091297 0.19423842]

[0.1839993 0.19110405 0.2193769 0.2124882 0.19303149]

[0.18009834 0.19029345 0.22552258 0.21138497 0.19270067]

[0.18262982 0.18932794 0.22548872 0.20995376 0.19259974]

[0.18132062 0.1894746 0.22257458 0.21089785 0.19573237]

[0.18127124 0.18927224 0.2275273 0.2061446 0.19578464]

[0.18001163 0.1883382 0.22915907 0.20934238 0.19314879]

[0.18409619 0.19204247 0.22269006 0.20967877 0.19149245]

[0.18143429 0.18780865 0.22895294 0.21044146 0.1913626 ]

[0.18210162 0.18980804 0.22135185 0.21205473 0.1946838 ]

[0.18077913 0.18933856 0.22730026 0.2079047 0.19467732]

[0.18248595 0.19133545 0.2252994 0.20402898 0.1968502 ]

[0.18053354 0.18830904 0.22379933 0.21369977 0.19365832]

[0.18100418 0.1889128 0.22656825 0.21134934 0.19216539]

[0.18219638 0.18901002 0.22543809 0.20894748 0.194408 ]

[0.17991781 0.18693839 0.23250549 0.21227528 0.18836297]

[0.18322821 0.1881207 0.22497904 0.20976694 0.19390512]

[0.17972894 0.18888594 0.2251662 0.21268585 0.19353302]

[0.1822505 0.18769115 0.22729188 0.21127912 0.19148737]

[0.18432644 0.18830952 0.22477935 0.20987424 0.19271052]

[0.1801894 0.18920776 0.22684936 0.20734173 0.19641179]

[0.181594 0.1880084 0.22798598 0.20937674 0.19303486]

[0.18252885 0.19045824 0.22497422 0.207161 0.19487773]

[0.18196142 0.18878765 0.22479571 0.2105628 0.19389246]

[0.18600896 0.18686578 0.2283819 0.21188499 0.18685843]

[0.18056509 0.18865508 0.22694935 0.21080662 0.19302382]

[0.18446274 0.1887065 0.22405164 0.21271324 0.19006592]

[0.1812612 0.18995184 0.22384171 0.20790772 0.19703752]

[0.1861402 0.189157 0.2236694 0.21078445 0.19024895]

[0.18149142 0.18862149 0.2255336 0.20888737 0.19546609]

[0.18088317 0.1882689 0.22780944 0.20749897 0.19553955]

[0.1824722 0.18926203 0.22691077 0.2071967 0.1941583 ]

[0.18111941 0.18773855 0.22366299 0.21535842 0.19212064]

[0.18248987 0.18920848 0.22602491 0.20733926 0.19493747]

[0.18306294 0.19167435 0.22505572 0.21000686 0.19020009]

[0.18466519 0.1885763 0.22352514 0.21257839 0.19065501]

[0.18297954 0.18976018 0.2262897 0.20864752 0.19232307]

[0.18216778 0.18953851 0.22490299 0.21057723 0.1928135 ]

[0.18181367 0.19077264 0.2232015 0.21115994 0.1930523 ]

[0.18345618 0.18753015 0.22660162 0.20830849 0.1941036 ]

[0.18212378 0.18797131 0.2247642 0.21066691 0.19447377]

[0.18199605 0.19106121 0.22245005 0.21217921 0.19231346]

[0.18243583 0.18764758 0.22628336 0.21369886 0.18993443]

[0.18162242 0.18957089 0.22591078 0.20930369 0.19359224]

[0.18090473 0.18757755 0.22858356 0.20813066 0.19480348]

[0.17951688 0.18841572 0.22520997 0.21235934 0.19449812]

[0.1850496 0.18895829 0.22575855 0.20854111 0.1916925 ]

[0.18254244 0.18938984 0.22754729 0.20879866 0.19172177]

[0.1816532 0.18972425 0.22676478 0.20679341 0.19506434]

[0.18303266 0.19159187 0.22373216 0.20538329 0.19625996]

[0.18126963 0.18750906 0.2258774 0.21198079 0.1933631 ]

[0.18387978 0.18828613 0.22228165 0.21189795 0.19365448]

[0.1834729 0.18976368 0.22469373 0.20830937 0.19376035]

[0.18359789 0.18833868 0.22379532 0.21078889 0.19347927]

[0.18039297 0.18886234 0.22411437 0.2105467 0.19608359]

[0.17980678 0.18979622 0.2266618 0.20471531 0.19901991]

[0.18554561 0.19003332 0.22477089 0.21021138 0.18943883]

[0.18349187 0.18941568 0.22224301 0.21004184 0.19480757]

[0.18351436 0.19169463 0.22155108 0.21009424 0.19314568]

[0.18123321 0.18985166 0.22660086 0.21186577 0.19044854]

[0.18183744 0.192495 0.22091088 0.21275932 0.1919973 ]

[0.18028514 0.18943599 0.22416686 0.21241388 0.19369814]

[0.18061554 0.18873625 0.22677769 0.21073307 0.19313747]

[0.18186866 0.18851075 0.22588421 0.21183755 0.19189876]

[0.18126652 0.18949142 0.22501452 0.20897155 0.19525598]

[0.1835434 0.19079022 0.22333461 0.21146008 0.19087164]

[0.18269798 0.19171126 0.22150221 0.21224435 0.19184415]

[0.17996274 0.19000672 0.22470033 0.2105299 0.19480029]

[0.18345153 0.19032337 0.2239142 0.21167503 0.19063583]

[0.18224017 0.19025423 0.22567508 0.2087501 0.19308044]

[0.18233515 0.18966553 0.22833474 0.20635706 0.1933075 ]

[0.18210347 0.18650064 0.22770585 0.21101129 0.19267873]

[0.18199693 0.19086935 0.22255068 0.20988034 0.19470267]

[0.18119748 0.18983872 0.22518982 0.20845842 0.19531558]

[0.18367417 0.19071157 0.22310348 0.21277103 0.18973975]

[0.17965038 0.18936628 0.22479466 0.21279414 0.19339451]

[0.18141513 0.18989322 0.22380653 0.21031635 0.19456872]

[0.18295668 0.19067182 0.22385122 0.20624346 0.1962768 ]

[0.17981796 0.18981294 0.22544417 0.21043345 0.19449154]

[0.18068986 0.1897383 0.22433658 0.21027999 0.1949553 ]

[0.18146665 0.18844193 0.22996067 0.20703284 0.19309792]

[0.18278767 0.18972701 0.22451803 0.20893572 0.19403161]

[0.18077034 0.1892612 0.2236769 0.21081012 0.19548143]

[0.18254872 0.19220418 0.22300169 0.20895892 0.19328652]

[0.18032935 0.19029863 0.22319157 0.21000609 0.19617435]

[0.18328631 0.18907256 0.22911799 0.20782094 0.19070214]

[0.17863902 0.18771355 0.23066713 0.21065918 0.19232109]

[0.18178153 0.19022569 0.22538401 0.20857622 0.1940325 ]

[0.18072292 0.18907587 0.22616044 0.21096109 0.19307965]

[0.18215105 0.18966101 0.22436853 0.21200544 0.191814 ]

[0.18104836 0.18830387 0.22495148 0.21120267 0.19449359]

[0.18192047 0.18981694 0.22512193 0.2107065 0.19243418]], shape=(100, 5), dtype=float32)

Data example:

Any help is appreciated. I'm new to transformers, so please feel free to point out any extra considerations.

Answer:

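The error occurs because embedding was computed from the tokenizer's concrete tensors, so output is an eager tensor that is not connected to input_ids and input_mask. Instead, pass the Keras inputs as a list [input_ids, input_mask] to base_model, so that every tensor between the inputs and the output is produced by a layer call on symbolic inputs.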
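To make the difference concrete, here is a minimal sketch that uses a small stand-in encoder instead of RoBERTa, so it runs without downloading any weights. The encoder, pool, and head layers and the random token_ids are hypothetical and only illustrate the mechanism. The check on _keras_history mirrors the one visible in the traceback above.

```python
import numpy as np
import tensorflow as tf

LEN_SEQ = 64

# Hypothetical stand-in for the pretrained encoder (not RoBERTa).
encoder = tf.keras.layers.Embedding(input_dim=100, output_dim=8)
pool = tf.keras.layers.GlobalAveragePooling1D()
head = tf.keras.layers.Dense(5, activation='softmax')

# Concrete token ids, playing the role of the tokenizer output in the question.
token_ids = np.random.randint(0, 100, size=(4, LEN_SEQ))

# Calling layers on concrete data runs them immediately. The result holds
# values but no record of the layers that produced it.
eager_out = head(pool(encoder(token_ids)))

# Calling the same layers on a Keras Input builds a symbolic graph instead.
input_ids = tf.keras.layers.Input(shape=(LEN_SEQ,), name='input_ids', dtype='int32')
symbolic_out = head(pool(encoder(input_ids)))

print(hasattr(eager_out, '_keras_history'))     # the attribute the traceback checks
print(hasattr(symbolic_out, '_keras_history'))

# Only the symbolic output can be used to define a Functional model.
model = tf.keras.models.Model(inputs=input_ids, outputs=symbolic_out)
```

The code below applies the same idea to roberta-base.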

# !pip install transformers

from transformers import TFAutoModel

import tensorflow as tf

LEN_SEQ = 64

# Define inputs

input_ids = tf.keras.layers.Input(shape=(LEN_SEQ,), name='input_ids', dtype='int32')

input_mask = tf.keras.layers.Input(shape=(LEN_SEQ,), name='input_mask', dtype='int32')

base_model = TFAutoModel.from_pretrained('roberta-base')

for layer in base_model.layers:

    layer.trainable = False

# Check summary of tf_roberta_model

base_model.summary()

embedding = base_model([input_ids, input_mask])[1]

# Or

# embedding = base_model([input_ids, input_mask]).pooler_output

# Define hidden layers

layer = tf.keras.layers.Dense(LEN_SEQ * 2, activation='relu')(embedding)

layer = tf.keras.layers.Dense(LEN_SEQ, activation='relu')(layer)

# Define output

# Two classes in this example; for the question's data use target.max() + 1 units

output = tf.keras.layers.Dense(2, activation='softmax', name='output')(layer)

model = tf.keras.models.Model(inputs=[input_ids, input_mask], outputs=[output])

model.summary()

Output:

Model: "tf_roberta_model_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

roberta (TFRobertaMainLayer) multiple 124645632

=================================================================

Total params: 124,645,632

Trainable params: 0

Non-trainable params: 124,645,632

_________________________________________________________________

Model: "model"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_ids (InputLayer) [(None, 64)] 0 []

input_mask (InputLayer) [(None, 64)] 0 []

tf_roberta_model_2 (TFRobertaModel) TFBaseModelOutputWithPoolingAndCrossAttentions(last_hidden_state=(None, 64, 768), pooler_output=(None, 768), past_key_values=None, hidden_states=None, attentions=None, cross_attentions=None) 124645632 ['input_ids[0][0]', 'input_mask[0][0]']

dense (Dense) (None, 128) 98432 ['tf_roberta_model_2[0][1]']

dense_1 (Dense) (None, 64) 8256 ['dense[0][0]']

output (Dense) (None, 2) 130 ['dense_1[0][0]']

==================================================================================================

Total params: 124,752,450

Trainable params: 106,818

Non-trainable params: 124,645,632

__________________________________________________________________________________________________
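Separately, on the label construction in the question: the one-hot matrix needs target.max() + 1 columns because the class labels are 0-based. The sketch below repeats that construction on made-up labels (the target values here are hypothetical, standing in for df['first_Sentiment']) and compares it with an equivalent identity-matrix lookup.

```python
import numpy as np

# Hypothetical integer class labels standing in for df['first_Sentiment'].
target = np.array([0, 3, 1, 4, 2, 3])

# Labels start at 0, so the number of classes is the largest label plus one.
num_classes = int(target.max()) + 1

# Same construction as in the question: one row per sample,
# with a 1 in the column given by that sample's label.
labels = np.zeros((len(target), num_classes))
labels[np.arange(len(target)), target] = 1

# Equivalent alternative: pick rows of an identity matrix by label.
labels_alt = np.eye(num_classes)[target]
```

The number of units in the final Dense layer should match the number of columns in labels.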