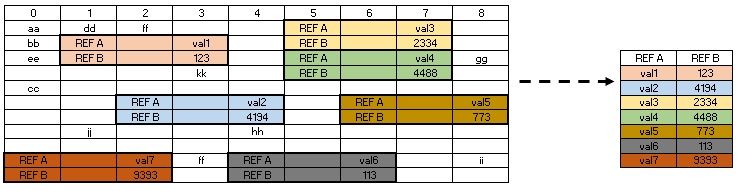

I'm trying to achieve this weird transformation :

The input is made of some random blocks including 6 cells (colored in the image above).

The order is not important in the output ! Here is the dataframe used :

import pandas as pd

from numpy import nan

dico = {0: ['aa', 'bb', 'ee', nan, 'cc', nan, nan, nan, nan, 'REF A', 'REF B'],

1: ['dd', 'REF A', 'REF B', nan, nan, nan, nan, 'jj', nan, nan, nan],

2: ['ff', nan, nan, nan, nan, 'REF A', 'REF B', nan, nan, 'val7', 9393],

3: [nan, 'val1', 123, 'kk', nan, nan, nan, nan, nan, 'ff', nan],

4: [nan, nan, nan, nan, nan, 'val2', 4194, 'hh', nan, 'REF A', 'REF B'],

5: ['REF A', 'REF B', 'REF A', 'REF B', nan, nan, nan, nan, nan, nan, nan],

6: [nan, nan, nan, nan, nan, 'REF A', 'REF B', nan, nan, 'val6', 113],

7: ['val3', 2334, 'val4', 4488, nan, nan, nan, nan, nan, nan, nan],

8: [nan, nan, 'gg', nan, nan, 'val5', 773, nan, nan, 'ii', nan]}

df = pd.DataFrame(dico)

Do you have some suggestions guys ? Thank you so much !

CodePudding user response:

Your excel file has colours and other metadata that show how the REF and val and numbers are connected. Let's take advantage of that with xlsx_cells from pyjanitor. Under the hood it uses openpyxl to generate a Pandas DataFrame with all the metadata that we can then use to reshape to the desired form:

# pip install pyjanitor

import pandas as pd

from janitor import xlsx_cells

path = 'Downloads/rubic.xlsx'

df = xlsx_cells(path, include_blank_cells=False, sheetnames=0, fill=True)

target = df.loc[df.fill.str['fgColor'].str['tint'].ne(0), ['value']]

cond = target.value.str.startswith('REF', na=False)

other = np.where(~cond, target.value, np.nan)

# fill the nulls above with the values below

target = target.assign(other = other).bfill()

target = target.loc[target.value != target.other]

index = target.groupby('value').cumcount()

(target

.assign(index = index)

.pivot(index = 'index', columns = 'value', values = 'other')

.rename_axis(index=None, columns=None)

)

REF A REF B

0 val3 2334

1 val1 123

2 val4 4488

3 val2 4194

4 val5 773

5 val7 9393

6 val6 113

BREAKDOWN:

- Here we read in the data - it puts every cell into its own row, along with some relevant metadata:

path = 'Downloads/rubic.xlsx'

df = xlsx_cells(path, include_blank_cells=False, sheetnames=0, fill=True)

df.head()

value internal_value coordinate row column data_type is_date number_format fill

0 0 0 A1 1 1 n False General {'patternType': None, 'fgColor': {'rgb': '0000...

1 1 1 B1 1 2 n False General {'patternType': None, 'fgColor': {'rgb': '0000...

2 2 2 C1 1 3 n False General {'patternType': None, 'fgColor': {'rgb': '0000...

3 3 3 D1 1 4 n False General {'patternType': None, 'fgColor': {'rgb': '0000...

4 4 4 E1 1 5 n False General {'patternType': None, 'fgColor': {'rgb': '0000...

We know the rows are connected with some colour; you can access the fill column to see the sub keys - internally fill is a dictionary per row:

df.fill[0]

{'patternType': None,

'fgColor': {'rgb': '00000000', 'type': 'rgb', 'tint': 0.0},

'bgColor': {'rgb': '00000000', 'type': 'rgb', 'tint': 0.0}}

The useful metadata available here is the tint - for the relevant rows that are coloured, the tint is not zero - let's use that to filter just for the rows we are interested in:

target = df.loc[df.fill.str['fgColor'].str['tint'].ne(0), ['value']]

target.head()

value

12 REF A

13 val3

15 REF A

16 val1

17 REF B

We also know from the data that after each REF comes either val or a number; we'll use that pattern to reshape accordingly:

cond = target.value.str.startswith('REF', na=False)

other = np.where(~cond, target.value, np.nan)

# fill the nulls above with the values below

target = target.assign(other = other).bfill()

target = target.loc[target.value != target.other]

target.head()

value other

12 REF A val3

15 REF A val1

17 REF B 2334

20 REF B 123

22 REF A val4

All that's left is to pivot - we create a unique column with groupby.cumcount before pivoting:

index = target.groupby('value').cumcount()

(target

.assign(index = index)

.pivot(index = 'index', columns = 'value', values = 'other')

.rename_axis(index=None, columns=None)

)

REF A REF B

0 val3 2334

1 val1 123

2 val4 4488

3 val2 4194

4 val5 773

5 val7 9393

6 val6 113

This is specific to the data you shared; for other forms, you might have to do more/less wrangling - and it is dependent on being able to identify the right pattern.

Another possible solution is with numpy; again this is specific to the data shared.

There is a pattern, REF A is paired with val*, while REF B is paired with the numbers. We also know that the numbers are immediately below the val. Let's use that pattern to generate the dataframe:

# get booleans for cells that start with `val`

# convert to numpy

# index within numpy space

bools = df.apply(lambda df: df.str.startswith("val")).to_numpy(na_value=False)

bools = bools.astype(np.bool8)

arr = df.to_numpy()

vals = arr[bools]

rows, cols = bools.nonzero()

# we know that the numbers are in the immediate next row

# directly underneath val

# hence the increment when indexing

numbers = arr[rows 1, cols]

pd.DataFrame({'REF A': vals, 'REF B' : numbers})

REF A REF B

0 val3 2334

1 val1 123

2 val4 4488

3 val2 4194

4 val5 773

5 val7 9393

6 val6 113

CodePudding user response:

I came up with this solution (that obviously may not be the best one) :

First of all, just to make sure that every block has the two values for REF A and REF B :

df.fillna("Missing Value", inplace=True)

Then,

# --- Masking any value different from the 4 cells block

m1 = ~(df[df.columns].isin(['REF A', 'REF B']))

m2 = ~(df[df.columns].isin(['REF A', 'REF B'])).shift(2, axis=1).fillna(False)

out1 = (

pd.DataFrame(df.mask(m1 & m2)

.stack()

.reset_index()

.groupby("level_1").agg(list)

.pop(0).tolist())

.stack()

)

#<class 'pandas.core.series.Series'>

# --- Getting rid of any row containing the expected columns names

out2 = (

out1.loc[~out1.str.startswith("REF").fillna(False)]

.reset_index(level=0)

.drop(columns="level_0")

.reset_index(drop=True)

)

#<class 'pandas.core.frame.DataFrame'>

# --- Making the final dataframe by joining every row with the next one

out3 = (

out2.rename(columns={0: "REF A"})

.join(out2.shift(-1).rename(columns={0: 'REF B'}))

.iloc[::2]

.sort_values(by="REF A") #optionally

.reset_index(drop=True)

)

#<class 'pandas.core.frame.DataFrame'>

Output :

print(out3)

REF A REF B

0 val1 123

1 val2 4194

2 val3 2334

3 val4 4488

4 val5 773

5 val6 113

6 val7 9393