I have realized that my multithreading program isn't doing what I think it's doing. The following is an MWE (minimal working example) of my strategy. In essence, I'm creating `nThreads` threads but only actually using one of them. Could somebody help me understand my mistake and how to fix it?

```python
import queue
import threading

NPerThread = 100
nThreads = 4


def worker(q: queue.Queue, oq: queue.Queue):
    while True:
        l = []
        threadIData = q.get(block=True)
        for i in range(threadIData["N"]):
            l.append(f"hello {i} from thread {threading.current_thread().name}")
        oq.put(l)
        q.task_done()


threadData = [{} for i in range(nThreads)]
inputQ = queue.Queue()
outputQ = queue.Queue()

for threadI in range(nThreads):
    threadData[threadI]["thread"] = threading.Thread(
        target=worker, args=(inputQ, outputQ),
        name=f"WorkerThread{threadI}",
        daemon=True,  # replaces the deprecated setDaemon(True)
    )
    threadData[threadI]["N"] = NPerThread
    threadData[threadI]["thread"].start()

for threadI in range(nThreads):
    # Each work item goes to whichever worker thread dequeues it first.
    inputQ.put(threadData[threadI])

inputQ.join()  # wait until every item has been marked task_done()

outData = [None] * nThreads
count = 0
while not outputQ.empty():
    outData[count] = outputQ.get()
    count += 1

for i in outData:
    assert len(i) == NPerThread
    print(len(i))
print(outData)
```

edit

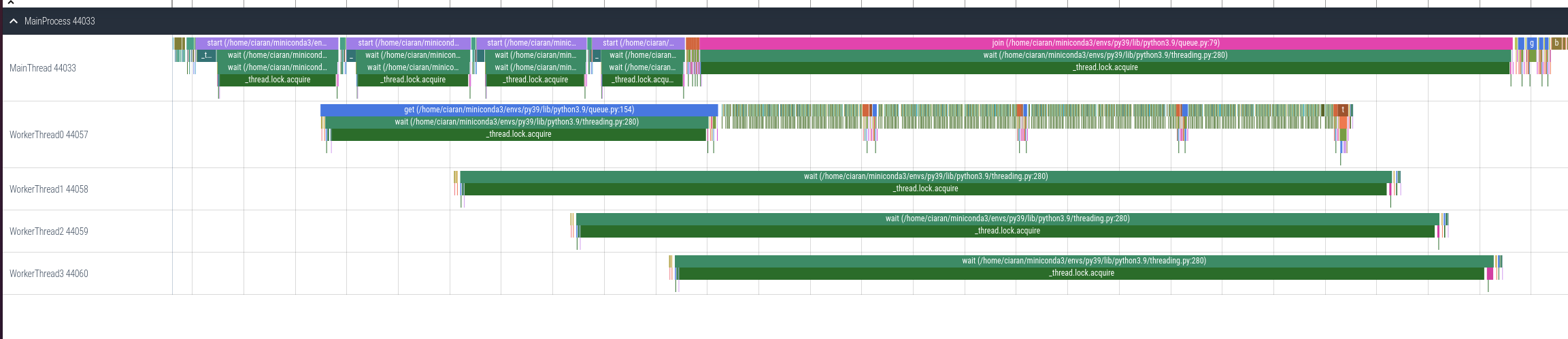

I only actually realised that I had made this mistake after profiling. Here's the output, for information:

Answer:

In your sample program, the worker function finishes each item so quickly that the first thread to wake up can dequeue and complete every item before the other threads get scheduled. If you add a `time.sleep(1)` call to it, you'll see other threads pick up some of the work.
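A minimal sketch of that experiment, trimmed down from the question's code: the worker sleeps for 0.2 s (rather than 1 s, to keep it quick) as a stand-in for real work and records which thread handled each item. The `run` helper and the `(item, thread_name)` tuples are illustrative additions, not part of the original program.

```python
import queue
import threading
import time


def worker(q: queue.Queue, oq: queue.Queue) -> None:
    while True:
        item = q.get()
        # Stand-in for real work. sleep() blocks this thread (releasing the GIL),
        # so the other idle workers can dequeue the remaining items meanwhile.
        time.sleep(0.2)
        oq.put((item, threading.current_thread().name))
        q.task_done()


def run(n_threads: int = 4, n_items: int = 4) -> list[tuple[int, str]]:
    in_q: queue.Queue = queue.Queue()
    out_q: queue.Queue = queue.Queue()
    for t in range(n_threads):
        # daemon=True so the endless worker loops don't keep the program alive.
        threading.Thread(target=worker, args=(in_q, out_q),
                         name=f"WorkerThread{t}", daemon=True).start()
    for item in range(n_items):
        in_q.put(item)
    in_q.join()  # block until every item has been marked task_done()
    return [out_q.get() for _ in range(n_items)]


results = run()
for item, name in sorted(results):
    print(f"item {item} handled by {name}")
```

With the sleep in place the items should be spread over several `WorkerThread` names; remove it and you will often see a single thread handle everything again.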

However, it's important to work out whether threads are the right choice for your real application, which presumably does actual work in the worker threads. As @jrbergen pointed out, because of CPython's global interpreter lock (GIL), only one thread can execute Python bytecode at a time. So if your worker functions run CPU-bound Python code (that is, they aren't doing blocking I/O or calling into a library that releases the GIL), threads won't give you a performance benefit. In that case you'd need to use processes instead.
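A minimal sketch of that trade-off, assuming the real work is pure-Python number crunching; the hypothetical `count_primes` function below stands in for it, and `timed` is an illustrative helper. It runs the same CPU-bound tasks through `concurrent.futures.ThreadPoolExecutor` and `ProcessPoolExecutor`. On a standard CPython build you would typically expect the thread pool to take roughly as long as running the tasks one after another, while the process pool can use several cores; actual timings depend on your machine, so none are claimed here.

```python
import time
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor


def count_primes(limit: int) -> int:
    """Count primes below `limit` by trial division: deliberately CPU-bound pure Python."""
    count = 0
    for n in range(2, limit):
        is_prime = True
        d = 2
        while d * d <= n:
            if n % d == 0:
                is_prime = False
                break
            d += 1
        if is_prime:
            count += 1
    return count


def timed(executor_cls, limits: list[int]) -> tuple[list[int], float]:
    """Run count_primes over `limits` with the given executor; return results and wall time."""
    start = time.perf_counter()
    with executor_cls(max_workers=len(limits)) as pool:
        results = list(pool.map(count_primes, limits))
    return results, time.perf_counter() - start


if __name__ == "__main__":
    # The guard matters for processes: with the "spawn" start method (the default
    # on Windows and macOS) each worker re-imports this module and must not
    # start its own pool.
    limits = [50_000] * 4
    for cls in (ThreadPoolExecutor, ProcessPoolExecutor):
        results, elapsed = timed(cls, limits)
        print(f"{cls.__name__}: {results} in {elapsed:.2f}s")
```

Because both executors share the same `map` interface, switching between threads and processes is a one-line change once the work is expressed as a plain function.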

I'll also note that you may want to use `concurrent.futures.ThreadPoolExecutor` or `multiprocessing.pool.ThreadPool` (also available as `multiprocessing.dummy.Pool`) for an out-of-the-box thread pool implementation, rather than building your own.
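For comparison, here is a sketch of the question's MWE rebuilt on `concurrent.futures.ThreadPoolExecutor`; the `work` function is a hypothetical refactoring of the original `worker`. The executor manages its own work queue, threads, and shutdown, so no `task_done()`/`join()` bookkeeping is needed. Note that, for the reason explained above, it does not guarantee the work is spread across threads: with work this fast, one thread may still process every item.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

NPerThread = 100
nThreads = 4


def work(n: int) -> list[str]:
    # Same per-item work as the original worker(), minus the queue plumbing.
    name = threading.current_thread().name
    return [f"hello {i} from thread {name}" for i in range(n)]


with ThreadPoolExecutor(max_workers=nThreads, thread_name_prefix="WorkerThread") as pool:
    # map() hands each input to whichever pooled thread is free and returns the
    # results in input order; leaving the `with` block waits for all of them.
    outData = list(pool.map(work, [NPerThread] * nThreads))

for chunk in outData:
    assert len(chunk) == NPerThread
print([len(chunk) for chunk in outData])
```

The equivalent with `multiprocessing.pool.ThreadPool` would use its own `map` method in much the same way.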