df.replace can replace pd.NaT with any value except np.nan or None

Note: I have to do multiple data transformations where I'd use fillna(''), which won't work on NaT, and I don't want chained replace calls because they are a bit expensive on massive DataFrames.

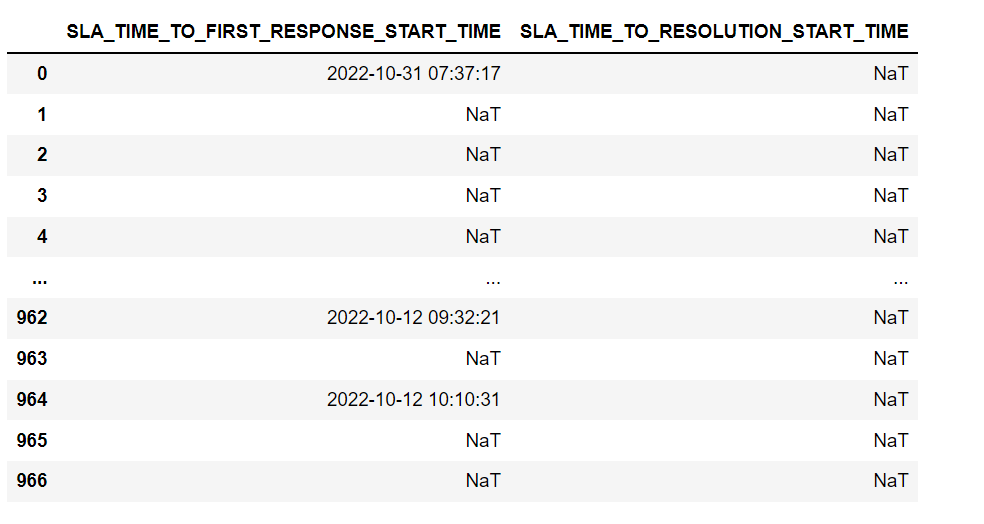

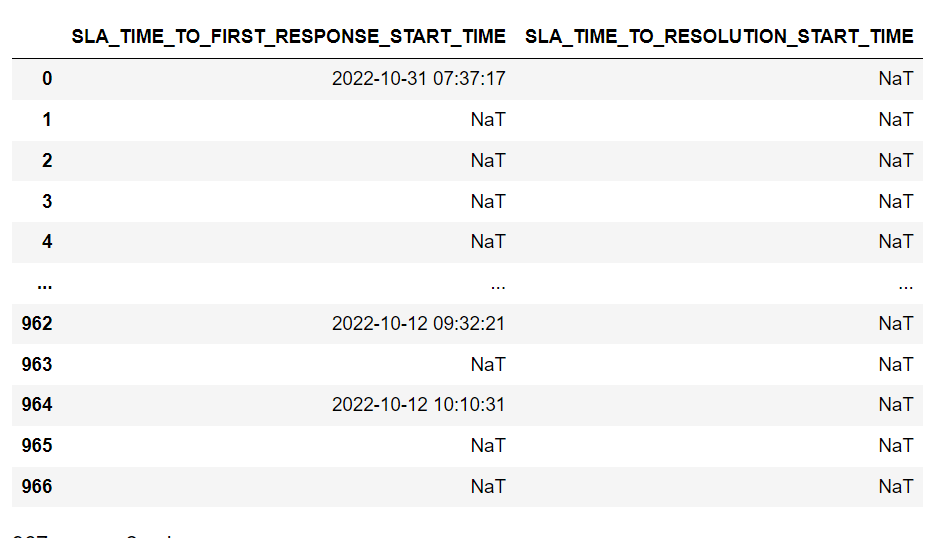

I have a DataFrame df with the following dtypes:

```text
ID                                       int64
TYPE                                     object
NAME                                     object
LOCATION_ID                              float64
COUNTRY_ID                               float64
REGION_ID                                float64
SLA_TIME_TO_FIRST_RESPONSE_START_TIME    datetime64[ns]
SLA_TIME_TO_FIRST_RESPONSE_STOP_TIME     datetime64[ns]
SLA_TIME_TO_RESOLUTION_START_TIME        datetime64[ns]
SLA_TIME_TO_RESOLUTION_STOP_TIME         datetime64[ns]
```

Replacing NaT with np.nan leaves the NaT values in place:

```python
df.replace(pd.NaT, np.nan)
```

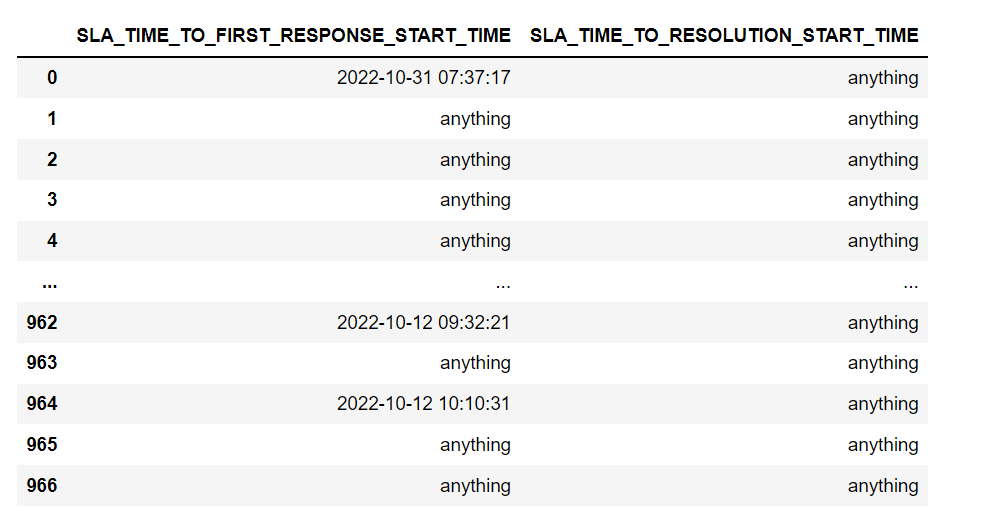

Replacing NaT with any other value does work:

```python
df.replace(pd.NaT, 'anything')
```
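A minimal, self-contained reproduction of the behaviour above, using made-up data and one column name from the dtype listing (a sketch, not the asker's actual data). In a datetime64 column, np.nan is itself a valid missing value and is stored as NaT, so replacing NaT with np.nan changes nothing, whereas a string cannot be stored as a datetime and forces the column to object dtype:

```python
import numpy as np
import pandas as pd

# Made-up sample: one real timestamp and one missing value (NaT)
s = pd.Series(
    pd.to_datetime(["2018-01-01", None]),
    name="SLA_TIME_TO_RESOLUTION_STOP_TIME",
)

# np.nan is a valid missing value for datetime64, so it is stored as NaT again
replaced_nan = s.replace(pd.NaT, np.nan)
print(replaced_nan.dtype, replaced_nan.iloc[1])

# A string cannot live in a datetime64 column, so pandas upcasts to object
replaced_str = s.replace(pd.NaT, "anything")
print(replaced_str.dtype, replaced_str.iloc[1])
```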

Answer:

pandas has some quirks with dtypes in general, and that is what markers such as pd.NaT (missing datetimes) and pd.NA (missing values in the nullable extension dtypes) are for.
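To make this concrete, here is a small sketch (with made-up values) showing which missing-value marker each kind of column actually stores:

```python
import numpy as np
import pandas as pd

# Sample values are made up; the second element of each Series is missing
float_missing = pd.Series([1.0, None]).iloc[1]                        # float64: stored as NaN
dt_missing = pd.Series(pd.to_datetime(["2018-01-01", None])).iloc[1]  # datetime64: stored as NaT
int_missing = pd.Series([1, None], dtype="Int64").iloc[1]             # nullable Int64: stored as pd.NA
obj_missing = pd.Series(["a", None], dtype=object).iloc[1]            # object: None is kept as-is

print(repr(float_missing), repr(dt_missing), repr(int_missing), repr(obj_missing))
```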

Here is an example of the issue with bool columns. In the DataFrame below, the column bool_type holds only booleans and gets dtype bool, while the column boot_with_none holds booleans and None and gets dtype object.

```python
import pandas as pd

df = pd.DataFrame({"bool_type": [True, False, True], "boot_with_none": [True, False, None]})

df.info()
#  #   Column          Non-Null Count  Dtype
# ---  ------          --------------  -----
#  0   bool_type       3 non-null      bool
#  1   boot_with_none  2 non-null      object

print(df)
#    bool_type boot_with_none
# 0       True           True
# 1      False          False
# 2       True           None
```

If we convert the boot_with_none column to bool, the None value is silently turned into False (because bool(None) is False):

```python
df["boot_with_none"] = df["boot_with_none"].astype(bool)

df.info()
#  #   Column          Non-Null Count  Dtype
# ---  ------          --------------  -----
#  0   bool_type       3 non-null      bool
#  1   boot_with_none  3 non-null      bool

print(df)
#    bool_type  boot_with_none
# 0       True            True
# 1      False           False
# 2       True           False
```
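Besides object dtype, pandas also offers the nullable "boolean" extension dtype, which stores the gap as pd.NA instead of turning it into False. A minimal sketch, rebuilding the same DataFrame as above:

```python
import pandas as pd

df = pd.DataFrame({"bool_type": [True, False, True], "boot_with_none": [True, False, None]})

# "boolean" (lowercase) is pandas' nullable extension dtype, unlike NumPy's bool
df["boot_with_none"] = df["boot_with_none"].astype("boolean")

print(df.dtypes)
print(df)
```

The trade-off is that downstream code has to handle pd.NA, whose truth value is ambiguous, rather than plain True/False.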

One way around this is to make the column object dtype, which can hold None. In the following code, one column is datetime64 and the other is object. After replace, the datetime64 column still holds pd.NaT, while the object column now holds None.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"original_type": [np.datetime64("2018-01-01"), np.datetime64("2018-01-02"), None]})
df["object_type"] = df["original_type"].astype(object)

df.info()
#  #   Column         Non-Null Count  Dtype
# ---  ------         --------------  -----
#  0   original_type  2 non-null      datetime64[ns]
#  1   object_type    2 non-null      object

df["original_type"] = df["original_type"].replace(pd.NaT, None)
df["object_type"] = df["object_type"].replace(pd.NaT, None)

print(df)
#   original_type          object_type
# 0    2018-01-01  2018-01-01 00:00:00
# 1    2018-01-02  2018-01-02 00:00:00
# 2           NaT                 None
```
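Applied to the question's goal (None instead of NaT across the whole DataFrame in a single pass), the object-dtype idea can be sketched as below. missing_to_none is a hypothetical helper, not a pandas function, and the sample data is made up (only the column names come from the question). Because some pandas versions try to re-infer a better dtype for object columns after methods such as replace, this sketch writes None through a NumPy object array instead and rebuilds the DataFrame with an explicit dtype=object:

```python
import pandas as pd


def missing_to_none(df: pd.DataFrame) -> pd.DataFrame:
    """Hypothetical helper (not part of pandas): return an object-dtype copy of
    df in which every missing value (NaT, NaN, None) is replaced by None."""
    # Box every cell as a Python object; copy=True so df itself is never modified
    values = df.to_numpy(dtype=object, copy=True)
    # Plain NumPy assignment: pandas gets no chance to re-infer the dtype
    values[pd.isna(values)] = None
    # dtype=object stops the constructor from converting None back to NaT/NaN
    return pd.DataFrame(values, index=df.index, columns=df.columns, dtype=object)


# Made-up sample using column names from the question
df = pd.DataFrame(
    {
        "ID": [1, 2, 3],
        "REGION_ID": [10.0, None, 30.0],
        "SLA_TIME_TO_RESOLUTION_STOP_TIME": pd.to_datetime(["2018-01-01", None, "2018-01-03"]),
    }
)

clean = missing_to_none(df)
print(clean)
```

Every column, including ID, ends up as object dtype, so this fits best as the last step before exporting, since numeric and datetime operations on object columns are slower.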

Edit

The second issue is with np.nan. Since type(np.nan) is float, it can only be stored in a float column (or an object column). The example below shows pandas automatically converting an int64 column to float64, turning all of its values into floats, after one value is set to np.nan. The object column is unaffected, because it can hold values of any type.

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"ints": [1, 2, 3]})
df["objects"] = df["ints"].astype(object)
df["ints_with_none"] = df["ints"]

# Assigning np.nan (a float) forces the int64 column to become float64
df.loc[2, "ints_with_none"] = np.nan

df.info()
#  #   Column          Non-Null Count  Dtype
# ---  ------          --------------  -----
#  0   ints            3 non-null      int64
#  1   objects         3 non-null      object
#  2   ints_with_none  2 non-null      float64

print(df)
#    ints objects  ints_with_none
# 0     1       1             1.0
# 1     2       2             2.0
# 2     3       3             NaN
```
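If the goal is to keep integers as integers, the nullable "Int64" extension dtype is an alternative to float or object: it stores the missing value as pd.NA and keeps the integer dtype. A sketch mirroring the example above (the nullable_ints column is added purely for illustration):

```python
import numpy as np
import pandas as pd

df = pd.DataFrame({"ints": [1, 2, 3]})
# "Int64" (capital I) is the nullable extension dtype, unlike NumPy's int64
df["nullable_ints"] = df["ints"].astype("Int64")

# np.nan is accepted as a missing value and stored as pd.NA; the dtype stays Int64
df.loc[2, "nullable_ints"] = np.nan

print(df.dtypes)
print(df)
```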

Answer:

fillna should work:

```python
df.fillna('anything')
```
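A small sketch with made-up data illustrating this on a single datetime64 column: when the fill value is something the column cannot hold, such as an arbitrary string, pandas fills the NaT and upcasts the column to object dtype:

```python
import pandas as pd

# Made-up sample: a datetime64 column with one NaT
s = pd.Series(
    pd.to_datetime(["2018-01-01", None]),
    name="SLA_TIME_TO_FIRST_RESPONSE_STOP_TIME",
)

filled = s.fillna("anything")  # NaT is filled; the column is upcast to object

print(filled.dtype, filled.tolist())
```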

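If it doesn't work, check whether the missing values really are pd.NaT objects, for example by calling type() on one of the affected cells (adjust the row and column positions to point at such a cell):

```python
type(df.iloc[1, 0])
```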
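One possible reason for fillna not working (an assumption, not something the question confirms) is that the values only look like NaT, for example text data in which missing dates were written out as the string "NaT". A sketch of how type() and isna() reveal this, and how pd.to_datetime turns such strings into real NaT values:

```python
import pandas as pd

# Hypothetical data: the *string* "NaT" rather than the pd.NaT missing-value marker
s = pd.Series(["2018-01-01", "NaT"], dtype=object)

cell = s.iloc[1]
print(type(cell), cell is pd.NaT)     # a str, not pd.NaT
print(s.isna().tolist())              # the string is not considered missing
print(s.fillna("anything").tolist())  # so fillna leaves it untouched

# pd.to_datetime parses the string "NaT" as a real NaT, which fillna can then fill
fixed = pd.to_datetime(s).fillna("anything")
print(fixed.tolist())
```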