Hi, I'm using the code below to list the files in an HDFS directory. Now I need to count the files returned by that listing, but the counting code below gives an error.

val hdfspath = "/data/fnl-ecomm/release/*/*/*/schema/*.json"

import org.apache.hadoop.fs.{FileSystem, Path}

val fs = org.apache.hadoop.fs.FileSystem.get(spark.sparkContext.hadoopConfiguration)

fs.globStatus(new Path(hdfspath)).filter(_.isFile).map(_.getPath).foreach(println)

var count: Int =0

if(status!=null)

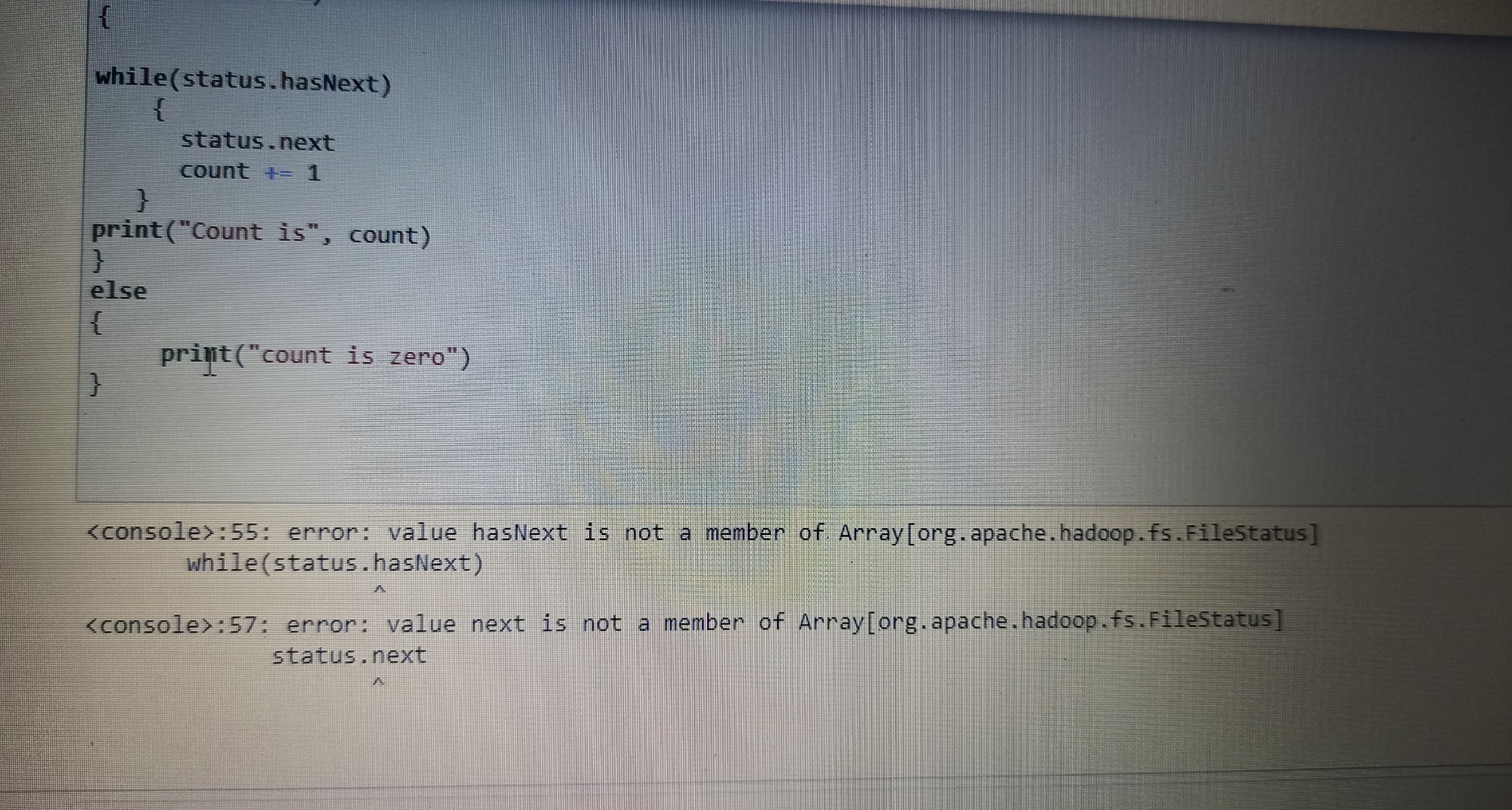

while(status.hasNext)

{

status.next

count += 1

}

print("Count is", count)

}

else

{

print("count is zero")

}

I have tried the code above, but it fails with an error.

CodePudding user response:

import org.apache.hadoop.fs.{FileSystem, Path}

val fs = FileSystem.get(spark.sparkContext.hadoopConfiguration)

// globStatus expands the wildcards in hdfspath; listStatus expects a concrete path
val filesCount = fs.globStatus(new Path(hdfspath)).count(_.isFile)
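
The loop in the question refers to `status`, which is never defined, and that is most likely where the error comes from. Since `globStatus` returns an `Array[FileStatus]`, the count can be taken directly from the array without an iterator. Below is a minimal sketch of a null-safe version. `HdfsFileCount` and `countFiles` are hypothetical names introduced here for illustration, and `globStatus` is used because the path in the question contains wildcards.

```scala
import org.apache.hadoop.fs.{FileSystem, Path}

// Hypothetical helper object (not part of Hadoop or Spark).
object HdfsFileCount {

  // Counts the regular files (not directories) that match a glob pattern.
  def countFiles(fs: FileSystem, pattern: String): Int =
    // globStatus may return null when a path without wildcards does not exist,
    // so the result is wrapped in Option before counting.
    Option(fs.globStatus(new Path(pattern)))
      .map(_.count(_.isFile))
      .getOrElse(0)
}

// Usage in spark-shell, where `spark` and `hdfspath` are already defined:
//   val fs = FileSystem.get(spark.sparkContext.hadoopConfiguration)
//   println(s"Count is ${HdfsFileCount.countFiles(fs, hdfspath)}")
```

Wrapping the result in `Option` replaces the explicit `if (status != null)` check from the question, and a pattern that matches nothing simply yields a count of 0.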