I came across this code for tuning the topology of a neural network. However, I am unsure how to instantiate the first layer without flattening the input.

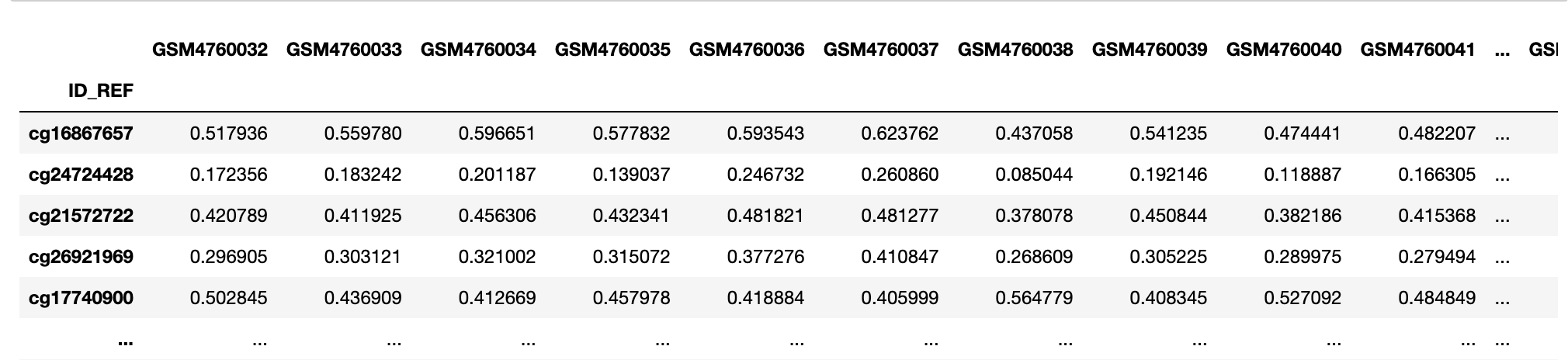

My input is like this:

With M features (the rows) and N samples (the columns).

How can I create the first (input) layer?

# Initialize sequential API and start building model.
model = keras.Sequential()
model.add(keras.layers.Flatten(input_shape=(28, 28)))

# Tune the number of hidden layers and units in each.
# Number of hidden layers: 1 - 5
# Number of units: 32 - 512 with stepsize of 32
for i in range(1, hp.Int("num_layers", 2, 6)):
    model.add(
        keras.layers.Dense(
            units=hp.Int("units_" + str(i), min_value=32, max_value=512, step=32),
            activation="relu",
        )
    )
    # Tune dropout layer with values from 0 - 0.3 with stepsize of 0.1.
    model.add(keras.layers.Dropout(hp.Float("dropout_" + str(i), 0, 0.3, step=0.1)))

# Add output layer.
model.add(keras.layers.Dense(units=10, activation="softmax"))

I know that Keras usually instantiates the first hidden layer along with the input layer, but I don't see how I can do that in this framework. Below is the code for instantiating the input and first hidden layer at once.

model.add(Dense(100, input_shape=(CpG_num,), kernel_initializer='normal', activation='relu'))

CodePudding user response:

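If you have multiple input features and want to set the input shape, suppose your data is a DataFrame with m rows (samples) and n columns (features). Keras treats the first axis as the batch, so only n goes into the input shape. If your data is stored the other way round, with features as rows, transpose it first.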

import numpy as np
import tensorflow as tf

m = 1000  # number of rows (samples)
n = 10    # number of columns (features)
no_of_units = 64  # units in the Dense layer

# m is not part of the shape because the first axis is taken as the batch.
_input = tf.keras.layers.Input(shape=(n,))
dense = tf.keras.layers.Dense(no_of_units)(_input)
model = tf.keras.Model(inputs=_input, outputs=dense)

# Quick check that the shapes work out.
x = np.random.randn(m, n)
print(model(x).shape)  # (1000, 64)
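Applied to the Sequential code in the question, the same idea could look roughly like the sketch below. It swaps the `Flatten` layer for a `keras.Input` that declares only the per-sample shape, and transposes the features-by-samples matrix so that samples sit on the first axis. The sizes, the random data, and the `build_model` helper with its `hidden_units` and `dropout` arguments are assumptions for illustration; in the tuner version those values would come from `hp.Int` and `hp.Float` instead.

```python
import numpy as np
from tensorflow import keras

# Hypothetical data laid out as in the question:
# M features (rows) x N samples (columns).
M, N = 20, 500
data = np.random.randn(M, N).astype("float32")

# Keras expects samples on the first axis, so transpose to (N, M).
x = data.T


def build_model(num_features, hidden_units=(64, 32), dropout=0.1, num_classes=10):
    """Dense network that takes a flat feature vector, so no Flatten layer is needed."""
    model = keras.Sequential()
    # Only the per-sample shape is declared; the batch axis is implicit.
    model.add(keras.Input(shape=(num_features,)))
    for units in hidden_units:
        model.add(keras.layers.Dense(units, activation="relu"))
        model.add(keras.layers.Dropout(dropout))
    model.add(keras.layers.Dense(num_classes, activation="softmax"))
    return model


model = build_model(num_features=M)
predictions = model.predict(x, verbose=0)
print(predictions.shape)  # one row of class probabilities per sample
```

Because the input is already a 1-D vector per sample, `Flatten` has nothing to do here; it is only needed when each sample is multi-dimensional, such as the 28x28 images in the original tutorial code.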