I need to build a terrain (relief) profile graph from coordinates. I have a CSV file with 12,000,000 lines, and looking up a single height in it takes about 2 to 2.5 seconds. I converted the CSV to Parquet, which saved some time: finding one height now takes about 1 to 1.7 seconds. However, I need to build a profile from 500 to 2000 values, so the total time is very long. In the future the CSV data may also grow, which will slow this process down even more. So my question is: is there a way to reduce the processing time? Code example:

import dask.dataframe as dk

import numpy as np

import pandas as pd

import time

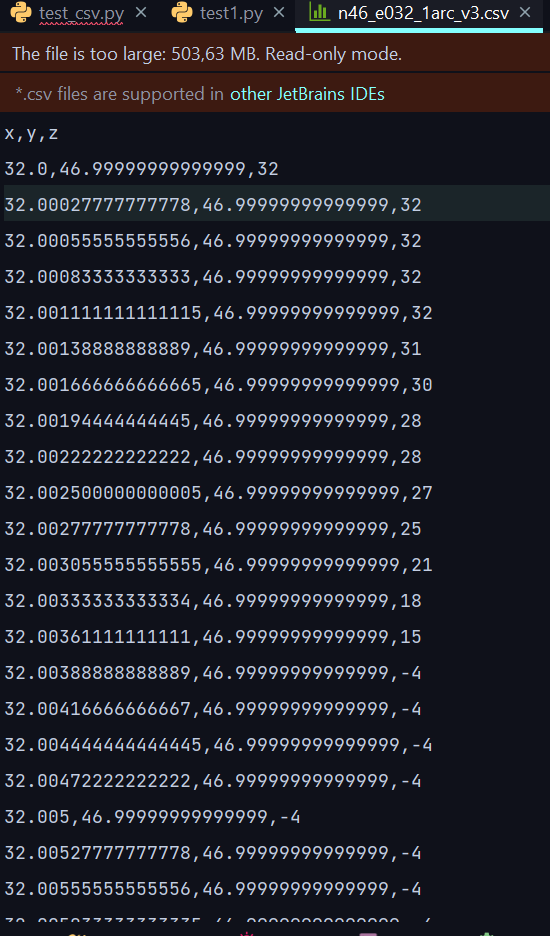

filename = 'n46_e032_1arc_v3.csv'

df = dk.read_csv(filename)

df.to_parquet('n46_e032_1arc_v3_parquet')

Latitude1y, Longitude1x = 46.6276, 32.5942

Latitude2y, Longitude2x = 46.6451, 32.6781

sec, steps, k = 0.00027778, 1, 11.73

Latitude, Longitude = [Latitude1y], [Longitude1x]

sin, cos = Latitude2y - Latitude1y, Longitude2x - Longitude1x

y, x = Latitude1y, Longitude1x

while Latitude[-1] < Latitude2y and Longitude[-1] < Longitude2x:

y, x, steps = y sec * k * sin, x sec * k * cos, steps 1

Latitude.append(y)

Longitude.append(x)

time_start = time.time()

long, elevation_data = [], []

df2 = dk.read_parquet('n46_e032_1arc_v3_parquet')

for i in range(steps 1):

elevation_line = df2[(Longitude[i] <= df2['x']) & (df2['x'] <= Longitude[i] sec) &

(Latitude[i] <= df2['y']) & (df2['y'] <= Latitude[i] sec)].compute()

elevation = np.asarray(elevation_line.z.tolist())

if elevation[-1] < 0:

elevation_data.append(0)

else:

elevation_data.append(elevation[-1])

long.append(30 * i)

plt.bar(long, elevation_data, width = 30)

plt.show()

print(time.time() - time_start)
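In the while loop above, `sin` and `cos` are the raw coordinate differences, so every step advances by the fraction `sec * k` of the whole segment. Under that reading, the same sample points (up to floating-point rounding) can be generated in one vectorized step instead of a Python loop. A minimal sketch using the question's endpoints and constants; the names `f`, `n`, `t`, `lats` and `lons` are illustrative only:

```python
import numpy as np

# Endpoints and constants taken from the question
lat1, lon1 = 46.6276, 32.5942
lat2, lon2 = 46.6451, 32.6781
sec, k = 0.00027778, 11.73

f = sec * k                  # fraction of the segment covered by one step
n = int(np.ceil(1 / f)) + 1  # j = 0 .. ceil(1/f); the last point overshoots slightly, like the loop
t = np.arange(n) * f         # position along the segment, from 0 to just over 1
lats = lat1 + t * (lat2 - lat1)
lons = lon1 + t * (lon2 - lon1)
```

Unlike the while loop, this also works when the second point lies south or west of the first, and the resulting arrays can be passed to a batched lookup in one call.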

Answer:

Here's one way to solve this problem using KD trees. A KD tree is a data structure for doing fast nearest-neighbor searches.

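```python
import dask.dataframe as dk
import scipy.spatial

# Load the data into memory once as a pandas DataFrame
df = dk.read_parquet('n46_e032_1arc_v3_parquet').compute()

# Build the tree once; each nearest-neighbor query is then fast
tree = scipy.spatial.KDTree(df[['x', 'y']].values)
elevations = df['z'].values

long, elevation_data = [], []

for i in range(steps):
    lon, lat = Longitude[i], Latitude[i]
    dist, idx = tree.query([lon, lat])  # index of the nearest (x, y) point
    elevation = elevations[idx]
    if elevation < 0:
        elevation = 0
    elevation_data.append(elevation)
    long.append(30 * i)
```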
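A possible refinement of the loop above (not part of the original answer): `KDTree.query` also accepts an array of points, so the whole profile can be looked up in one call and the negative values clamped with NumPy. The sketch below uses a small synthetic grid as a hypothetical stand-in for the real file, since the actual data is not available here:

```python
import numpy as np
from scipy.spatial import KDTree

# Hypothetical stand-in for the real data: a 300 x 400 grid at 1-arc-second
# spacing with made-up elevations (the first 10 are negative on purpose).
sec = 0.00027778
xs = 32.5 + sec * np.arange(400)
ys = 46.6 + sec * np.arange(300)
gx, gy = np.meshgrid(xs, ys)  # shape (300, 400); row = latitude, column = longitude
x, y = gx.ravel(), gy.ravel()
z = np.arange(x.size, dtype=float) - 10

# Build the tree once (the expensive part), then reuse it for every profile
tree = KDTree(np.column_stack([x, y]))

# Profile points (lon, lat), slightly off-grid; in practice these would be
# the Longitude/Latitude lists from the question
lons = xs[[0, 5, 100]] + 0.1 * sec
lats = ys[[0, 2, 50]] - 0.1 * sec

# One vectorized query for all points instead of a Python loop
dist, idx = tree.query(np.column_stack([lons, lats]))
elevation_data = np.clip(z[idx], 0, None)  # negative heights -> 0
```

Building the tree over millions of points still takes time, so it is worth doing once and reusing for every profile.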

Note: if you can make assumptions about the data, like "all of the points in the CSV are equally spaced," faster algorithms are possible.
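For example, if the points form a complete regular grid with a known origin and spacing, the nearest grid cell can be computed with index arithmetic, which is O(1) per point and needs no search structure at all. A sketch under those assumptions; the origin `x0, y0`, the grid size, the array `grid` and the helper `profile` are all hypothetical:

```python
import numpy as np

# Assumption: elevations form a complete regular grid with origin (x0, y0)
# and spacing `sec`, stored as a 2D array grid[row, col], where row indexes
# latitude (y) and col indexes longitude (x). Values here are made up.
sec = 0.00027778
x0, y0 = 32.5, 46.6
n_rows, n_cols = 300, 400
grid = np.arange(n_rows * n_cols, dtype=float).reshape(n_rows, n_cols) - 10


def profile(lats, lons):
    """Nearest-grid-point elevations via index arithmetic (hypothetical helper)."""
    rows = np.rint((np.asarray(lats) - y0) / sec).astype(int)
    cols = np.rint((np.asarray(lons) - x0) / sec).astype(int)
    # Clamp to the grid so points just outside the tile do not raise IndexError
    rows = np.clip(rows, 0, n_rows - 1)
    cols = np.clip(cols, 0, n_cols - 1)
    return np.maximum(grid[rows, cols], 0)  # negative elevations -> 0, as in the question
```

Note that clamping silently maps out-of-tile points to the edge; raising an error instead may be preferable if that should never happen.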

Answer:

It looks like your data might be on a regular grid. If (and only if) every combination of x and y exists in your data, then it probably makes sense to turn it into a labeled 2D array of points, after which querying the correct position will be very fast.

For this, I'll use xarray, which is essentially pandas for N-dimensional data, and integrates well with dask:

```python
import dask.dataframe as dk
import xarray as xr

# bring the dataframe into memory
df = dk.read_parquet('n46_e032_1arc_v3_parquet').compute()
da = df.set_index(["y", "x"]).z.to_xarray()

# now you can query the nearest points:
desired_lats = xr.DataArray([46.6276, 46.6451], dims=["point"])
desired_lons = xr.DataArray([32.5942, 32.6781], dims=["point"])
subset = da.sel(y=desired_lats, x=desired_lons, method="nearest")

# if you'd like, you can return to pandas:
subset_s = subset.to_series()

# you could do this only once, and save the reshaped array as a zarr store
# (requires the zarr package):
ds = da.to_dataset(name="elevation")
ds.to_zarr("n46_e032_1arc_v3.zarr")
```
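If adding xarray and zarr as dependencies is not an option, the same reshape-then-nearest idea can be sketched with NumPy alone. This assumes, as the answer does, that every (x, y) combination occurs exactly once; `to_grid` and `nearest_index` are hypothetical helper names, and the small shuffled table only stands in for the real data:

```python
import numpy as np


def to_grid(x, y, z):
    """Pivot long-format (x, y, z) columns into a 2D array grid[row, col].

    Assumes every (x, y) combination occurs exactly once (a complete grid).
    """
    xs, col = np.unique(x, return_inverse=True)  # sorted unique longitudes
    ys, row = np.unique(y, return_inverse=True)  # sorted unique latitudes
    grid = np.full((ys.size, xs.size), np.nan)
    grid[row, col] = z
    return xs, ys, grid


def nearest_index(axis, values):
    """Index of the nearest entry of the sorted 1D `axis` (size >= 2) for each value."""
    values = np.asarray(values)
    i = np.clip(np.searchsorted(axis, values), 1, axis.size - 1)
    left, right = axis[i - 1], axis[i]
    return np.where(values - left <= right - values, i - 1, i)


# Usage on a tiny 3 x 4 grid whose rows arrive in random order
sec = 0.00027778
gx, gy = np.meshgrid(32.5 + sec * np.arange(4), 46.6 + sec * np.arange(3))
z_true = np.arange(12, dtype=float).reshape(3, 4)
order = np.random.default_rng(0).permutation(12)
x, y, z = gx.ravel()[order], gy.ravel()[order], z_true.ravel()[order]

xs, ys, grid = to_grid(x, y, z)
rows = nearest_index(ys, [46.6 + 1.1 * sec])
cols = nearest_index(xs, [32.5 + 2.9 * sec])
print(grid[rows, cols])  # -> [7.]
```

Like the xarray version, the pivot only needs to run once; the resulting `xs`, `ys` and `grid` arrays could then be saved (for instance with `np.save`) and reloaded for every profile.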