I have a dataset which has empty cells, and also cells which contain only spaces (one or more). I want to convert all these cells into Null.

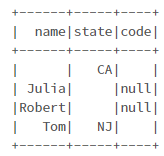

Sample dataset:

data = [("", "CA", " "), ("Julia", "", None),("Robert", " ", None), ("Tom", "NJ", " ")]

df = spark.createDataFrame(data,["name", "state", "code"])

df.show()

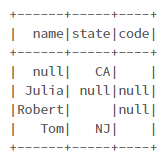

I can convert empty cells by:

df = df.select( [F.when(F.col(c)=="", None).otherwise(F.col(c)).alias(c) for c in df.columns] )

df.show()

And cells with one space:

df = df.select( [F.when(F.col(c)==" ", None).otherwise(F.col(c)).alias(c) for c in df.columns] )

df.show()

But, I don't want to repeat the above codes for cells with 2, 3, or more spaces.

Is there any way I can convert those cells at once?

CodePudding user response:

You can additional use trim or regex_replace the column before you apply when-otherwise

Trim

df = df.select( [F.when(F.trim(F.col(c))=="", None).otherwise(F.col(c)).alias(c) for c in df.columns] )

Regex Replace

df = df.select( [F.when(F.regexp_replace(col(c), "^\s $", ""))=="", None).otherwise(F.col(c)).alias(c) for c in df.columns] )

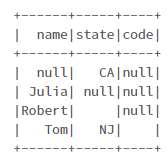

CodePudding user response:

you could use trim to remove the spaces which leaves a blank and then check for blanks in all cells.

see example below

data_sdf. \

selectExpr(*['if(trim({0}) = "", null, {0}) as {0}'.format(c) for c in data_sdf.columns]). \

show()

# ------ ----- ----

# | name|state|code|

# ------ ----- ----

# | null| CA|null|

# | Julia| null|null|

# |Robert| null|null|

# | Tom| NJ|null|

# ------ ----- ----

the list comprehension would result in if expression statements for every column

['if(trim({0}) = "", null, {0}) as {0}'.format(c) for c in data_sdf.columns]

# ['if(trim(name) = "", null, name) as name',

# 'if(trim(state) = "", null, state) as state',

# 'if(trim(code) = "", null, code) as code']