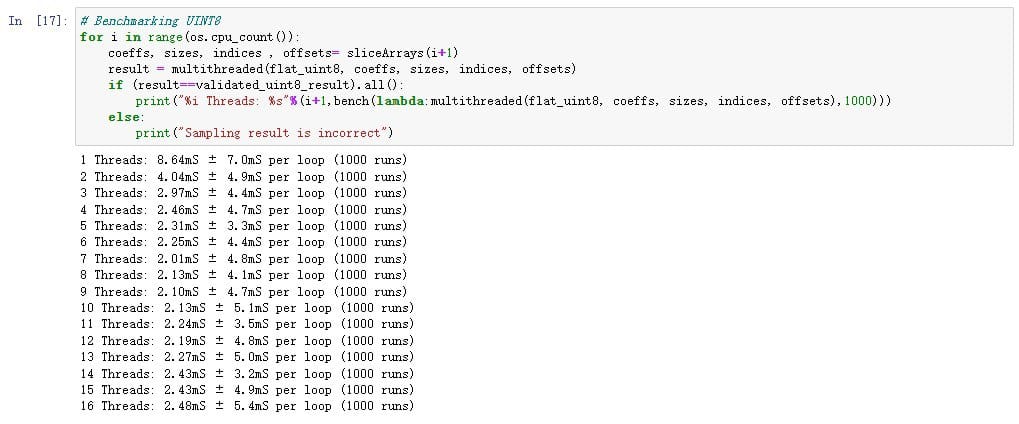

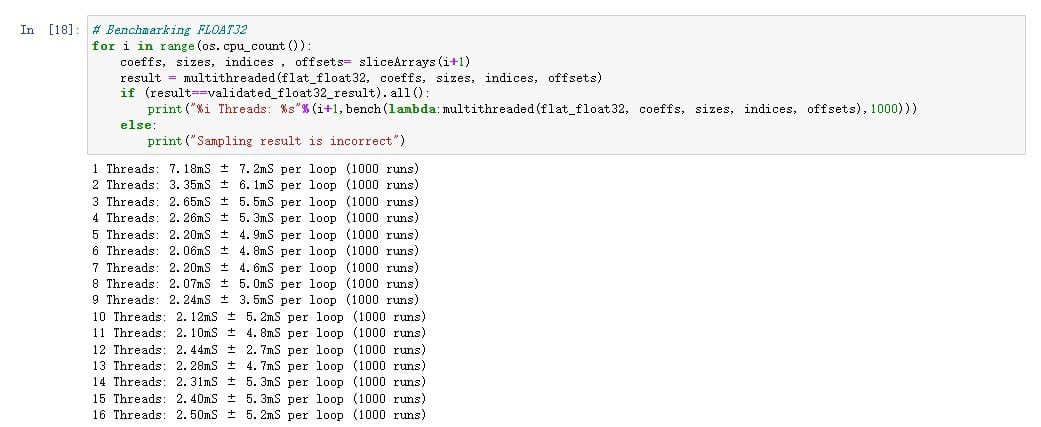

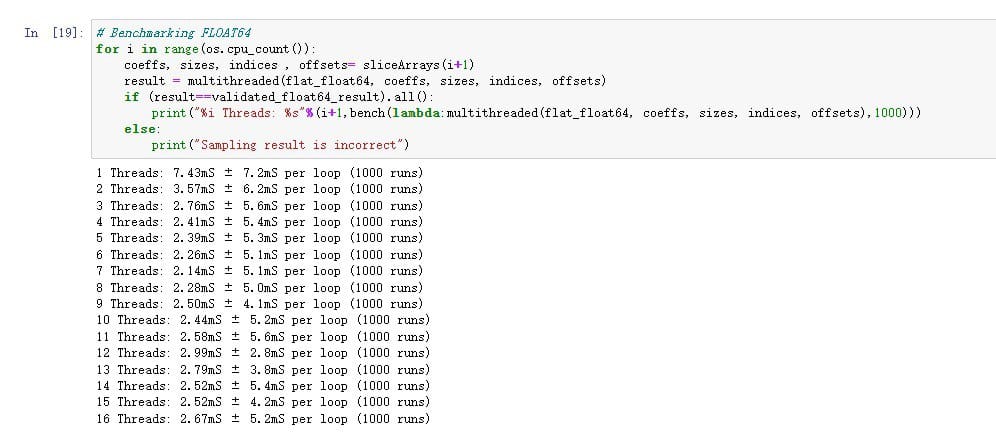

I'm working on an image processing pipeline in Python, with the main computation written in Cython for speed. Early benchmarks showed a memory bottleneck: the code did not scale at all across multiple threads.

I revised the algorithm to reduce the bandwidth it needs, and it now scales to 2 cores (4 threads with hyperthreading), but it is still bottlenecked by memory bandwidth. You can find the different versions of the algorithm here if you are curious:

All of these benchmarks were run without any manual memory management. Since the overall size of the data is relatively small (120 MB), I assume Python puts it all on a single memory module (all of the systems have dual-channel memory). I'm not sure whether it is possible to tell Python to split the data across different physical memory modules so that the algorithm can take advantage of the dual-channel memory. I tried googling ways to do this in C, but that wasn't successful either. Is memory placement managed automatically by the OS, or is it possible to control it?

P.S.: before you comment, I have made sure to split the inputs as evenly as possible. Also, the sampling algorithm is extremely simple (multiply and accumulate), so having a memory bottleneck is not an absurd concept (it is actually pretty common in image processing algorithms).

CodePudding user response:

The OS maps the program's virtual address space onto physical storage (RAM modules, the pagefile, etc.), and this is transparent to Python or any other programming language. In dual-channel mode the memory controller interleaves physical addresses across both channels, so all of your systems were already using both sticks for reads and writes.
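Since placement cannot be steered from Python, a more practical check is how close the pipeline gets to the bandwidth the machine actually delivers. The following is a rough sketch, not a rigorous benchmark: the function name `estimate_copy_bandwidth` and its parameters are made up for illustration, and the 120 MB default only mirrors the data size mentioned in the question.

```python
import time


def estimate_copy_bandwidth(size_bytes=120 * 1024 * 1024, repeats=5):
    """Return the best observed copy rate in GB/s for a buffer of size_bytes.

    Hypothetical helper: a single-threaded copy only gives a rough lower
    bound on what the memory system can sustain.
    """
    src = bytearray(size_bytes)
    dst = bytearray(size_bytes)
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src  # same-size slice assignment; CPython does this as a bulk copy
        elapsed = time.perf_counter() - start
        best = min(best, elapsed)
    best = max(best, 1e-9)  # guard against a zero reading on a coarse timer
    # Each copy reads size_bytes and writes size_bytes.
    return 2 * size_bytes / best / 1e9
```

Calling `estimate_copy_bandwidth()` and comparing the result with the bytes per second your pipeline moves gives a first indication of whether it is really running at the memory limit.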

The fact that float64 and float32 perform the same suggests that each core's cache is almost never used, so consider restructuring your algorithm to be more cache-friendly.

While this may seem hard for a loop that only computes a multiplication, you can group computations to reduce the number of times you access memory.
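As a minimal sketch of what grouping can look like, assume (this is not taken from the question's code) that the kernel is a weighted sum of several input images. The plain-Python loops below only show the access pattern; in Cython the same structure would run over typed memoryviews. The names `accumulate_unfused`, `accumulate_tiled`, and the `tile` size are hypothetical.

```python
def accumulate_unfused(images, weights):
    """One full pass per image: the whole accumulator is re-read and
    re-written for every input, so it streams through memory K times."""
    n = len(images[0])
    acc = [0.0] * n
    for img, w in zip(images, weights):
        for i in range(n):
            acc[i] += w * img[i]
    return acc


def accumulate_tiled(images, weights, tile=4096):
    """Same arithmetic, but processed one tile of pixels at a time.

    All inputs are applied to a tile before moving on, so the accumulator
    tile can stay in cache while it is being updated.
    """
    n = len(images[0])
    acc = [0.0] * n
    for start in range(0, n, tile):
        stop = min(start + tile, n)
        for img, w in zip(images, weights):
            for i in range(start, stop):
                acc[i] += w * img[i]
    return acc
```

Both functions add the terms for each pixel in the same order, so they return identical results. The tile size is an assumption to tune so that one accumulator tile plus one input tile fit comfortably in a core's cache.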

Edit: Also consider shifting the work to a GPU or TPU. Dedicated GPUs are very good at convolutions and have much faster memory.