ONNX is a model representation format for deep learning. It lets AI developers convert models between frameworks, which makes models more portable. PyTorch, Caffe2, Apache MXNet, Microsoft Cognitive Toolkit, and Baidu PaddlePaddle currently support the ONNX format, and the OpenVINO Model Optimizer can convert models in ONNX format to IR (intermediate representation) files.

The ONNX models officially supported by OpenVINO currently include: bert_large, bvlc_alexnet, bvlc_googlenet, bvlc_reference_caffenet, bvlc_reference_rcnn_ilsvrc13, inception_v1, inception_v2, resnet50, squeezenet, densenet121, emotion_ferplus, mnist, shufflenet, VGG19, and zfnet512. Note that upgraded versions of these models are not supported.

Starting with the 2019 R04 release, OpenVINO supports the public PyTorch models. The list of supported models is as follows:

Converting a PyTorch ONNX model to OpenVINO IR

This example walks through the complete process: export a public torchvision model to ONNX, convert the ONNX file to IR, and run it with OpenVINO. We use resnet18 as the example.

Download the model and convert it to ONNX format

To download and use the pretrained torchvision models, first install PyTorch. The following code then downloads the supported models:

    import torchvision.models as models

    resnet18 = models.resnet18(pretrained=True)
    alexnet = models.alexnet(pretrained=True)
    squeezenet = models.squeezenet1_0(pretrained=True)
    vgg16 = models.vgg16(pretrained=True)
    densenet = models.densenet161(pretrained=True)
    inception = models.inception_v3(pretrained=True)
    googlenet = models.googlenet(pretrained=True)
    shufflenet = models.shufflenet_v2_x1_0(pretrained=True)
    mobilenet = models.mobilenet_v2(pretrained=True)
    resnext50_32x4d = models.resnext50_32x4d(pretrained=True)
    wide_resnet50_2 = models.wide_resnet50_2(pretrained=True)
    mnasnet = models.mnasnet1_0(pretrained=True)

Here we only need to run resnet18 = models.resnet18(pretrained=True) to download the resnet18 model. The model's input requirements are as follows:

Input size: 224 x 224

RGB three-channel image

mean = [0.485, 0.456, 0.406]

std = [0.229, 0.224, 0.225]
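To make the arithmetic behind these values concrete, here is a minimal pure-Python sketch of the normalization they imply: scale each 8-bit channel to [0, 1], subtract the channel mean, and divide by the channel standard deviation. The helpers `normalize_pixel` and `hwc_to_chw` are illustrative names, not part of torchvision or OpenVINO, and real code would normally vectorize this with NumPy.

```python
MEAN = (0.485, 0.456, 0.406)  # per-channel mean, in R, G, B order
STD = (0.229, 0.224, 0.225)   # per-channel standard deviation, in R, G, B order


def normalize_pixel(rgb):
    """Scale an 8-bit RGB pixel to [0, 1], then standardize each channel."""
    return tuple((value / 255.0 - m) / s for value, m, s in zip(rgb, MEAN, STD))


def hwc_to_chw(image):
    """Rearrange a nested list from height x width x channel to channel x height x width."""
    channels = len(image[0][0])
    return [[[pixel[c] for pixel in row] for row in image] for c in range(channels)]


if __name__ == "__main__":
    # A tiny 1 x 2 "image" holding one white and one black pixel.
    image = [[normalize_pixel((255, 255, 255)), normalize_pixel((0, 0, 0))]]
    chw = hwc_to_chw(image)
    # Print the resulting layout as channels, height, width.
    print(len(chw), len(chw[0]), len(chw[0][0]))
```

The layout change matters because the network expects channel-first input, while image libraries usually return channel-last arrays.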

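The code to download the model and export it to ONNX is as follows:

    import torch
    import torchvision

    # Load the pretrained model and switch it to inference mode
    model = torchvision.models.resnet18(pretrained=True).eval()
    # The dummy input fixes the input shape recorded in the ONNX graph
    dummy_input = torch.randn((1, 3, 224, 224))
    torch.onnx.export(model, dummy_input, "resnet18.onnx")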

Convert to IR format

Open cmd and change to the following directory of the OpenVINO installation:

    deployment_tools\model_optimizer

In that directory, run the following command:

    python mo_onnx.py --input_model D:\python\pytorch_tutorial\resnet18.onnx

You can see that the resnet18 model has been converted successfully!
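If you prefer to drive the conversion from a script, the command line can be assembled programmatically. The following is a minimal sketch rather than anything shipped with OpenVINO: `build_mo_command` and `expected_ir_files` are hypothetical helpers, the path is a placeholder, and both the `--output_dir` flag and the naming of the IR files after the input model are assumptions to verify with `python mo_onnx.py --help` for your release. The sketch only builds the argument list and does not run the converter.

```python
import sys
from pathlib import PureWindowsPath


def build_mo_command(onnx_path, output_dir=None, python=sys.executable):
    """Return the argument list for converting an ONNX file with mo_onnx.py."""
    command = [python, "mo_onnx.py", "--input_model", str(onnx_path)]
    if output_dir is not None:
        # Assumption: this Model Optimizer release accepts --output_dir.
        command += ["--output_dir", str(output_dir)]
    return command


def expected_ir_files(onnx_path):
    """Assumption: the IR files are named after the input model's base name."""
    stem = PureWindowsPath(onnx_path).stem
    return stem + ".xml", stem + ".bin"


if __name__ == "__main__":
    onnx_path = r"D:\python\pytorch_tutorial\resnet18.onnx"  # placeholder path
    print(" ".join(build_mo_command(onnx_path, python="python")))
    print(expected_ir_files(onnx_path))
```

The returned list can be passed to `subprocess.run(command, cwd=..., check=True)`, with `cwd` set to the model_optimizer directory, so that a failed conversion raises an exception instead of passing silently.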

Calling the OpenVINO SDK

With the converted IR model, you can run accelerated inference using the Python SDK of OpenVINO202R3. The complete code is as follows:

    from __future__ import print_function
    import cv2
    import numpy as np
    import logging as log
    from openvino.inference_engine import IECore

    # One class name per line, in the order of the model's output indices
    with open('imagenet_classes.txt') as f:
        labels = [line.strip() for line in f.readlines()]


    def image_classification():
        model_xml = "resnet18.xml"
        model_bin = "resnet18.bin"

        # Plugin initialization for specified device and load extensions library if specified
        log.info("Creating Inference Engine")
        ie = IECore()

        # Read IR
        log.info("Loading network files:\n\t{}\n\t{}".format(model_xml, model_bin))
        net = ie.read_network(model=model_xml, weights=model_bin)

        log.info("Preparing input blobs")
        input_blob = next(iter(net.inputs))
        out_blob = next(iter(net.outputs))

        # Read and pre-process input images
        n, c, h, w = net.inputs[input_blob].shape
        images = np.ndarray(shape=(n, c, h, w))
        src = cv2.imread("D:/images/messi.jpg")
        image = cv2.resize(src, (w, h))
        # cv2.imread returns BGR, but the model expects RGB
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        image = np.float32(image) / 255.0
        image[:, :, :] -= (np.float32(0.485), np.float32(0.456), np.float32(0.406))
        image[:, :, :] /= (np.float32(0.229), np.float32(0.224), np.float32(0.225))
        # HWC -> CHW
        image = image.transpose((2, 0, 1))

        # Loading model to the plugin
        log.info("Loading model to the plugin")
        exec_net = ie.load_network(network=net, device_name="CPU")

        # Start sync inference
        log.info("Starting inference in synchronous mode")
        res = exec_net.infer(inputs={input_blob: [image]})

        # Processing output blob
        log.info("Processing output blob")
        res = res[out_blob]
        label_index = np.argmax(res, 1)
        label_txt = labels[label_index[0]]
        cv2.putText(src, label_txt, (10, 50), cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 0, 255), 2, 8)
        cv2.imshow("ResNet18 - from Pytorch image classification", src)
        cv2.waitKey(0)
        cv2.destroyAllWindows()


    if __name__ == "__main__":
        image_classification()
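The script above reports only the single best class. As a hedged illustration of the output-processing step, the sketch below turns raw scores into probabilities with a softmax and returns the k highest-ranked classes. It works on plain Python lists rather than the actual network output, and `softmax` and `top_k` are illustrative helpers, not OpenVINO APIs. It assumes the exported network emits raw logits, which holds for torchvision's resnet18 unless a softmax layer is appended before export.

```python
import math


def softmax(scores):
    """Convert raw scores to probabilities; subtract the max for numerical stability."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(scores, k=5):
    """Return (index, probability) pairs for the k highest-scoring classes."""
    probs = softmax(scores)
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(i, probs[i]) for i in ranked[:k]]


if __name__ == "__main__":
    logits = [1.0, 3.0, 2.0, 0.5]  # made-up scores for four classes
    for index, prob in top_k(logits, k=2):
        print(index, round(prob, 3))
```

With the script's result array, the scores for the first image could be passed in as `res[0].tolist()`, and each returned index looked up in `labels`.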

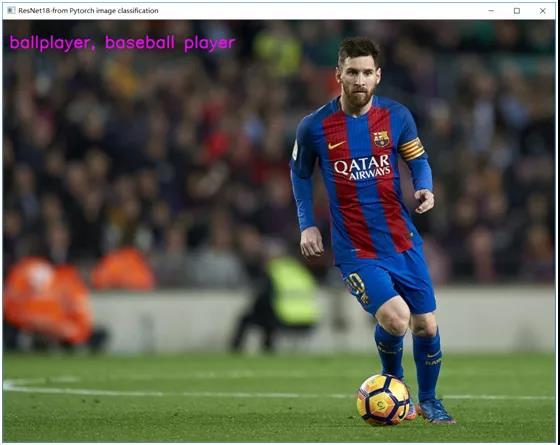

The results are as follows: