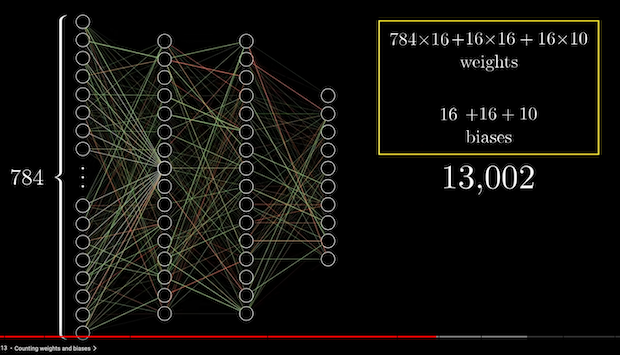

In the example from 3b1b's video about Neural Network (

Then I built the same model in TensorFlow, and model.summary() shows the following:

    Model: "model_1"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_1 (InputLayer)         [(None, 784, 1)]          0
    dense_8 (Dense)              (None, 784, 16)           32
    dense_9 (Dense)              (None, 784, 16)           272
    dense_10 (Dense)             (None, 784, 10)           170
    =================================================================
    Total params: 474
    Trainable params: 474
    Non-trainable params: 0
    _________________________________________________________________

Code used to produce the above:

    # I'm using Keras through Julia, so the code may look slightly different
    input_shape = (784, 1)
    inputs = layers.Input(input_shape)
    outputs = layers.Dense(16)(inputs)
    outputs = layers.Dense(16)(outputs)
    outputs = layers.Dense(10)(outputs)
    model = keras.Model(inputs, outputs)
    model.summary()

The parameter counts don't seem to reflect the input shape at all. So I built another model with input_shape = (1, 1), and I got the same total params:

    Model: "model_3"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_10 (InputLayer)        [(None, 1, 1)]            0
    dense_72 (Dense)             (None, 1, 16)             32
    dense_73 (Dense)             (None, 1, 16)             272
    dense_74 (Dense)             (None, 1, 10)             170
    =================================================================
    Total params: 474
    Trainable params: 474
    Non-trainable params: 0
    _________________________________________________________________

I don't think it's a bug; I probably just don't understand what these numbers mean or how the params are calculated.

Any help would be much appreciated.

Answer:

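A Dense layer is applied to the last dimension of its input. With input_shape = (784, 1) that last dimension is 1, not 784, so the first layer only gets a (1, 16) kernel plus 16 biases, which is where the 32 params come from. The same holds for input_shape = (1, 1), hence the identical totals. What you actually want is: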

    import tensorflow as tf

    # A flat vector of 784 features, with no trailing axis of size 1
    input_shape = (784,)
    inputs = tf.keras.layers.Input(input_shape)
    outputs = tf.keras.layers.Dense(16)(inputs)
    outputs = tf.keras.layers.Dense(16)(outputs)
    outputs = tf.keras.layers.Dense(10)(outputs)
    model = tf.keras.Model(inputs, outputs)
    model.summary()

    Model: "model"
    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    input_2 (InputLayer)         [(None, 784)]             0
    dense_3 (Dense)              (None, 16)                12560
    dense_4 (Dense)              (None, 16)                272
    dense_5 (Dense)              (None, 10)                170
    =================================================================
    Total params: 13,002
    Trainable params: 13,002
    Non-trainable params: 0
    _________________________________________________________________
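The counts in both summaries can be checked by hand: a Dense layer has one weight per (input feature, unit) pair plus one bias per unit, where "input feature" means the size of the last axis only. Here is a minimal sketch of that arithmetic in plain Python. The helper names dense_params and total_params are made up for illustration, and the sketch assumes the default use_bias=True:

```python
def dense_params(last_dim, units, use_bias=True):
    """Parameter count of a Dense layer: kernel (last_dim x units) plus optional bias."""
    return last_dim * units + (units if use_bias else 0)


def total_params(last_dim, layer_units):
    """Sum the parameter counts of a stack of Dense layers."""
    total = 0
    for units in layer_units:
        total += dense_params(last_dim, units)
        last_dim = units  # the next layer sees this layer's units as its last axis
    return total


flat_total = total_params(784, [16, 16, 10])   # input_shape = (784,)
column_total = total_params(1, [16, 16, 10])   # input_shape = (784, 1) or (1, 1)
print(flat_total, column_total)  # 13002 474
```

Only the first layer differs between the two cases: 784 * 16 + 16 = 12560 versus 1 * 16 + 16 = 32. The later layers see 16 features either way, so they stay at 272 and 170.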

From the TF docs:

Note: If the input to the layer has a rank greater than 2, then Dense computes the dot product between the inputs and the kernel along the last axis of the inputs and axis 0 of the kernel (using tf.tensordot). For example, if input has dimensions (batch_size, d0, d1), then we create a kernel with shape (d1, units), and the kernel operates along axis 2 of the input, on every sub-tensor of shape (1, 1, d1) (there are batch_size * d0 such sub-tensors). The output in this case will have shape (batch_size, d0, units).
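To make the quoted behaviour concrete, here is a rough pure-Python sketch of what "operating along the last axis" means for a (batch_size, d0, d1) input. It is not how TensorFlow implements Dense; nested lists stand in for tensors, and the helper names dense_last_axis and shape_of are hypothetical:

```python
def dense_last_axis(x, kernel, bias):
    """Apply y = x . kernel + bias to every innermost vector of a nested list."""
    if not isinstance(x[0], list):
        # x is a single vector of length d1; kernel has shape (d1, units)
        return [
            sum(x[i] * kernel[i][j] for i in range(len(x))) + bias[j]
            for j in range(len(bias))
        ]
    # Otherwise recurse: the leading axes are just looped over
    return [dense_last_axis(sub, kernel, bias) for sub in x]


def shape_of(t):
    """Shape of a regular nested list, as a tuple."""
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]
    return tuple(dims)


batch_size, d0, d1, units = 2, 784, 1, 16
x = [[[1.0] * d1 for _ in range(d0)] for _ in range(batch_size)]
kernel = [[0.5] * units for _ in range(d1)]  # shape (d1, units) = (1, 16)
bias = [0.0] * units

out = dense_last_axis(x, kernel, bias)
n_params = d1 * units + units  # kernel entries plus biases

print(shape_of(out))  # (2, 784, 16)
print(n_params)       # 32
```

The same 16 weights and 16 biases are reused at each of the 784 positions, which is why the parameter count does not depend on d0.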