I'm trying to deploy a Helm chart using the Terraform Helm provider. With the code below, I'm able to create a Helm release and the namespace for its resources.

```hcl
provider "helm" {
  kubernetes {
    kube_config_path = trim(base64decode(data.test.config.result.config_path), "\n")

    exec {
      api_version = "client.authentication.k8s.io/v1"
      command     = "kubelogin"
      args = [
        "get-token",
        "--login", "spn",
        "--environment", "AzurePublicCloud",
        "--server-id", "6dae42f8-4368-4678-94ff-3960e28e3630",
        "--tenant-id", data.azurerm_client_config.current.tenant_id,
        "--client-id", data.azurerm_client_config.current.client_id,
        "--client-secret", data.azurerm_key_vault_secret.service_principal_key.value,
      ]
    }
  }

  debug = true
}

resource "helm_release" "helmrelname" {
  name             = "helmrelname"
  repository       = "https://mycompany.github.io/charts"
  chart            = "helmrelname1"
  namespace        = "helmrelname-test"
  create_namespace = true
  timeout          = 800
  wait_for_jobs    = true
  wait             = true
  force_update     = true

  set {
    name  = "helmrelname.monitoring.create"
    value = "false"
    type  = "auto"
  }

  depends_on = [data.test.config]

  lifecycle {
    ignore_changes = all
  }
}
```

When I run `terraform apply`, the execution fails with the following error:

```
query: failed to query with labels: secrets is forbidden: User "3df53t-3fea-48b4-a932-3061e1fec6cc" cannot list resource "secrets" in API group "" in the namespace "helmrelname-test"
```

What should I do to resolve this?

Note: `3df53t-3fea-48b4-a932-3061e1fec6cc` is the service principal's object ID.

Answer:

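The issue seems to be caused by the service principal's permissions: Helm 3 stores release metadata as Secrets in the release namespace, so the identity running Helm must be allowed to list Secrets there. I reproduced the setup with the code below.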

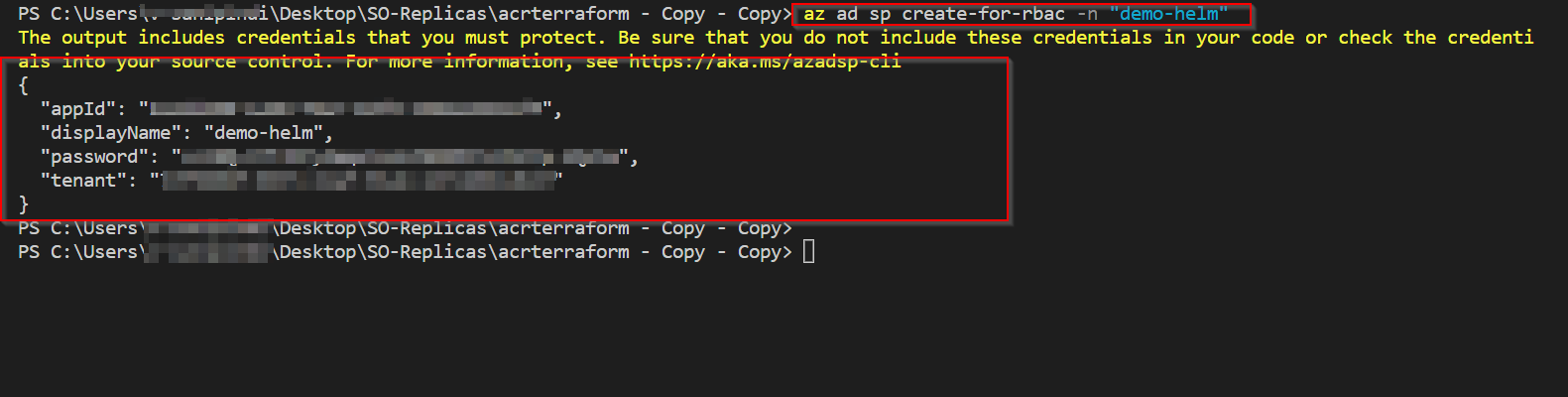

Step 1: Create a service principal by running the command below:

```shell
az ad sp create-for-rbac -n "demo-helm"
```

The JSON output includes the `appId`, `password`, and `tenant` values.

Step 2: Write the `main.tf` file as follows. NOTE: Copy the `password` and `appId` values from the output above into it.

```hcl
data "azurerm_resource_group" "example" {
  name = "*********"
}

data "azuread_client_config" "current" {}

provider "helm" {
  kubernetes {
    // kube_config_path = trim(base64decode(data.test.config.result.config_path), "\n")

    exec {
      api_version = "client.authentication.k8s.io/v1"
      command     = "kubelogin"
      args = [
        "get-token",
        "--login", "spn",
        "--environment", "AzurePublicCloud",
        "--server-id", "*****************************",
        "--tenant-id", "*****************************",
        "--client-id", "*****************************",
        "--client-secret", "*****************************",
      ]
    }
  }

  debug = true
}

resource "helm_release" "helmrelname" {
  name             = "helmrelname"
  repository       = "https://mycompany.github.io/charts"
  chart            = "helmrelname1"
  namespace        = "helmrelname-test"
  create_namespace = true
  timeout          = 800
  wait_for_jobs    = true
  wait             = true
  force_update     = true

  set {
    name  = "helmrelname.monitoring.create"
    value = "false"
    type  = "auto"
  }

  //depends_on = [data.test.config]

  lifecycle {
    ignore_changes = all
  }
}
```
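Rather than pasting the service principal secret directly into `main.tf`, the `exec` arguments could read their values from input variables. This is a minimal sketch, assuming the hypothetical variable names `tenant_id`, `sp_client_id`, and `sp_client_secret`, supplied for example through `TF_VAR_*` environment variables or an untracked `*.tfvars` file. It would replace the `provider "helm"` block above rather than sit alongside it, and the cluster connection settings are left out, as in Step 2.

```hcl
// Hypothetical variable names; supply values via TF_VAR_tenant_id,
// TF_VAR_sp_client_id and TF_VAR_sp_client_secret, or an untracked *.tfvars file.
variable "tenant_id" {
  type = string
}

variable "sp_client_id" {
  type = string
}

variable "sp_client_secret" {
  type      = string
  sensitive = true // redacted from plan/apply output
}

provider "helm" {
  kubernetes {
    // Cluster connection settings omitted, as in Step 2.
    exec {
      api_version = "client.authentication.k8s.io/v1"
      command     = "kubelogin"
      args = [
        "get-token",
        "--login", "spn",
        "--environment", "AzurePublicCloud",
        "--server-id", "6dae42f8-4368-4678-94ff-3960e28e3630",
        "--tenant-id", var.tenant_id,
        "--client-id", var.sp_client_id,
        "--client-secret", var.sp_client_secret,
      ]
    }
  }

  debug = true
}
```

Marking the secret as `sensitive` keeps Terraform from printing it in plan output, although the value is still stored in the state file.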

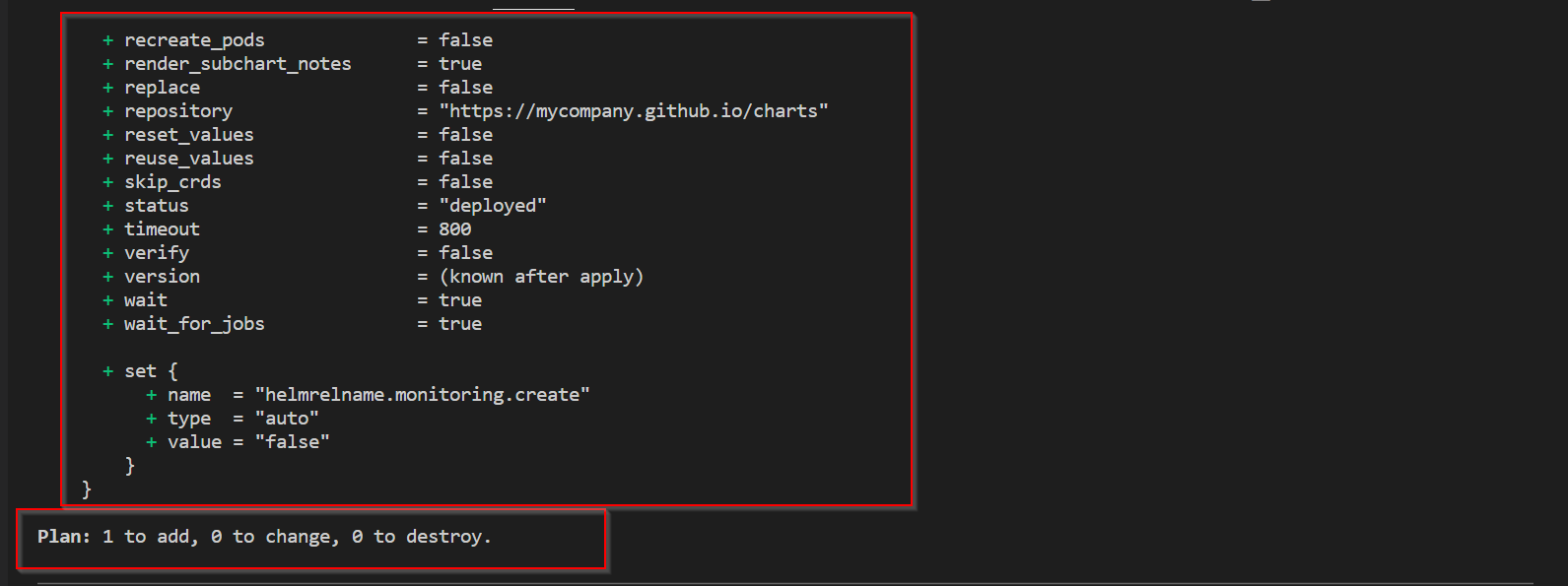

Step 3: Run `terraform plan` and `terraform apply`:

```shell
terraform plan
terraform apply -auto-approve
```

NOTE: Access to a valid chart repository must be configured in the portal.

Answer:

I added a ClusterRoleBinding for the `cluster-admin` role to the object ID `3df53t-3fea-48b4-a932-3061e1fec6cc`, and the issue was resolved.

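Command used (`kubectl create clusterrolebinding` requires a name for the binding; substitute your own for `<binding-name>`):

```shell
kubectl create clusterrolebinding <binding-name> --clusterrole=cluster-admin --user="3df53t-3fea-48b4-a932-3061e1fec6cc"
```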
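The same binding could also be managed from Terraform with the `kubernetes_cluster_role_binding` resource of the hashicorp/kubernetes provider. This is a sketch, assuming that provider is already configured against the same cluster with an identity that is allowed to create RBAC objects (the service principal cannot grant this permission to itself). The resource label and the binding name `helm-sp-cluster-admin` are hypothetical.

```hcl
// Sketch: Terraform equivalent of the kubectl command above.
// Assumes a separately configured "kubernetes" provider with RBAC admin rights.
// The binding name "helm-sp-cluster-admin" is hypothetical.
resource "kubernetes_cluster_role_binding" "helm_sp_cluster_admin" {
  metadata {
    name = "helm-sp-cluster-admin"
  }

  // Grant the built-in cluster-admin ClusterRole...
  role_ref {
    api_group = "rbac.authorization.k8s.io"
    kind      = "ClusterRole"
    name      = "cluster-admin"
  }

  // ...to the service principal, identified in Kubernetes RBAC by its object ID.
  subject {
    api_group = "rbac.authorization.k8s.io"
    kind      = "User"
    name      = "3df53t-3fea-48b4-a932-3061e1fec6cc"
  }
}
```

If the release is managed in the same configuration, `helm_release` could list `kubernetes_cluster_role_binding.helm_sp_cluster_admin` in its `depends_on` so the binding exists before Helm runs. Keep in mind that `cluster-admin` gives the service principal full control over the cluster.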