I have an input form validator like this:

<input pattern="[a-zA-Z]{2,}" .../>

This works fine for validating the HTML input.

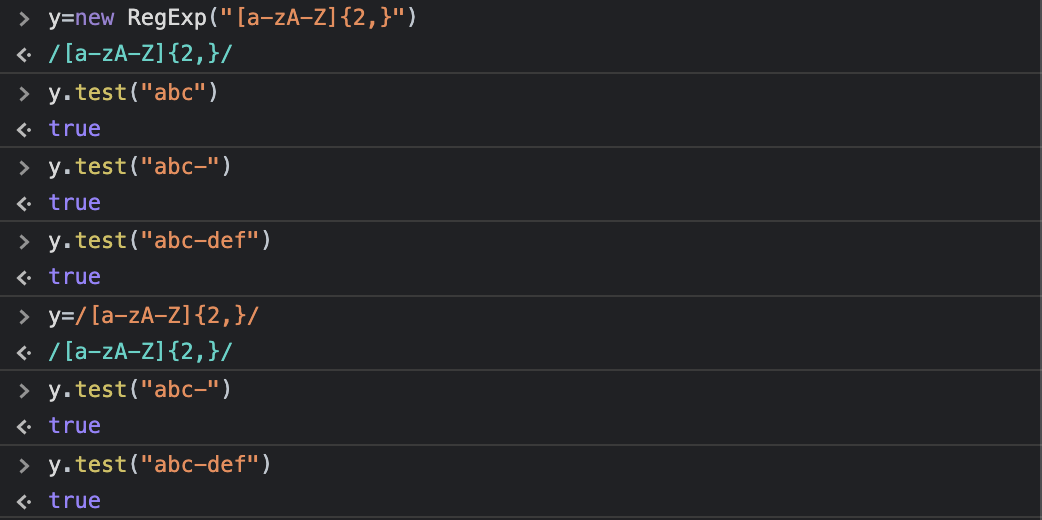

However, when I try to use the same pattern in JavaScript:

const regExp = new RegExp("[a-zA-Z]{2,}");

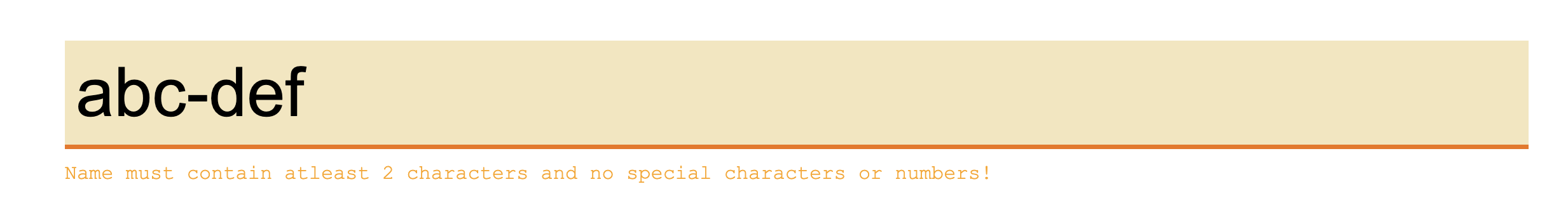

This regex does not behave the same way as it does in HTML.

Can someone tell me what I am missing? Ideally, I would like to pass the regex as a string to a utility function rather than using a /.../ regex literal.

CodePudding user response:

You are missing the start (^) and end ($) anchors. The following should behave in JS the same way the pattern attribute of the input does.

const regExp = new RegExp("^[a-zA-Z]{2,}$");

The pattern attribute of an input is anchored implicitly: the browser checks the value as a whole, as if the pattern were wrapped in ^(?: and )$. In JS, a regular expression without anchors can match anywhere in the text, so test() returns true as soon as any substring matches, for example two letters inside a longer string that also contains digits. Adding ^ and $ forces the entire string to match.
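Since the question asks for a utility function that takes the regex as a string, here is a minimal sketch of one way to do it. The helper name fullMatchRegExp is hypothetical (it is not part of any API), and the sample inputs are only for illustration. It wraps the string in ^(?: ... )$ so that a pattern containing an alternation such as a|b stays fully anchored.

```javascript
// Hypothetical helper (name chosen for illustration): builds a RegExp that
// must match the entire string, similar to how the pattern attribute behaves.
function fullMatchRegExp(patternString) {
  // The non-capturing group keeps alternations like "a|b" inside the anchors.
  return new RegExp("^(?:" + patternString + ")$");
}

const unanchored = new RegExp("[a-zA-Z]{2,}");
const anchored = fullMatchRegExp("[a-zA-Z]{2,}");

console.log(unanchored.test("ab1")); // true: "ab" matches somewhere in the string
console.log(anchored.test("ab1"));   // false: the whole string must match
console.log(anchored.test("ab"));    // true
console.log(anchored.test("a"));     // false: fewer than two letters
```

If you only ever use this one pattern, writing the anchors directly into the string, as in the answer above, is equivalent.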