Software versions:

- Windows 10 64-bit
- TensorFlow 1.15
- TensorFlow Object Detection API 1.x
- Python 3.6.5
- VS2015 VC++
- CUDA 10.0

Hardware:

- CPU: i7
- GPU: GTX 1050 Ti

For how to install the TensorFlow Object Detection API framework, see the article:

The TensorFlow Object Detection API finally supports both TensorFlow 1.x and TensorFlow 2.x

Dataset processing and TFRecord generation

First, download the dataset from:

https://pan.baidu.com/s/1UbFkGm4EppdAU660Vu7SdQ

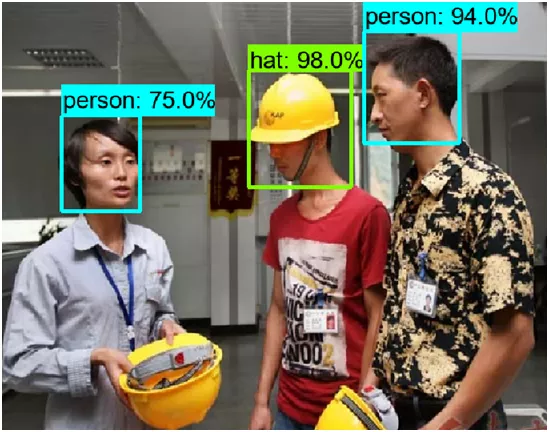

The dataset contains 7581 images, fully annotated in Pascal VOC2012 format. There are two classes: hat (a person wearing a safety helmet) and person (a person without a helmet). The label map, in the Object Detection API's pbtxt format, is:

```
item {
  id: 1
  name: 'hat'
}

item {
  id: 2
  name: 'person'
}
```
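If you want to regenerate this label map for a different class list, a small helper can write it out. This is a minimal sketch that is not part of the original workflow; the function name `make_label_map` is hypothetical. It follows the Object Detection API convention that label-map IDs start at 1, because 0 is reserved for the background class.

```python
def make_label_map(class_names):
    """Render a TF Object Detection API label map (pbtxt text) from class names.

    IDs start at 1 because ID 0 is reserved for the background class.
    """
    items = []
    for idx, name in enumerate(class_names, start=1):
        items.append("item {\n  id: %d\n  name: '%s'\n}\n" % (idx, name))
    return "\n".join(items)


if __name__ == "__main__":
    # Prints the same two entries as the label map shown above
    print(make_label_map(["hat", "person"]))
```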

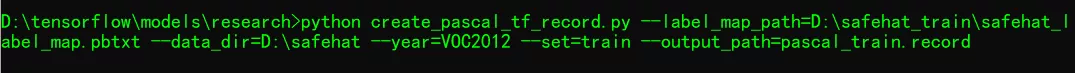

The downloaded dataset cannot be converted to TFRecord directly with the conversion script of the TensorFlow Object Detection API, mainly because several XML annotation files and JPEG images have format errors. I fixed all of them through trial and error. With the corrected data, the TFRecord files for the training set and the validation set are generated by running the conversion script twice, once for each split.
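The post does not list the exact defects that were fixed. As a hedged starting point, the sketch below flags two kinds of problem that, as far as I know, make create_pascal_tf_record.py fail: annotation XML files that do not parse (or lack a `<filename>` element), and files with a .jpg extension that are not actually JPEG data (the script checks that each image's format is JPEG). The function name `find_bad_samples` and the directory names in the usage note are assumptions.

```python
import os
import xml.etree.ElementTree as ET

# Every JPEG file starts with the SOI marker FF D8 followed by another FF marker byte
JPEG_MAGIC = b"\xff\xd8\xff"


def find_bad_samples(annotations_dir, images_dir):
    """Return (bad_xml, bad_jpeg): sorted file names that look broken.

    Heuristic checks only: an annotation must parse as XML and contain a
    <filename> element; a .jpg/.jpeg file must begin with the JPEG magic bytes.
    """
    bad_xml, bad_jpeg = [], []
    for name in sorted(os.listdir(annotations_dir)):
        if not name.lower().endswith(".xml"):
            continue
        try:
            root = ET.parse(os.path.join(annotations_dir, name)).getroot()
        except ET.ParseError:
            bad_xml.append(name)
            continue
        if root.find("filename") is None:
            bad_xml.append(name)
    for name in sorted(os.listdir(images_dir)):
        if not name.lower().endswith((".jpg", ".jpeg")):
            continue
        with open(os.path.join(images_dir, name), "rb") as f:
            if f.read(3) != JPEG_MAGIC:
                bad_jpeg.append(name)
    return bad_xml, bad_jpeg
```

For example, `find_bad_samples("D:/safehat/VOC2012/Annotations", "D:/safehat/VOC2012/JPEGImages")` (hypothetical paths) lists the files to repair or re-encode before running the conversion.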

Note that line 165 of the create_pascal_tf_record.py script must be changed from:

`'aeroplane_' + FLAGS.set + '.txt')`

to:

`FLAGS.set + '.txt')`

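The reason is that this dataset provides a single train/val split (train.txt and val.txt) rather than per-class split files such as aeroplane_train.txt, so the file name has to be changed. After saving the change, run the commands and the TFRecord files will be generated correctly; without the change, you will run into errors.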

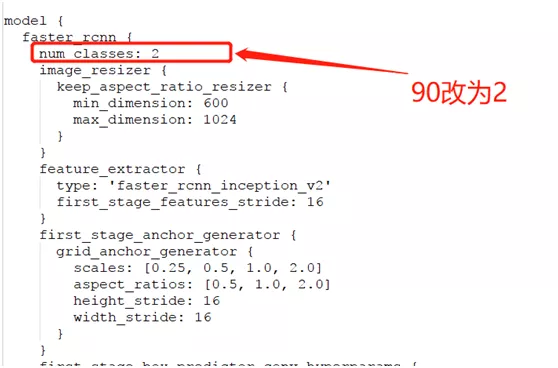

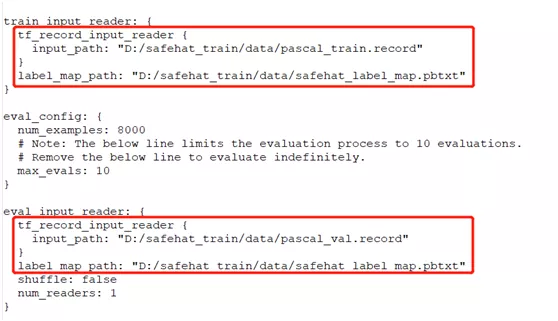
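To illustrate what the modified line does, the sketch below mirrors how the script locates the split file and reads image IDs from it, and assembles the two conversion commands (one for train, one for val). It is an illustration under assumptions: `--data_dir`, `--year`, `--set`, `--output_path` and `--label_map_path` are flags of create_pascal_tf_record.py, but the helper names and all paths are hypothetical. The script expects the split files under `<data_dir>/VOC2012/ImageSets/Main`.

```python
import os


def read_split_ids(data_dir, year, split):
    """Read image IDs for one split the way the patched script does.

    After the edit at line 165 the script opens
    <data_dir>/<year>/ImageSets/Main/<split>.txt instead of aeroplane_<split>.txt.
    """
    examples_path = os.path.join(data_dir, year, "ImageSets", "Main", split + ".txt")
    with open(examples_path) as f:
        # Keep the first token of each non-empty line (the image ID)
        return [line.split()[0] for line in f if line.strip()]


def tfrecord_command(data_dir, split, output_path, label_map_path):
    """Build the argument list for one run of create_pascal_tf_record.py."""
    return [
        "python", "object_detection/dataset_tools/create_pascal_tf_record.py",
        "--data_dir=" + data_dir,
        "--year=VOC2012",
        "--set=" + split,
        "--output_path=" + output_path,
        "--label_map_path=" + label_map_path,
    ]


if __name__ == "__main__":
    # Hypothetical paths; run the printed commands from the models/research directory
    for split in ("train", "val"):
        print(" ".join(tfrecord_command("D:/safehat", split,
                                        "D:/safehat/%s.record" % split,
                                        "D:/safehat/label_map.pbtxt")))
```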

Next, the detector is trained by transfer learning from the faster_rcnn_inception_v2_coco object detection model. First, configure the pipeline config file for transfer learning. The sample config files are located in:

research\object_detection\samples\configs

In that directory, find the file:

faster_rcnn_inception_v2_coco.config

Then modify the relevant parts of the config file. For how to modify it, see:

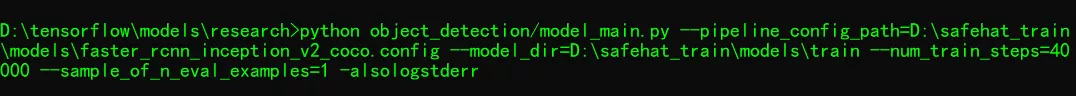
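For a two-class Faster R-CNN setup, the fields that typically need changing in faster_rcnn_inception_v2_coco.config are `num_classes`, `fine_tune_checkpoint`, `num_steps`, and the `input_path`/`label_map_path` entries of `train_input_reader` and `eval_input_reader`. The sketch below patches those fields with regular expressions. It is a hypothetical helper, assumes the sample config's layout (train reader before eval reader, a single `num_classes` and `num_steps` line), and is no substitute for reviewing the edited file by hand. Using forward slashes in paths avoids backslash escapes inside the quoted config strings.

```python
import re


def _replace(text, pattern, value, count=0):
    # A function replacement keeps backslashes in value from being treated as escapes
    return re.sub(pattern, lambda m: value, text, count=count)


def patch_pipeline_config(text, num_classes, num_steps, fine_tune_checkpoint,
                          train_record, val_record, label_map_path):
    """Return a copy of the pipeline config text with the usual fields replaced."""
    text = _replace(text, r"num_classes: \d+", "num_classes: %d" % num_classes)
    text = _replace(text, r"num_steps: \d+", "num_steps: %d" % num_steps)
    text = _replace(text, r'fine_tune_checkpoint: "[^"]*"',
                    'fine_tune_checkpoint: "%s"' % fine_tune_checkpoint)
    text = _replace(text, r'label_map_path: "[^"]*"',
                    'label_map_path: "%s"' % label_map_path)
    # input_path appears twice: first in train_input_reader, then in eval_input_reader
    records = iter([train_record, val_record])
    text = re.sub(r'input_path: "[^"]*"',
                  lambda m: 'input_path: "%s"' % next(records), text, count=2)
    return text
```

Read the sample config into a string, call `patch_pipeline_config(text, 2, 40000, ...)` with your own paths, and write the result to a new pipeline.config.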

When this is done, create the new directories on drive D and run the training script with the required command-line parameters.

Training then starts and runs for 40000 steps in total; during training, you can follow the results in TensorBoard.
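The training and TensorBoard commands themselves are not reproduced in this post. As a sketch only: in the TF1 Object Detection API, training is usually launched with object_detection/model_main.py, which takes `--pipeline_config_path`, `--model_dir` and `--num_train_steps`, and TensorBoard is pointed at the same model directory with `--logdir`. The helper below only assembles those argument lists; the directory layout under D:/safehat_train is an assumption loosely based on the paths in the Model Optimizer command later in this post.

```python
def training_commands(pipeline_config, model_dir, num_train_steps=40000):
    """Return (train_cmd, tensorboard_cmd) argument lists for TF1 OD API training."""
    train_cmd = [
        "python", "object_detection/model_main.py",
        "--pipeline_config_path=" + pipeline_config,
        "--model_dir=" + model_dir,
        "--num_train_steps=%d" % num_train_steps,
        "--alsologtostderr",
    ]
    # TensorBoard reads the event files that training writes into model_dir
    tensorboard_cmd = ["tensorboard", "--logdir=" + model_dir]
    return train_cmd, tensorboard_cmd


if __name__ == "__main__":
    train_cmd, tensorboard_cmd = training_commands(
        "D:/safehat_train/models/pipeline.config",  # hypothetical paths
        "D:/safehat_train/models/train")
    print(" ".join(train_cmd))
    print(" ".join(tensorboard_cmd))
    # To launch, e.g. from models/research: subprocess.run(train_cmd, check=True)
```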

Model export

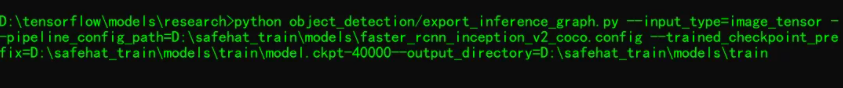

After the 40000 training steps finish, you will find the corresponding checkpoint files. The model export script provided by the TensorFlow Object Detection API freezes the checkpoint and exports it as a frozen graph in .pb format; run it with the corresponding command-line arguments.
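The export command is not shown here either. In the TF1 Object Detection API the export script is object_detection/export_inference_graph.py, which takes `--input_type`, `--pipeline_config_path`, `--trained_checkpoint_prefix` and `--output_directory`, and writes frozen_inference_graph.pb into the output directory. A hedged sketch that builds the arguments for the step-40000 checkpoint (helper name and paths are hypothetical):

```python
def export_command(pipeline_config, model_dir, step, output_dir):
    """Argument list for freezing the checkpoint saved at `step` into a .pb graph."""
    return [
        "python", "object_detection/export_inference_graph.py",
        "--input_type=image_tensor",
        "--pipeline_config_path=" + pipeline_config,
        # model_main.py names checkpoints model.ckpt-<global step>
        "--trained_checkpoint_prefix=%s/model.ckpt-%d" % (model_dir, step),
        "--output_directory=" + output_dir,
    ]


if __name__ == "__main__":
    print(" ".join(export_command("D:/safehat_train/models/pipeline.config",
                                  "D:/safehat_train/models/train", 40000,
                                  "D:/safehat_train/models/export")))
```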

With the .pb file in hand, use the tf_text_graph_faster_rcnn.py script from OpenCV 4.x to generate the text graph (.pbtxt) config file. The resulting files are:

- frozen_inference_graph.pb
- frozen_inference_graph.pbtxt
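A minimal sketch of that conversion step, assuming a local copy of OpenCV's samples/dnn directory (its location and the helper name are hypothetical). tf_text_graph_faster_rcnn.py takes `--input` (the frozen graph), `--config` (the training pipeline config) and `--output` (the text graph to write):

```python
def text_graph_command(samples_dnn_dir, frozen_pb, pipeline_config, output_pbtxt):
    """Argument list for OpenCV's tf_text_graph_faster_rcnn.py."""
    return [
        "python", samples_dnn_dir + "/tf_text_graph_faster_rcnn.py",
        "--input", frozen_pb,
        "--config", pipeline_config,
        "--output", output_pbtxt,
    ]


if __name__ == "__main__":
    print(" ".join(text_graph_command("opencv/samples/dnn",
                                      "frozen_inference_graph.pb",
                                      "pipeline.config",
                                      "frozen_inference_graph.pbtxt")))
```

The resulting pair can then be loaded by OpenCV's DNN module with `cv2.dnn.readNetFromTensorflow("frozen_inference_graph.pb", "frozen_inference_graph.pbtxt")`.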

Converting to OpenVINO IR files

Run the OpenVINO Model Optimizer from its model_optimizer directory (the transformations config path below is relative to it):

```
python mo_tf.py ^
  --input_model D:\safehat_train\models\train\frozen_inference_graph.pb ^
  --transformations_config extensions\front\tf\faster_rcnn_support_api_v1.15.json ^
  --tensorflow_object_detection_api_pipeline_config D:\safehat_train\models\pipeline.config ^
  --input_shape [1,600,600,3] ^
  --reverse_input_channels
```

Using the model for helmet detection

```python
from __future__ import print_function

import logging as log
import time

import cv2
from openvino.inference_engine import IECore

# Class names in label-map order (id 1 = hat, id 2 = person)
labels = ("hat", "person")


def safehat_detection():
    model_xml = "D:/projects/opencv_tutorial/data/models/safetyhat/frozen_inference_graph.xml"
    model_bin = "D:/projects/opencv_tutorial/data/models/safetyhat/frozen_inference_graph.bin"

    log.info("Creating Inference Engine")
    ie = IECore()
    # Read IR
    net = ie.read_network(model=model_xml, weights=model_bin)

    log.info("Preparing input blobs")
    # The Faster R-CNN IR has two inputs: the 4-D image tensor (NCHW) and the
    # 2-D image_info tensor [1, 3]. Select them by rank instead of relying on order.
    image_input, info_input = None, None
    for name, info in net.input_info.items():
        print(name, info.input_data.shape)
        if len(info.input_data.shape) == 4:
            image_input = name
        else:
            info_input = name
    out_blob = next(iter(net.outputs))

    # Read and pre-process the input image
    image = cv2.imread("D:/safehat/test/1.jpg")
    if image is None:
        raise FileNotFoundError("could not read the test image")
    ih, iw, ic = image.shape
    # Resize to 600x600, no scaling or mean subtraction, keep BGR order (swapRB=False)
    image_blob = cv2.dnn.blobFromImage(image, 1.0, (600, 600), (0, 0, 0), False)

    # Load the model to the plugin
    exec_net = ie.load_network(network=net, device_name="CPU")

    # Start sync inference; image_info is [height, width, scale] of the network input
    log.info("Starting inference in synchronous mode")
    inf_start1 = time.time()
    res = exec_net.infer(inputs={info_input: [[600, 600, 1.0]], image_input: image_blob})
    inf_end1 = time.time() - inf_start1
    print("inference time(ms) : %.3f" % (inf_end1 * 1000))

    # Process the output blob: each row is
    # [image_id, class_id, confidence, xmin, ymin, xmax, ymax] with normalised coordinates
    res = res[out_blob]
    for obj in res[0][0]:
        if obj[2] > 0.5:
            index = int(obj[1])
            xmin = int(obj[3] * iw)
            ymin = int(obj[4] * ih)
            xmax = int(obj[5] * iw)
            ymax = int(obj[6] * ih)
            cv2.putText(image, labels[index - 1], (xmin, ymin),
                        cv2.FONT_HERSHEY_SIMPLEX, 1.0, (255, 0, 0), 2)
            cv2.rectangle(image, (xmin, ymin), (xmax, ymax), (0, 0, 255), 2, 8, 0)

    cv2.imshow("safetyhat detection", image)
    cv2.waitKey(0)
    cv2.destroyAllWindows()


if __name__ == "__main__":
    log.basicConfig(level=log.INFO)
    safehat_detection()
```
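The output-processing loop above can be factored into a pure function, which makes the DetectionOutput layout explicit and is easy to test without a model. This is a sketch, not part of the original script: the name `parse_detections` is hypothetical, and stopping at a negative image_id follows the convention in OpenVINO's SSD samples, where unused rows are padded with image_id = -1.

```python
def parse_detections(detections, image_w, image_h, labels, threshold=0.5):
    """Convert DetectionOutput rows to (label, confidence, pixel box) tuples.

    Each row is [image_id, class_id, confidence, xmin, ymin, xmax, ymax] with
    coordinates normalised to [0, 1]; class_id is 1-based, as in the label map.
    """
    boxes = []
    for row in detections:
        image_id, class_id, conf = row[0], int(row[1]), float(row[2])
        if image_id < 0:  # padding rows mark the end of valid detections
            break
        if conf <= threshold:
            continue
        box = (int(row[3] * image_w), int(row[4] * image_h),
               int(row[5] * image_w), int(row[6] * image_h))
        boxes.append((labels[class_id - 1], conf, box))
    return boxes
```

In the script above, `parse_detections(res[0][0], iw, ih, labels)` would replace the loop body's coordinate arithmetic, leaving only the drawing calls.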