I am new to Pandas and somewhat new to Python.

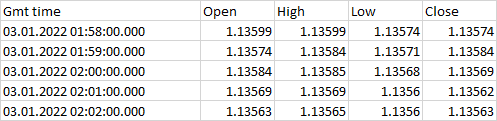

I am looking at stock data, which I read in as CSV and typical size is 500,000 rows. The data looks like this

I need to check the data against itself. The basic algorithm is a loop similar to this:

    ROW = 0
    x = get "Low" price in row ROW
    y = CalculateSomething(x)
    go through the rest of the data, compare against y
    if (a):
        append ("A") at the end of row ROW in the dataframe
    else:
        append ("B") at the end of row ROW
    ROW = ROW + 1

On the next iteration, the data pointer should reset to row ROW + 1 and the same process repeats; each time, it adds notes to the dataframe at index ROW.

I looked at Pandas and figured the way to try this would be two nested loops, with a copy of the dataframe so that there are two separate instances.

The actual code looks like this (simplified):

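    import pandas as pd

    df = pd.read_csv('data.csv')

    calc1 = 1  # this part is confidential so set to something simple
    calc2 = 2  # this part is confidential so set to something simple

    def func3_df_index(df):
        # Write the notes into a copy so the scanned data stays unchanged
        dfouter = df.copy()
        for outerindex in dfouter.index:
            dfouter_openval = dfouter.at[outerindex, "Open"]  # not used in this simplified version
            # The inner scan starts at the first row every time;
            # rows before outerindex fall through to the else branch
            for index in df.index:
                if df.at[index, "Low"] <= calc1 and index >= outerindex:
                    dfouter.at[outerindex, 'notes'] = "message 1"
                    break
                elif df.at[index, "High"] >= calc2 and index >= outerindex:
                    dfouter.at[outerindex, 'notes'] = "message2"
                    break
                else:
                    dfouter.at[outerindex, 'notes'] = "message3"
        return dfouter  # without this, the notes are lost when the function returns

    result = func3_df_index(df)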

This method is slow: about 7 minutes per 5,000 rows, which will be far too long for 500,000 rows, and some datasets may exceed 1 million rows.

I have tried the two-loop method with the following variants:

- using `iloc`, e.g. `df.iloc[index, 2]`
- using `at`, e.g. `df.at[index, "Low"]`
- using NumPy and `at`, e.g. `df.at[index, "Low"] = np.where((df.at[index, "Low"] < ...`

The data consists of floating-point values and a datetime string.

Is it better to use NumPy? Is there an alternative to using two loops? Other approaches outside Python, such as R, MongoDB or some other database, would also be useful: I just need the results, and they don't have to come from Python.

Any help or suggested constructs would be much appreciated.

Thanks in advance

Answer:

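Split the main dataset into smaller datasets, e.g. 50 sub-datasets of 10,000 rows each, to increase speed. Run the function on each sub-dataset concurrently and then combine the results. Use processes (e.g. `multiprocessing`) rather than threads for this, since CPython threads do not run pure-Python loops in parallel, and keep in mind that each chunk still needs access to the rows after it, because every row is compared against the rest of the data.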
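
A minimal sketch of the chunking idea, assuming the constant thresholds from the simplified code (`calc1`, `calc2`) and the question's message strings. The helper names `label_range` and `label_parallel` are hypothetical. The *outer* rows are split into chunks, but every worker still receives the full `low`/`high` data because each row scans the rows after it.

```python
import concurrent.futures


def label_range(low, high, calc1, calc2, start, stop):
    """Label outer rows start..stop-1 using the question's rules (hypothetical helper).

    low/high must be the FULL columns: a row may need rows past the end of its chunk.
    """
    n = len(low)
    notes = []
    for i in range(start, stop):
        note = "message3"  # default when no row from i onwards meets either condition
        for j in range(i, n):  # start at i instead of testing index >= outerindex
            if low[j] <= calc1:  # the Low check comes first, as in the if/elif
                note = "message 1"
                break
            if high[j] >= calc2:
                note = "message2"
                break
        notes.append(note)
    return notes


def label_parallel(low, high, calc1, calc2, n_chunks=4,
                   executor_cls=concurrent.futures.ProcessPoolExecutor):
    """Split the outer rows into n_chunks ranges and label each range in a worker."""
    n = len(low)
    bounds = [n * k // n_chunks for k in range(n_chunks + 1)]
    with executor_cls() as pool:
        futures = [
            pool.submit(label_range, low, high, calc1, calc2, start, stop)
            for start, stop in zip(bounds[:-1], bounds[1:])
        ]
        notes = []
        for future in futures:  # futures are kept in chunk order, so row order is preserved
            notes.extend(future.result())
    return notes
```

With the default process pool, call it from under `if __name__ == "__main__":` and pass plain lists or arrays, e.g. `label_parallel(df["Low"].to_numpy(), df["High"].to_numpy(), calc1, calc2)`. Each `submit` pickles the full columns, which is acceptable for a handful of chunks. The `executor_cls` parameter only exists so the chunking can be checked with a thread pool; threads would not give a speedup here.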

Answer:

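You are copying the dataframe and manually looping over the indices. This will almost always be slower than vectorized operations. It also scales badly: the inner loop restarts from the first row for every outer row, so the work grows quadratically, and going from 5,000 to 500,000 rows multiplies it by roughly 10,000, not 100.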
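
As an illustration of a vectorized version, here is a sketch that assumes `calc1` and `calc2` are constants, as in the simplified code; if the real thresholds depend on each row, this exact trick does not carry over. For every row it finds the first row at or after it where `Low <= calc1` and the first where `High >= calc2` (a reverse running minimum over row positions), then compares the two positions. The function names are hypothetical.

```python
import numpy as np
import pandas as pd


def next_true_index(mask):
    """For each position i, return the smallest j >= i with mask[j] True, or len(mask) if none."""
    n = len(mask)
    positions = np.where(mask, np.arange(n), n)  # own position where True, sentinel n elsewhere
    # A running minimum taken from the end backwards gives the nearest True at or after i.
    return np.minimum.accumulate(positions[::-1])[::-1]


def label_vectorized(df, calc1, calc2):
    """Hypothetical vectorized version of the question's loop for constant calc1/calc2."""
    n = len(df)
    next_low = next_true_index(df["Low"].to_numpy() <= calc1)
    next_high = next_true_index(df["High"].to_numpy() >= calc2)
    notes = np.select(
        [
            # Low condition reached first; it also wins ties, like the if/elif order.
            (next_low < n) & (next_low <= next_high),
            # Otherwise, any High hit means the High condition came first.
            next_high < n,
        ],
        ["message 1", "message2"],
        default="message3",
    )
    out = df.copy()
    out["notes"] = notes
    return out
```

Every step is a whole-array NumPy operation, so the cost grows roughly linearly with the number of rows instead of quadratically.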

If you only care about one row at a time, you can simply use the `csv` module.
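
A minimal sketch of the row-at-a-time approach with the standard `csv` module. The `Date` column name and the sample values are hypothetical; `Open`, `High` and `Low` follow the question's code. `csv` yields strings, so the price columns are converted to `float` explicitly.

```python
import csv
import io


def iter_prices(f):
    """Yield one row at a time as a dict, with the price columns converted to float."""
    for row in csv.DictReader(f):
        for col in ("Open", "High", "Low"):
            row[col] = float(row[col])
        yield row  # other columns, such as the datetime string, stay as text


# Hypothetical in-memory sample; for a real file use: open("data.csv", newline="")
SAMPLE = (
    "Date,Open,High,Low\n"
    "2020-01-02 09:30,10.0,10.5,9.8\n"
    "2020-01-02 09:31,10.1,10.6,9.9\n"
)

# list() is only for the demo; iterating directly keeps just one row in memory.
rows = list(iter_prices(io.StringIO(SAMPLE)))
```

Because the question's algorithm also looks at the rows *after* the current one, this streaming style fits best when that look-ahead can be kept small.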

NumPy is not inherently "better": pandas uses NumPy internally.
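
Still, pulling the columns out as NumPy arrays once, instead of calling `df.at[...]` inside the inner loop, should cut the per-lookup overhead considerably while keeping the same nested-loop logic. A sketch, in which `low_limits` and `high_limits` are hypothetical per-row thresholds standing in for the confidential `calc1`/`calc2`; it is still quadratic in the worst case, just with a smaller constant.

```python
import pandas as pd


def label_with_arrays(df, low_limits, high_limits):
    """Same scan as the question's nested loop, but over plain NumPy arrays.

    low_limits[i] / high_limits[i] are hypothetical per-row thresholds standing
    in for the confidential calc1 / calc2 (which may depend on row i).
    """
    low = df["Low"].to_numpy()    # extracted once; no df.at lookup per step
    high = df["High"].to_numpy()
    n = len(low)
    notes = ["message3"] * n      # default when no later row meets either condition
    for i in range(n):
        lo_lim, hi_lim = low_limits[i], high_limits[i]
        for j in range(i, n):     # begin at row i, so no "index >= outerindex" test
            if low[j] <= lo_lim:
                notes[i] = "message 1"
                break
            if high[j] >= hi_lim:
                notes[i] = "message2"
                break
    out = df.copy()
    out["notes"] = notes
    return out
```

For example, `label_with_arrays(df, df["Open"].to_numpy() * 0.98, df["Open"].to_numpy() * 1.02)` would use hypothetical thresholds derived from each row's `Open` price.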

Alternatively, load the data into a database, such as SQLite, MySQL/MariaDB, PostgreSQL or DuckDB, and run queries against it. This has the added advantage of converting the strings to floats on load, so numerical analysis is easier.
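
A small sketch of the database route with Python's built-in `sqlite3`, assuming hypothetical table and column names (`prices`, `Date`) and the simplified constant thresholds. Declaring the price columns as `REAL` makes SQLite convert the CSV's text values to numbers on insert; the query then finds, for each row, the first row at or after it that meets each condition. It shows the idea rather than a query tuned for 500,000 rows.

```python
import csv
import io
import sqlite3

# Hypothetical sample standing in for data.csv
SAMPLE = (
    "Date,Open,High,Low\n"
    "2020-01-02 09:30,4,1,5\n"
    "2020-01-02 09:31,4,1,3\n"
    "2020-01-02 09:32,4,1,0\n"
    "2020-01-02 09:33,4,9,5\n"
    "2020-01-02 09:34,4,1,5\n"
    "2020-01-02 09:35,4,1,5\n"
)

LABEL_SQL = """
WITH nxt AS (
    SELECT p.id,
           (SELECT MIN(q.id) FROM prices q
             WHERE q.id >= p.id AND q.Low <= :calc1) AS next_low,
           (SELECT MIN(q.id) FROM prices q
             WHERE q.id >= p.id AND q.High >= :calc2) AS next_high
    FROM prices p
)
SELECT id,
       CASE
           WHEN next_low IS NOT NULL
                AND (next_high IS NULL OR next_low <= next_high) THEN 'message 1'
           WHEN next_high IS NOT NULL THEN 'message2'
           ELSE 'message3'
       END AS notes
FROM nxt
ORDER BY id
"""


def load_csv(conn, f):
    conn.execute(
        "CREATE TABLE prices (id INTEGER PRIMARY KEY, Date TEXT,"
        " Open REAL, High REAL, Low REAL)"
    )
    # csv yields strings; REAL column affinity stores them as floating-point values
    conn.executemany(
        "INSERT INTO prices (Date, Open, High, Low) VALUES (:Date, :Open, :High, :Low)",
        csv.DictReader(f),
    )


conn = sqlite3.connect(":memory:")
load_csv(conn, io.StringIO(SAMPLE))
results = conn.execute(LABEL_SQL, {"calc1": 1, "calc2": 2}).fetchall()
```

The Low condition wins ties (`next_low <= next_high`), mirroring the `if`/`elif` order in the question's loop.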

If you really want to process the file in parallel directly from Python, you could move to Dask or PySpark. That said, Pandas should work with some tuning, and for a start, reading from the database with Pandas' `read_sql` function would work better.
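
A sketch of both suggestions, with a hypothetical in-memory sample and column names (`Date`, `Volume`) standing in for `data.csv`. First, `read_csv` is told which columns to load, their dtypes and which column holds dates. Then a filtered result is read back from SQLite with `pd.read_sql_query`. The filter value is arbitrary.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical sample; real code would pass "data.csv" instead of a StringIO.
SAMPLE = (
    "Date,Open,High,Low,Volume\n"
    "2020-01-02 09:30,10.0,10.5,9.8,100\n"
    "2020-01-02 09:31,10.1,10.6,9.9,200\n"
)

# Tuned read_csv: only the needed columns, explicit float dtypes, dates parsed once.
df = pd.read_csv(
    io.StringIO(SAMPLE),
    usecols=["Date", "Open", "High", "Low"],
    dtype={"Open": "float64", "High": "float64", "Low": "float64"},
    parse_dates=["Date"],
)

# Once the data is in a database, let SQL do the filtering and read only the result.
conn = sqlite3.connect(":memory:")
df.to_sql("prices", conn, index=False)
subset = pd.read_sql_query(
    "SELECT Date, Low FROM prices WHERE Low <= ?",
    conn,
    params=(9.85,),
    parse_dates=["Date"],
)
```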