I have the following cluster, and it creates fine. I also want to add a kubernetes_namespace resource so that a namespace gets created after the private cluster is recreated. How do I get Terraform to actually connect to the private cluster after it is created?

resource "azurerm_kubernetes_cluster" "aks_cluster" {
  for_each = var.aks_clusters

  name                                = "aks-${each.key}-${var.env}-001"
  location                            = azurerm_resource_group.aks_rg.location
  resource_group_name                 = azurerm_resource_group.aks_rg.name
  dns_prefix                          = "test-${each.key}-aks-cluster"
  kubernetes_version                  = data.azurerm_kubernetes_service_versions.current.latest_version
  private_cluster_enabled             = true # false until networking is complete
  private_cluster_public_fqdn_enabled = true

  #
  # - Name must start with a lowercase letter, have max length of 12,
  #   and only have characters a-z0-9.
  #
  default_node_pool {
    name                 = substr("test${each.key}", 0, 12)
    vm_size              = var.aks_cluster_vm_size
    os_disk_size_gb      = var.aks_cluster_os_size_gb
    orchestrator_version = data.azurerm_kubernetes_service_versions.current.latest_version
    availability_zones   = [1, 2]
    enable_auto_scaling  = true
    max_count            = var.node_max_count
    min_count            = var.node_min_count
    node_count           = var.node_count
    type                 = "VirtualMachineScaleSets"
    vnet_subnet_id       = var.aks_subnets[each.key].id

    node_labels = {
      "type"        = each.key
      "environment" = var.env
    }

    tags = {
      "type"        = each.key
      "environment" = var.env
    }
  }

  network_profile {
    network_plugin     = "kubenet"
    pod_cidr           = var.aks_subnets[each.key].pcidr
    service_cidr       = var.aks_subnets[each.key].scidr
    docker_bridge_cidr = var.aks_subnets[each.key].dockcidr
    dns_service_ip     = var.aks_subnets[each.key].dnsip
  }

  service_principal {
    client_id     = var.aks_app_id
    client_secret = var.aks_password
  }

  role_based_access_control {
    enabled = true
  }

  tags = local.resource_tags
}

CodePudding user response:

Run Terraform from a private build agent in the same VNet or in a peered VNet. The Terraform state should be stored in a blob container or any other remote location you prefer, just not locally.
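As a rough illustration of keeping the state in a blob container, a backend block along these lines could be used. This is only a sketch: the resource group, storage account, container, and key names are hypothetical placeholders, and the storage account and container are assumed to exist already and to be reachable from the build agent.

```hcl
terraform {
  # Remote state in an Azure Storage blob container instead of a local file.
  # All four values below are hypothetical placeholders.
  backend "azurerm" {
    resource_group_name  = "tfstate-rg"
    storage_account_name = "tfstatestorage001"
    container_name       = "tfstate"
    key                  = "aks.terraform.tfstate"
  }
}
```

After adding or changing a backend block, terraform init has to be run again so that Terraform can configure the backend.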

CodePudding user response:

If you are trying to do this from a local machine, it is not possible: with a private AKS cluster, the Terraform client cannot resolve the API server's IP address or send REST calls to it when the two are on different networks. The best option is to install the Terraform client on a machine in the network where you want to deploy the AKS cluster, or in a connected network. In either case, the network has to be created before you attempt to create the cluster. The reason is that when Terraform creates a namespace in an AKS cluster, the provider is no longer azurerm; the kubernetes provider accesses the API server directly (it does not send a REST API call to ARM the way az aks command invoke does).

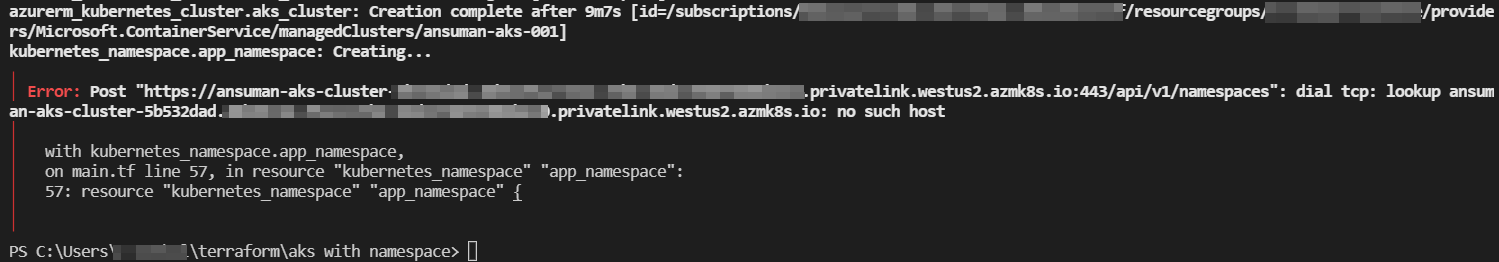

Error from a local machine for a private cluster:

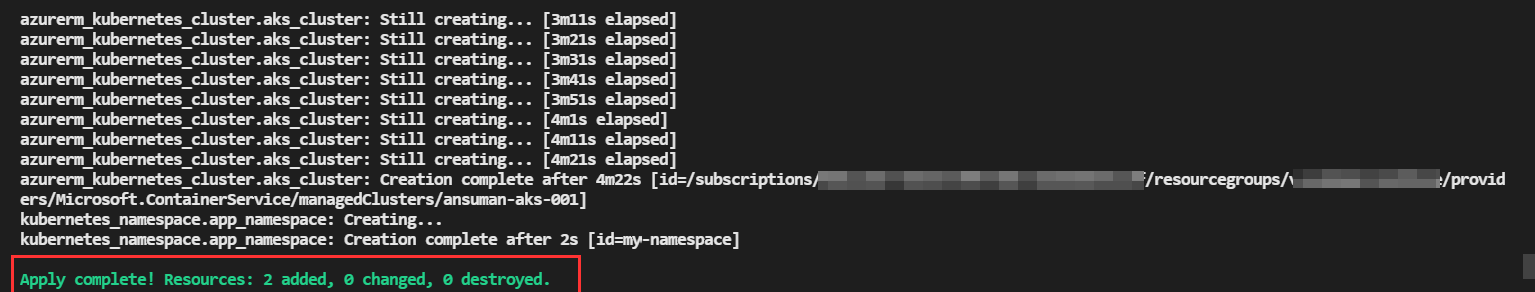

It does work for a public cluster:

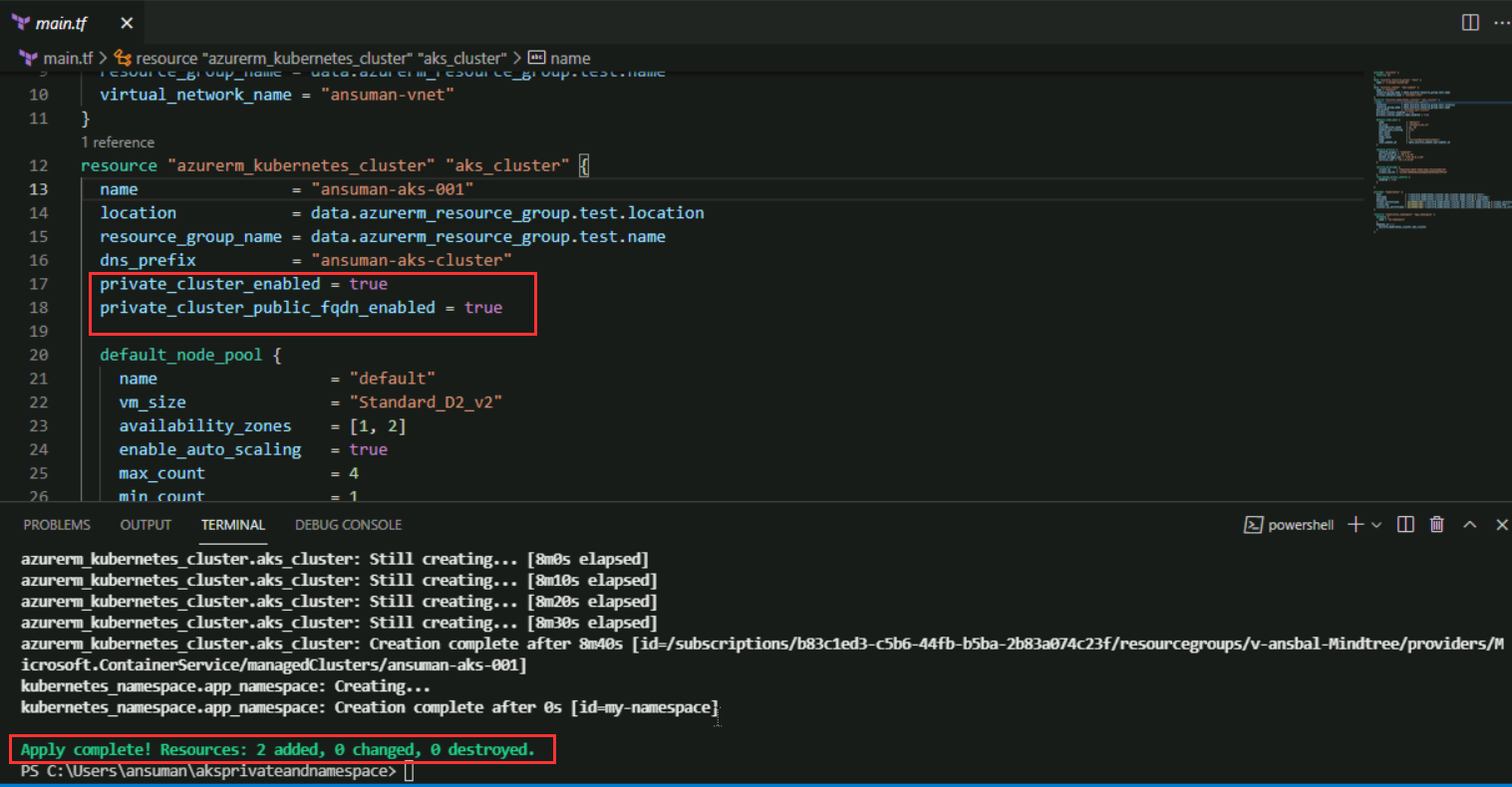

So, as a workaround, you can create a VM in the same VNet that you will use for the AKS cluster and run the Terraform script from inside that VM.

provider "azurerm" {
  features {}
}

# Existing resource group and subnet that the cluster is deployed into.
data "azurerm_resource_group" "test" {
  name = "resourcegroup"
}

data "azurerm_subnet" "aks-subnet" {
  name                 = "default"
  resource_group_name  = data.azurerm_resource_group.test.name
  virtual_network_name = "ansuman-vnet"
}

resource "azurerm_kubernetes_cluster" "aks_cluster" {
  name                                = "ansuman-aks-001"
  location                            = data.azurerm_resource_group.test.location
  resource_group_name                 = data.azurerm_resource_group.test.name
  dns_prefix                          = "ansuman-aks-cluster"
  private_cluster_enabled             = true
  private_cluster_public_fqdn_enabled = true

  default_node_pool {
    name                = "default"
    vm_size             = "Standard_D2_v2"
    availability_zones  = [1, 2]
    enable_auto_scaling = true
    max_count           = 4
    min_count           = 1
    node_count          = 2
    type                = "VirtualMachineScaleSets"
    vnet_subnet_id      = data.azurerm_subnet.aks-subnet.id
  }

  network_profile {
    network_plugin     = "kubenet"
    dns_service_ip     = "10.1.0.10"
    docker_bridge_cidr = "170.10.0.1/16"
    service_cidr       = "10.1.0.0/16"
  }

  service_principal {
    client_id     = "f6a2f33d-xxxxxx-xxxxxx-xxxxxxx-xxxxxx"
    client_secret = "mLk7Q~2S6D1Omoe1xxxxxxxxxxxxxxxxxxxxx"
  }

  role_based_access_control {
    enabled = true
  }
}

# The kubernetes provider talks to the cluster's API server directly,
# using the credentials exported by the cluster resource.
provider "kubernetes" {
  host                   = azurerm_kubernetes_cluster.aks_cluster.kube_config[0].host
  username               = azurerm_kubernetes_cluster.aks_cluster.kube_config[0].username
  password               = azurerm_kubernetes_cluster.aks_cluster.kube_config[0].password
  client_certificate     = base64decode(azurerm_kubernetes_cluster.aks_cluster.kube_config[0].client_certificate)
  client_key             = base64decode(azurerm_kubernetes_cluster.aks_cluster.kube_config[0].client_key)
  cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.aks_cluster.kube_config[0].cluster_ca_certificate)
}

resource "kubernetes_namespace" "app_namespace" {
  metadata {
    name = "my-namespace"
  }

  # Create the namespace only after the cluster exists.
  depends_on = [
    azurerm_kubernetes_cluster.aks_cluster
  ]
}

Output:

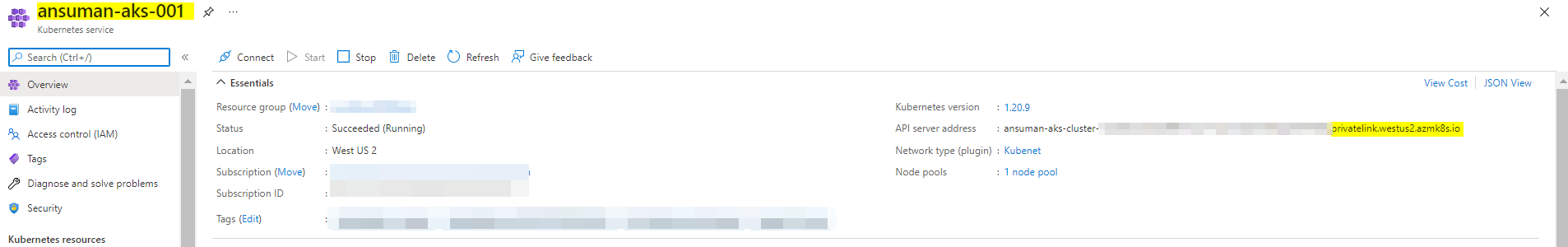

Note: To verify that the namespace was added, open the portal from the same VM, since it is on the same network as the cluster.
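The cluster in the question uses for_each, so its kube_config has to be addressed per cluster key. As far as I know, a provider block in Terraform cannot itself use for_each, so one possible approach is a provider alias per cluster. The sketch below assumes that var.aks_clusters contains a key named "app"; that key and the alias name are hypothetical and only serve as an illustration.

```hcl
# Assumption: var.aks_clusters has a key "app" (hypothetical).
# One aliased kubernetes provider is declared per cluster key.
provider "kubernetes" {
  alias = "app"

  host                   = azurerm_kubernetes_cluster.aks_cluster["app"].kube_config[0].host
  client_certificate     = base64decode(azurerm_kubernetes_cluster.aks_cluster["app"].kube_config[0].client_certificate)
  client_key             = base64decode(azurerm_kubernetes_cluster.aks_cluster["app"].kube_config[0].client_key)
  cluster_ca_certificate = base64decode(azurerm_kubernetes_cluster.aks_cluster["app"].kube_config[0].cluster_ca_certificate)
}

resource "kubernetes_namespace" "app_namespace" {
  # Select the aliased provider that points at the "app" cluster.
  provider = kubernetes.app

  metadata {
    name = "my-namespace"
  }
}
```

This still has to run from a machine that can reach the private API server, such as the VM or build agent described above.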

For more information on how to connect to a private AKS cluster, refer to the link below.

Reference:

Create a private Azure Kubernetes Service cluster - Azure Kubernetes Service | Microsoft Docs