I have a requirement where I need to point our DEV Azure Data Factory to a Production Azure SQL database and also have the ability to switch the data source back to the Dev database should we need to.

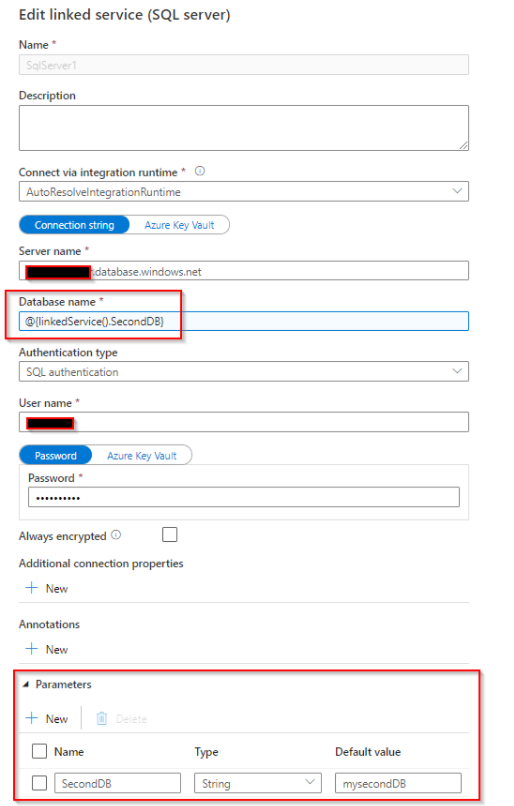

I've been looking at creating parameters against the linked services, but I'm unsure of the best approach.

Should I create parameters as follows and choose the relevant parameters depending on the environment I want to pull data from?

DevFullyQualifiedDomainName

ProdFullyQualifiedDomainName

DevDatabaseName

ProdDatabaseName

DevUserName

ProdUserName

Thanks

CodePudding user response:

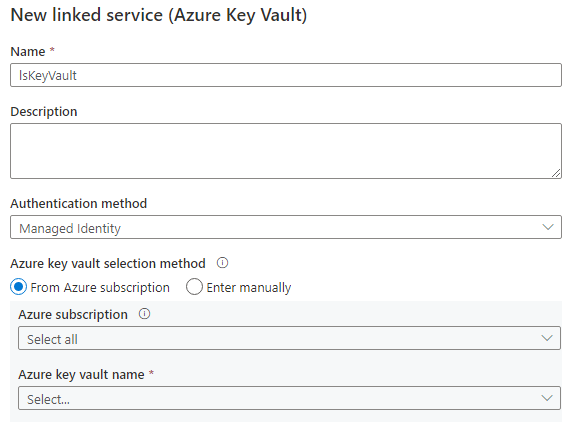

I would suggest using Azure Key Vault for that.

Create an Azure Key Vault for each environment (dev, prod, etc.)

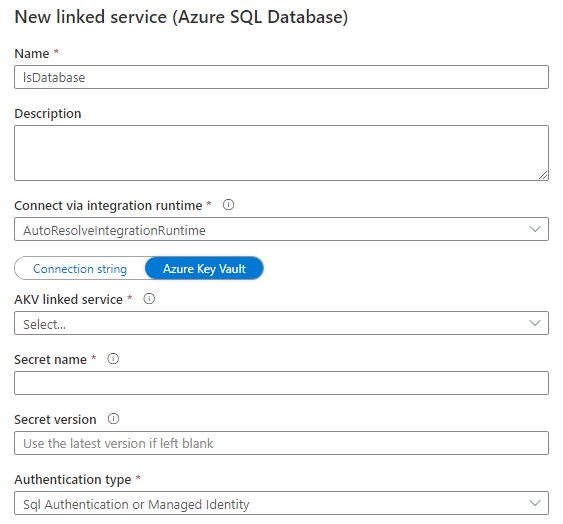

Create secrets inside both key vaults with the same name but different values. For example, for the database server name, create the same secret "database-server" in both dev and prod key vaults but with the correct value representing the connection string of the dev and prod server respectively, in the following format:

integrated security=False;encrypt=True;connection timeout=30;data source=<serverName>.database.windows.net;initial catalog=<databaseName>;user id=<userName>;password=<loginPassword>In your Azure Data Factory, create a Key Vault linked service pointing to your key vault.

In your Azure Data Factory, create a new Azure SQL Database linked service selecting the Key Vault created in step 1 and the secret created in step 2.

Now you can easily switch between dev and prod by simply adjusting your Key Vault linked service to point to the desired environment.
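To make the switch concrete, here is a rough sketch of what the two linked service definitions might look like in JSON, built with Python so the structure is easy to inspect. The names `KeyVaultLS`, `AzureSqlLS`, `kv-myproject-dev` and `kv-myproject-prod` are made up for illustration, and the layout follows my understanding of the ADF linked service schema, so compare it with the JSON your own factory generates before relying on it.

```python
import json


def key_vault_linked_service(name: str, vault_name: str) -> dict:
    """Key Vault linked service; only baseUrl differs between environments."""
    return {
        "name": name,
        "properties": {
            "type": "AzureKeyVault",
            "typeProperties": {
                "baseUrl": f"https://{vault_name}.vault.azure.net"
            },
        },
    }


def azure_sql_linked_service(name: str, key_vault_ls: str, secret_name: str) -> dict:
    """Azure SQL linked service whose connection string is read from a secret."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                "connectionString": {
                    "type": "AzureKeyVaultSecret",
                    "store": {
                        "referenceName": key_vault_ls,
                        "type": "LinkedServiceReference",
                    },
                    "secretName": secret_name,
                }
            },
        },
    }


# Hypothetical vault names; the linked service name stays the same in both cases.
dev_vault = key_vault_linked_service("KeyVaultLS", "kv-myproject-dev")
prod_vault = key_vault_linked_service("KeyVaultLS", "kv-myproject-prod")

# The SQL linked service never changes: it only knows the secret name.
sql_ls = azure_sql_linked_service("AzureSqlLS", "KeyVaultLS", "database-server")

print(json.dumps(prod_vault, indent=2))
print(json.dumps(sql_ls, indent=2))
```

The point of the design is that the SQL linked service only refers to the secret name, so changing `baseUrl` on the Key Vault linked service is the single edit needed to move between dev and prod.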

Have fun ;)

Create a new parameter in the dataset and assign it to the matching linked service parameter. This dataset parameter will be used to hold the trigger data.
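As an illustration of that wiring, the sketch below builds a parameterized linked service and a dataset that forwards its own parameters to it. The parameter names `ServerName` and `DatabaseName` and the resource names are hypothetical, credentials are deliberately left out of the connection string, and the JSON shape reflects my understanding of ADF's `@{linkedService().…}` and `@dataset().…` expressions rather than an export from a real factory.

```python
import json

# Hypothetical parameter names shared by the linked service and the dataset.
PARAMS = ["ServerName", "DatabaseName"]

# Linked service: the connection string is assembled from its own parameters.
# Authentication settings are omitted here on purpose.
linked_service = {
    "name": "AzureSqlLS",
    "properties": {
        "type": "AzureSqlDatabase",
        "parameters": {p: {"type": "String"} for p in PARAMS},
        "typeProperties": {
            "connectionString": (
                "Data Source=@{linkedService().ServerName};"
                "Initial Catalog=@{linkedService().DatabaseName};"
                "Encrypt=True;Connection Timeout=30"
            )
        },
    },
}

# Dataset: declares the same parameters and passes them down to the linked service.
dataset = {
    "name": "SqlTableDataset",
    "properties": {
        "type": "AzureSqlTable",
        "parameters": {p: {"type": "String"} for p in PARAMS},
        "linkedServiceName": {
            "referenceName": linked_service["name"],
            "type": "LinkedServiceReference",
            "parameters": {
                p: {"value": f"@dataset().{p}", "type": "Expression"}
                for p in PARAMS
            },
        },
    },
}

print(json.dumps(dataset, indent=2))
```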

A custom event trigger can parse a custom data payload and deliver it to your pipeline. You define the pipeline parameters and then populate their values on the Parameters page. To parse the data payload and provide values to the pipeline parameters, use the format @triggerBody().event.data.keyName, where keyName is the name of a key in the event's data payload.
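To show how such a path maps onto an event, here is a small local emulation in Python. It does not call Data Factory at all: `resolve` is a stand-in I wrote for illustration, the sample event and its keys (`serverName`, `databaseName`) are invented, and the pipeline parameter names are hypothetical.

```python
from functools import reduce

# Invented sample event; only the "data" payload matters for this sketch.
event = {
    "id": "1",
    "subject": "switch-environment",
    "eventType": "EnvironmentSwitch",
    "data": {
        "serverName": "myserver-prod.database.windows.net",
        "databaseName": "SalesDb",
    },
}

# What you would type on the trigger's Parameters page (hypothetical names).
parameter_mapping = {
    "ServerName": "@triggerBody().event.data.serverName",
    "DatabaseName": "@triggerBody().event.data.databaseName",
}

PREFIX = "@triggerBody()."


def resolve(expression: str, trigger_body: dict):
    """Local stand-in for expression evaluation: walk the dotted path."""
    if not expression.startswith(PREFIX):
        raise ValueError(f"unsupported expression: {expression}")
    path = expression[len(PREFIX):].split(".")
    return reduce(lambda node, key: node[key], path, trigger_body)


# The trigger body wraps the event under the "event" key.
trigger_body = {"event": event}
pipeline_parameters = {
    name: resolve(expr, trigger_body) for name, expr in parameter_mapping.items()
}

print(pipeline_parameters)
```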

For details, see the official Microsoft documentation:

Reference trigger metadata in pipelines

System variables in custom event trigger

When you use the dataset as a source in a pipeline activity, you will be prompted for a value for the dataset parameter. Use dynamic content there and choose the pipeline parameter that contains the trigger data.