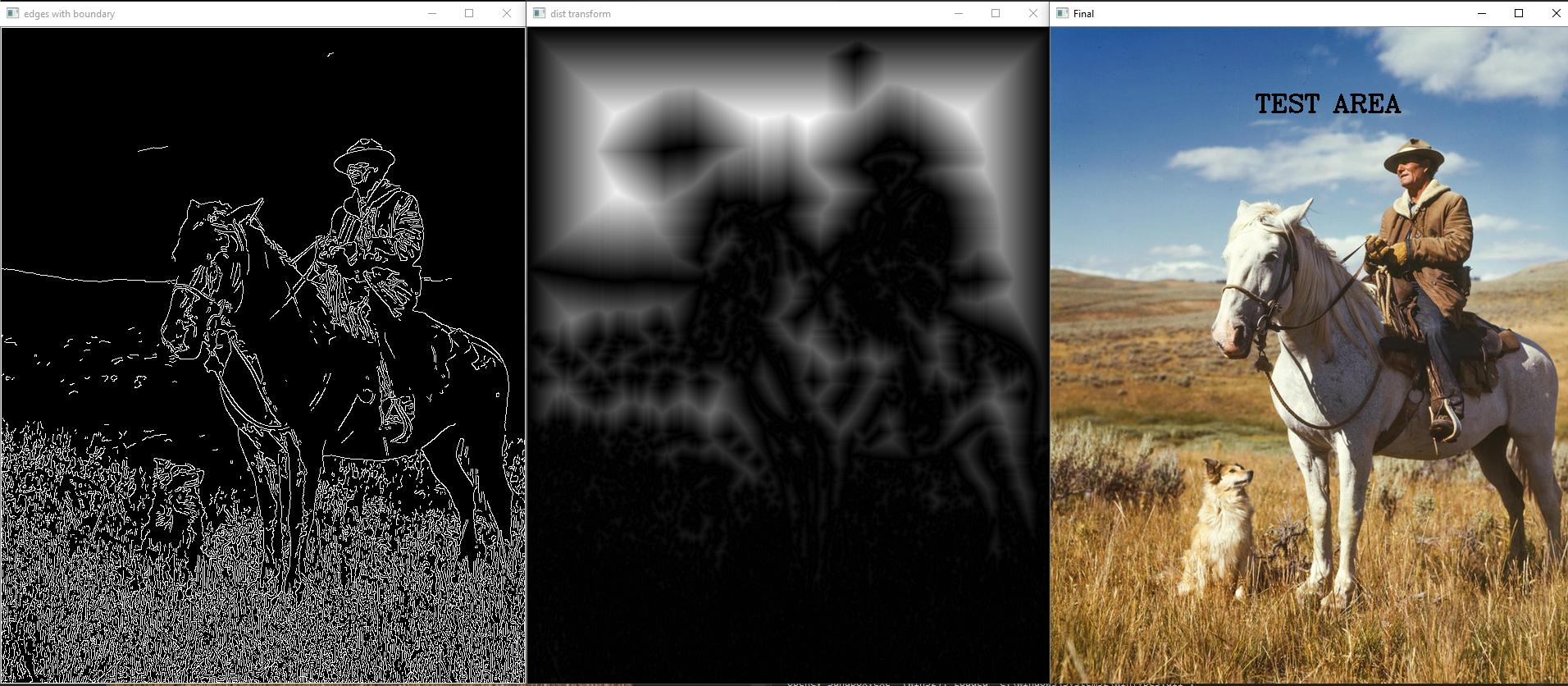

Run a distance transform on your black area mask. Take the highest value from that distance transform and use its location as the center of your text. (You will want to draw a border around the image so that the distance transform also pushes away from the image boundaries.) This finds the point in the black area that is furthest from any non-background pixel. You can use OpenCV's `minMaxLoc` function to find the location of the highest value after the distance transform.
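If it helps to see what `distanceTransform(..., DIST_L1, 3)` followed by `minMaxLoc` actually computes, here is a minimal, dependency-free sketch. It is not OpenCV's implementation, just the classic two-pass city-block distance transform on a boolean grid. It treats the outermost ring of pixels as edges (the same effect as drawing the border rectangle) and then returns the location of the largest distance. The names `Grid`, `l1DistanceTransform` and `farthestPoint` are made up for illustration.

```cpp
#include <algorithm>
#include <climits>
#include <utility>
#include <vector>

// Cells marked true are "edge" (non-background) pixels.
using Grid = std::vector<std::vector<bool>>;

// Hypothetical helper: city-block (L1) distance from every cell to the nearest edge cell.
// The outermost ring is treated as edge, like the border rectangle drawn in the demo code,
// so the result also pushes away from the image boundaries.
std::vector<std::vector<int>> l1DistanceTransform(const Grid& edges)
{
    const int rows = static_cast<int>(edges.size());
    const int cols = rows ? static_cast<int>(edges[0].size()) : 0;
    const int INF = INT_MAX / 2; // large enough, and +1 cannot overflow
    std::vector<std::vector<int>> d(rows, std::vector<int>(cols, INF));

    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            bool border = (y == 0 || x == 0 || y == rows - 1 || x == cols - 1);
            if (edges[y][x] || border) d[y][x] = 0;
        }

    // Forward pass: propagate distances from the top and left neighbours.
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            if (y > 0) d[y][x] = std::min(d[y][x], d[y - 1][x] + 1);
            if (x > 0) d[y][x] = std::min(d[y][x], d[y][x - 1] + 1);
        }

    // Backward pass: propagate distances from the bottom and right neighbours.
    for (int y = rows - 1; y >= 0; --y)
        for (int x = cols - 1; x >= 0; --x) {
            if (y < rows - 1) d[y][x] = std::min(d[y][x], d[y + 1][x] + 1);
            if (x < cols - 1) d[y][x] = std::min(d[y][x], d[y][x + 1] + 1);
        }
    return d;
}

// Hypothetical helper playing the role of minMaxLoc's maxLoc: returns (x, y) of the
// largest distance (first one in row-major order on ties) and writes the value to maxVal.
std::pair<int, int> farthestPoint(const std::vector<std::vector<int>>& d, int* maxVal)
{
    std::pair<int, int> best{0, 0};
    int bestVal = -1;
    for (int y = 0; y < static_cast<int>(d.size()); ++y)
        for (int x = 0; x < static_cast<int>(d[y].size()); ++x)
            if (d[y][x] > bestVal) { bestVal = d[y][x]; best = {x, y}; }
    if (maxVal) *maxVal = bestVal;
    return best;
}
```

For example, on an empty 9×9 grid the farthest point is the center (4, 4), with a distance of 4 to the border.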

This will place the text anywhere on the picture, based purely on your background mask and the image edges. If you want to enforce some kind of limit (e.g. background, but only in the top left of the screen), modify your mask accordingly before inverting it and running the distance transform: add arbitrary positive edge pixels or filled shapes over the regions you want to exclude.

Some liberties I took with your code: I ran Canny directly on the color image because, as far as the sky is concerned, it had better contrast properties than the grayscale version. I also removed your closing morphology. I understand why you wanted it (to close shapes), but since I'm using a distance transform, that step is unnecessary.

One additional step, depending on your application: keep the value of the max point as well as its location (make sure not to normalize the distance map first). If that value is less than half the largest dimension of your text (probably its width), the text will clip into objects and/or the image edge, and you may want to throw an error or change the text size.

Here is the demonstration code that produced the images above:

```cpp
#include <iostream>
#include <string>

#include <opencv2/opencv.hpp>

using namespace cv;

int main(int argc, char** argv)
{
    bool debugFlag = true;

    std::string path = "C:/Local Software/voyDICOM/resources/images/cowboyPic.jpg";
    if (argc > 1) { path = argv[1]; } // optionally pass a different image on the command line

    Mat originalImg = imread(path, IMREAD_COLOR);
    if (originalImg.empty())
    {
        std::cerr << "Could not read image: " << path << std::endl;
        return 1;
    }

    if (debugFlag) { imshow("original", originalImg); }

    Mat edged;
    Canny(originalImg, edged, 150, 200); // Canny runs just fine on color images (often better than grayscale, depending on the image)

    if (debugFlag) { imshow("edges", edged); }

    // Set boundary pixels so the text doesn't clip into the image edge
    rectangle(edged, Point(0, 0), Point(edged.cols - 1, edged.rows - 1), Scalar(255), 1);

    if (debugFlag) { imshow("edges with boundary", edged); }

    // distanceTransform measures the distance to the nearest zero pixel, so the edges must become zeros
    Mat inverted;
    bitwise_not(edged, inverted);

    Mat distMap;
    distanceTransform(inverted, distMap, DIST_L1, 3);

    if (debugFlag)
    {
        // Normalize a copy for visualization only, so distMap keeps real pixel distances
        // (needed if you want to enforce a fail case when there isn't enough room at the best point)
        Mat distVis;
        normalize(distMap, distVis, 0, 1.0, NORM_MINMAX);
        imshow("dist transform", distVis);
    }

    Point maxPt, minPt;
    double maxVal, minVal;
    minMaxLoc(distMap, &minVal, &maxVal, &minPt, &maxPt);

    // Measure the text so it can be centered on maxPt
    double fontScale = 1;
    int fontThick = 2;
    std::string sampleText = "TEST AREA";
    int baseline = 0;
    Size textSize = getTextSize(sampleText, FONT_HERSHEY_COMPLEX, fontScale, fontThick, &baseline);

    // putText's origin is the bottom-left corner of the text, so shift left by half the width and down by half the height
    Point textOrg(maxPt.x - textSize.width / 2, maxPt.y + textSize.height / 2);

    putText(originalImg, sampleText, textOrg, FONT_HERSHEY_COMPLEX, fontScale, Scalar(0, 0, 0), fontThick);

    imshow("Final", originalImg);
    waitKey(0);
    return 0;
}
```