I have a dataframe where one column consists of tuples, i.e

df['A'].values = array([(1,2), (5,6), (11,12)])

Now I want to split this into two different columns. A working solution is

df['A1'] = df['A'].apply(lambda x: x[0])

But this is extremely slow. On my Dataframe it takes multiple minutes. So I would like to vectorize this, to something like

df['A1'] = df['A'][:,0]

With pandas, or using numpy or anything. But all of them give me an error similar to

"*** KeyError: 'key of type tuple not found and not a MultiIndex'"

Is there any vectorized way? This feels like a super simple question and task but i cannot find any working and properly vectorized function.

CodePudding user response:

n: int = 2

df = pd.DataFrame(df["A"].apply(lambda x: (x[:n], x[n:])).tolist(), index=df.index)

you can have a look into pandarallel also.

CodePudding user response:

Try this:

>>> df['A1'] = df['A'].str[0]

Or:

>>> df['A1'] = [dan[0] for dan in df['A'].to_numpy()]

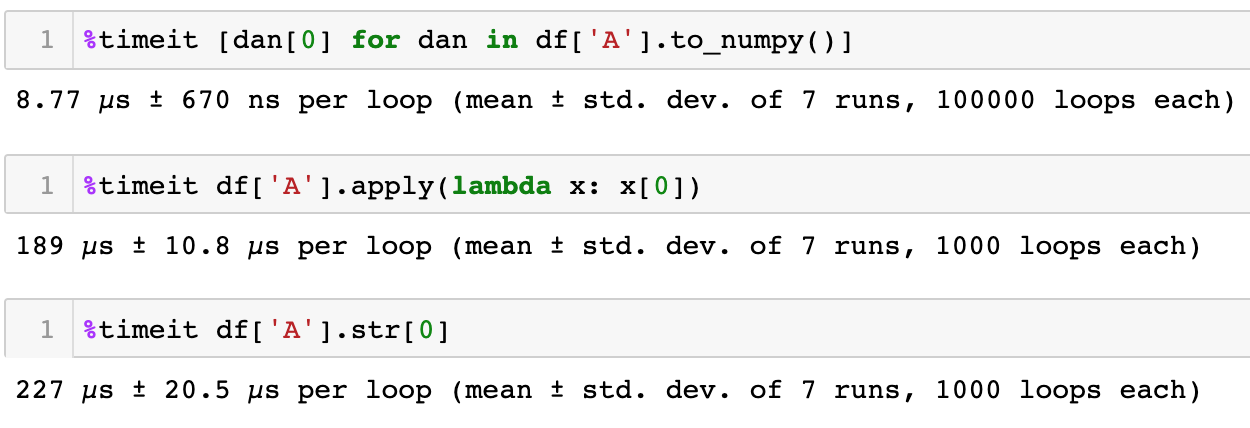

Check runtime:

CodePudding user response:

I'll do it in numpy and skip over the pandas bits.

You can get a decent speedup using np.fromiter together with either itertools.chain.from_iterable to extract everything in one go or operator.itemgetter for individual columns.

import operator as op

import itertools as it

a = [*zip(range(10000),range(10000,20000))]

A = np.empty(10000,object)

A[...] = a

A

# array([(0, 10000), (1, 10001), (2, 10002), ..., (9997, 19997),

# (9998, 19998), (9999, 19999)], dtype=object)

(*np.fromiter(it.chain.from_iterable(A),int,len(A[0])*A.size).reshape(A.size,-1).T,)

# (array([ 0, 1, 2, ..., 9997, 9998, 9999]), array([10000, 10001,

# 10002, ..., 19997, 19998, 19999]))

np.fromiter(map(op.itemgetter(0),A),int,A.size)

# array([ 0, 1, 2, ..., 9997, 9998, 9999])