i am working on land marks detection and to football fields from camera

so i build a neural network but i get a very low accuracy and high loss

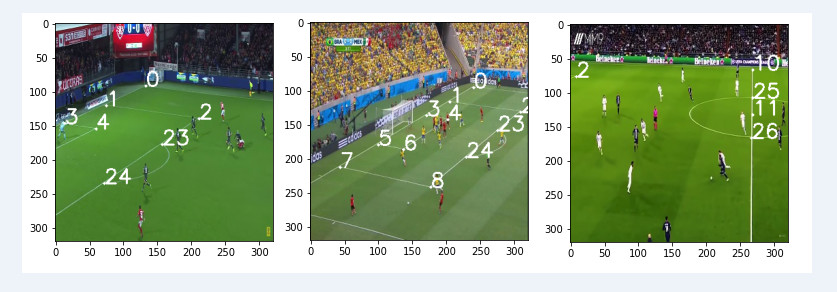

x data is football matches images taken from camera

y data is coordinates of 29 land marks across the field

link for the dataset:

x shape (565, 320, 320, 3)

y shape (565, 29, 2)

every land mark have a two values x,y and land marks that is not in the image take -1

example :

y[0]=array([[ 72., 133.],[ 39., 148.],[122., 154.],

[ 3., 163.],

[ 33., 166.],

[ -1., -1.],

[ -1., -1.],......])

NOTE:

i did normalize for( x => /255 , y => /320) to make the data between 0-1 for x and (-1,0-1) for y

model :

input = tf.keras.layers.Input((320,320,3))

l = tf.keras.layers.Conv2D(128,(5,5),padding='same')(input)

l=tf.keras.layers.BatchNormalization()(l)

l=tf.keras.layers.LeakyReLU()(l)

l=tf.keras.layers.MaxPool2D()(l)

l = tf.keras.layers.Conv2D(64,(5,5),padding='same')(l)

l=tf.keras.layers.BatchNormalization()(l)

l=tf.keras.layers.LeakyReLU()(l)

l=tf.keras.layers.MaxPool2D()(l)

l = tf.keras.layers.Conv2D(32,(5,5),padding='same')(l)

l=tf.keras.layers.BatchNormalization()(l)

l=tf.keras.layers.LeakyReLU()(l)

l=tf.keras.layers.MaxPool2D()(l)

l=tf.keras.layers.Flatten()(l)

l=tf.keras.layers.Dense(256,activation='tanh')(l)

l=tf.keras.layers.Dense(128,activation='tanh')(l)

l=tf.keras.layers.Dense(29*2,activation='tanh')(l)

CodePudding user response:

Your model does not really seem well suited to detect objects. For object detection, a more complex architecture is required. You may look at the YOLO object detector, which is a very popular object detection model. In this repository, there is a rather easy to use and easy to retrain implementation of YOLOv3 available: https://github.com/YunYang1994/TensorFlow2.0-Examples

There are many reasons, why your model will not perform well for object detection. One reason is, that you seem to always predict exactly 29 landmarks, even if there are less objects in the image. Additionally, it is quite hard for the network to determine, which landmark to place at which position in your output, since your output does not preserve the structure of the image and presents the final landmark predictions in an unordered way. This introduces the problem of how to train the model, since you need to assign a landmark to a specific network output to train it. Models like YOLO work differently, as they predict if there is an object at many, regularly spaced, positions in the image, in combination with a score that tells you, how confident a detection is. This solves the problems I just described and should be a good output for your model.

If you do not want to use another repository and instead want to create a new model from scratch, a simple approach might look like this: Do not use dense layers at the end of the model. Instead, use convolutional layers, and additionally use smaller strides in the earlier layers. If you, for example, only use strides of (2, 2) and only use strides two times, this should give you (at some stage in your network) convolutional layers with a shape of (90, 90, n), with n being the number of filters in the convolutional layer. Now you could just set n=1, which basically gives you a result that gives you a 90x90 array of values, that are evenly spread over your image. Then you could train the model, to use these evenly spaced values to predict the probability of an object being at the current location, so that a value of over 0.5 will mean that an object was detected at the corresponding position.