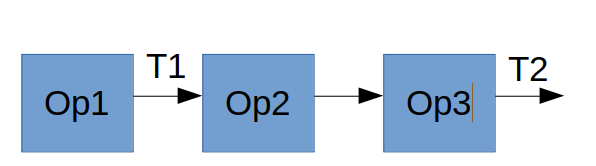

Given the graph above, how can I get the values of both tensors T1 and T2 in TensorFlow without eager execution? I only know of eval(), session.run() (calling it twice would be an option), and tf.print(), but printing is undesirable for performance reasons.

In particular, how is this functionality implemented in TensorFlow? Does fetching both tensors add much overhead compared with fetching only T2? I would be happy to be pointed to relevant resources as well.

I'm generally looking for discussion on this. If people want to add comparisons with how other frameworks handle it (Caffe, Torch, CNTK, Theano, Chainer, DyNet, etc.), that's great! In the end, I am trying to understand how these frameworks could be extended with operators that return operator-specific metrics that a user can monitor during training.

Thanks!

CodePudding user response:

You can pass a list of tensors to session.run; it runs the graph once and returns the value of each tensor in the list.

For example (from the docs):

    a = tf.constant([10, 20])
    b = tf.constant([1.0, 2.0])
    u, v = session.run([a, b])
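Applied to the question, a minimal sketch might look like the following. The graph in the image is not reproduced here, so the ops below (a square followed by a sum) are hypothetical stand-ins for whatever produces T1 and T2; the only assumption that matters is that T2 depends on T1. The sketch uses tf.compat.v1 so that it builds a graph rather than running eagerly.

```python
import tensorflow as tf

tf1 = tf.compat.v1  # graph-mode (non-eager) API

graph = tf.Graph()
with graph.as_default():
    # Hypothetical stand-ins for the graph in the question.
    x = tf1.placeholder(tf.float32, shape=[None, 2], name="x")
    t1 = tf.square(x, name="T1")               # intermediate tensor
    t2 = tf.reduce_sum(t1, axis=1, name="T2")  # computed from T1

with tf1.Session(graph=graph) as session:
    # One call, two fetches: both values come from a single execution.
    t1_val, t2_val = session.run(
        [t1, t2], feed_dict={x: [[1.0, 2.0], [3.0, 4.0]]}
    )

print(t1_val)
print(t2_val)
```

Both results come back as NumPy arrays. Because T1 already has to be computed to produce T2, fetching it in the same call mainly adds the cost of copying its value out of the runtime, whereas two separate session.run calls would execute the shared part of the graph twice.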