I am making a call to a REST API with Azure Synapse and the return dataset looks something like this:

{

"links": [

{

"rel": "next",

"href": "[myRESTendpoint]?limit=1000&offset=1000"

},

{

"rel": "last",

"href": "[myRESTendpoint]?limit=1000&offset=60000"

},

{

"rel": "self",

"href": "[myRESTendpoint]"

}

],

"count": 1000,

"hasMore": true,

"items": [

{

"links": [],

"closedate": "6/16/2014",

"id": "16917",

"number": "62000",

"status": "H",

"tranid": "0062000"

},...

],

"offset": 0,

"totalResults": 60316

}

I am familiar with making a REST call to a single endpoint that can return all the the data with a single call using a Synapse pipeline, but this particular REST endpoint has a hard limit on only returning 1000 records, but it does give a property named "hasMore".

Is there a way to recursively make rest calls in a Synapse pipeline until the "hasMore" property equals false?

The end goal of this is to sink data to either a dedicated SQL pool or into ADLS2 and transform from there.

CodePudding user response:

I have tried to achieve the same scenario using Azure Data Factory which seems to be more appropriate and easy to achieve the goal "The end goal of this is to sink data to either a dedicated SQL pool or into ADLS2 and transform from there".

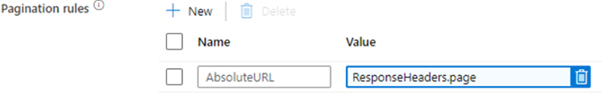

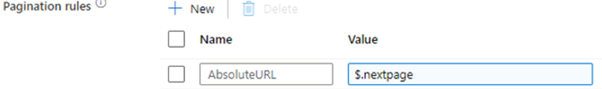

As you have to hit the page recursively to fetch 1000 records , you might set it in the following fashion if the response header/response body contain the URL for the next page.

You're less likely to be able to use the functionality if the next page link or query parameter isn't included in the response headers/body.

Alternatively, you may utilise loop logic and do the Copy Activity.

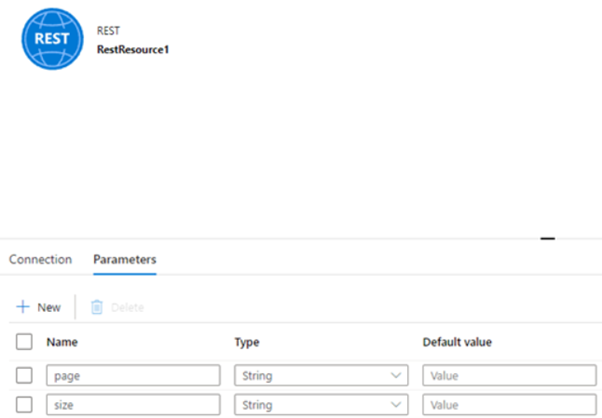

Create two parameters in the Rest Connector:

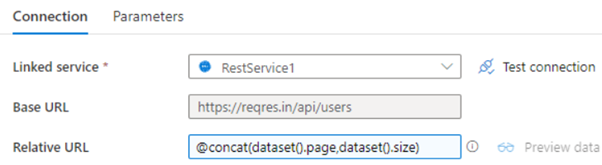

Fill in the parameters for the RestConnector's relative URL.

Using the Set Variable action, the value of this variable would be increased in a loop. For each cycle, the URL for the Copy Activity is dynamically set.If you want to loop or iterate, you may use the Until activity.

Alternative:

In my experience, the REST connection pagination is quite rigid. Usually put the action within a loop. As a result, to have more control. FOREACH Loop, here

CodePudding user response:

For those following the thread, I used IpsitaDash-MT's suggestion using the ForEach loop. In the case of this API, when a call is made I get a property returned at the end of the call named "totalResults". Here are the steps I used to achieve what I was looking to do:

- Make a dummy call to the API to get the "totalResults" parameter. This is just a call to return the number of results I am looking to get. In the case of this API, the body of the request is a SQL statement, so when the dummy request is made I am only asking for the ID's of the results I am looking to get.

I then take the property "totalResults" from that request set a dynamic value in the "Items" of the ForEach loop like this:

@range(0,add(div(sub(int(activity('Get Pages Customers').output.totalResults),mod(int(activity('Get Pages Customers').output.totalResults),1000)),1000),1))

NOTE: The API only allows pages of 1000 results, I do some math to get a range of page numbers. I also have to add 1 to the final result to include the last page.

- In the API I have two parameters that can be passed "limit" and "offset". Since I want all of the data there is no reason to have limit set to anything other than 1000 (the max allowable number). The offset parameter can be set to any number less than or equal to "totalResults" - "limit" and greater than or equal to 0. So I use the range established in step 2 and multiply it out by 1000 to set the offset parameter in the URL.

Setting the offset parameter in the copy data activity

Dynamic value of the Relative URL in the REST connector

NOTE: I found it better to sink the data as JSON into ADLS2 first rather than into a dedicated SQL pool due to the Lookup feature.

Since synapse does not allow nested ForEach loops, I run the data through a data flow to format the data and check for duplicates and updates.

When the data flow is completed it kicks off a lookup activity to get the data that was just processed and pass it into a new pipeline to use another ForEach loop to get the child data for each ID of parent data.