Sample code:

const string UtcTimeFormat = "yyyy-MM-ddTHH:mm:ss.fffZ";

public byte[] UtcTimeAscii => Encoding.ASCII.GetBytes(DateTime.UtcNow.ToString(UtcTimeFormat));

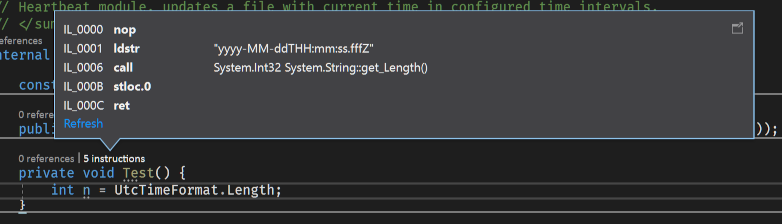

Here's what I got as IL for Length property: (Using Microscope VS add in)

Now, why is there a system call instead of a plain 24?

It's a constant string. Is there a way to tell the compiler that its length is known ahead of time?

I know I can define 24 as a const int, but that's not right, because there would be no direct binding between the actual string and its length. Of course I can just use the Length property, but I'm curious: is there a special syntax that enables this kind of optimization?

I also switched the configuration from Debug to Release but the system call remained.

CodePudding user response:

That optimization, if it is done at all, is done not by the C# compiler but by the JIT compiler at (well, just before) run time. The IL is generally not indicative of which optimizations happen, and it can never prove the absence of a given optimization.

So let's look at the resulting machine code that would actually run. You can do that in the debugger if you turn off "Suppress JIT optimization on module load" (otherwise you would see intentionally unoptimized machine code), or you can use sharplab.io, like this:

C.M()

L0000: mov eax, 0x18

L0005: ret

The result is clear: getting the length of a constant string was optimized to the constant length (0x18 is 24); no calls or even loads are involved. By the way, it would not have been a system call in any case; at most it would have been a normal call to a subroutine.
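For anyone who wants to reproduce this, here is a minimal sketch of the kind of snippet that could be pasted into sharplab.io. The names `C` and `M` are taken from the disassembly above, but the class body itself is an assumption, since the original snippet is not shown in the answer.

```csharp
using System;

public static class C
{
    // Same format string as in the question; it is 24 characters long.
    public const string UtcTimeFormat = "yyyy-MM-ddTHH:mm:ss.fffZ";

    // The C# compiler emits IL that loads the literal and calls String.get_Length.
    // The JIT is then free to fold that call into the constant 24 (0x18).
    public static int M() => UtcTimeFormat.Length;

    // Not allowed: String.Length is not a C# constant expression (error CS0133).
    // public const int Length = UtcTimeFormat.Length;
}
```

The commented-out line shows why there is no special syntax on the C# side: the language does not treat `Length` of a constant string as a compile-time constant, so the folding has to come from the JIT.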