I am struggling a bit to find the best approach to achieve this.

I am trying to build a fully automated process that uses Terraform to spin up a Microsoft SQL Server and a SQL Database and then, once all the infrastructure is in place, releases a `*.dacpac` against the SQL Database to create the tables and columns, and finally seeds some data into the database.

So far I am using Azure Pipelines for this, and this is my workflow:

- `terraform init`
- `terraform validate`
- `terraform apply`
- database script (to create the tables and columns)

The steps above work just fine and everything falls into place. Now I would like to add another step that seeds the database from a CSV or Excel file.
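One cloud-friendly option for the seeding step is to skip file-based bulk tools and insert the CSV rows from a small script over an ordinary database connection. This is a minimal sketch, assuming a Python step on the agent with the third-party `pyodbc` package installed, a CSV whose first row holds the column names, and a target table that already exists (created by the dacpac). The table name `Company` and its columns are hypothetical. `seed_table` works with any DB-API 2.0 connection that uses the `?` placeholder style, so the demo runs against an in-memory SQLite database instead of Azure SQL.

```python
from __future__ import annotations

import csv
import io
import sqlite3
from typing import Iterable, Sequence


def read_csv_rows(lines: Iterable[str], delimiter: str = ",") -> tuple[list[str], list[list[str]]]:
    """Return (header, data rows); the first CSV row is assumed to be the header."""
    reader = csv.reader(lines, delimiter=delimiter)
    header = next(reader)
    rows = [row for row in reader if row]  # skip completely blank lines
    return header, rows


def seed_table(conn, table: str, header: Sequence[str], rows: Sequence[Sequence[str]]) -> int:
    """Insert all rows with one parameterized statement via cursor.executemany.

    Uses the qmark ('?') paramstyle, which both pyodbc and sqlite3 accept.
    Table and column names are interpolated into the SQL text, so they must
    come from a trusted source (your own CSV header), never from user input.
    """
    columns = ", ".join(header)
    placeholders = ", ".join("?" for _ in header)
    sql = f"INSERT INTO {table} ({columns}) VALUES ({placeholders})"
    cursor = conn.cursor()
    cursor.executemany(sql, rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    # Against Azure SQL you would open the connection with pyodbc instead, e.g.
    # pyodbc.connect("Driver={ODBC Driver 17 for SQL Server};"
    #                "Server=tcp:<server>.database.windows.net,1433;"
    #                "Database=<database>;Uid=<user>;Pwd=<password>;Encrypt=yes;")
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE Company (Id INTEGER, Name TEXT)")
    header, rows = read_csv_rows(io.StringIO("Id,Name\n1,Contoso\n2,Fabrikam\n"))
    print(seed_table(conn, "Company", header, rows), "rows inserted")
```

For a real file, open it with `open(path, newline="", encoding="utf-8")` and pass the file object to `read_csv_rows`. Every value is sent as a string, so columns that need a specific type may require conversion first. With pyodbc, setting `cursor.fast_executemany = True` before `executemany` can speed up larger loads.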

I did some research on Google and read the Microsoft documentation. There are apparently several ways of doing this, from `BULK INSERT` to `bcp` and `sqlcmd`, but all of them are documented against a local server rather than a cloud one.
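As far as I know, `bcp` and `sqlcmd` are not tied to a local instance: both take the target through `-S`, so they can point at an Azure SQL Database by the logical server's fully qualified name, with SQL authentication through `-U`/`-P`. The helper below is a sketch that only assembles the `bcp ... in` argument list; the server name `myserver.database.windows.net` and the other example values are hypothetical. It passes `-t,` explicitly because in character mode (`-c`) bcp's default field terminator is a tab, and `-F 2` so that loading starts at the second row and a CSV header line is skipped.

```python
from __future__ import annotations


def build_bcp_import_args(
    table: str,
    data_file: str,
    server: str,
    database: str,
    user: str,
    password: str,
    field_terminator: str = ",",
    first_row: int = 2,
) -> list[str]:
    """Build the argument list for `bcp <table> in <data_file>`.

    For an Azure SQL Database, `server` is the logical server's FQDN,
    e.g. "myserver.database.windows.net" (hypothetical name).
    """
    return [
        "bcp", table, "in", data_file,
        "-S", server,
        "-d", database,
        "-U", user,
        "-P", password,
        "-c",                        # character (text) mode
        f"-t{field_terminator}",     # comma instead of bcp's default tab terminator
        "-F", str(first_row),        # first row to load; 2 skips a header line
    ]


if __name__ == "__main__":
    args = build_bcp_import_args(
        "Company", "Company.csv", "myserver.database.windows.net", "mydb", "usertest", "<password>"
    )
    print(args[:4])
```

On an agent where bcp is installed, the list can be passed to `subprocess.run(args, check=True)`.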

Can anyone advise me on how to achieve this with Azure Pipelines and a cloud SQL Database? Thank you so much for any hints.

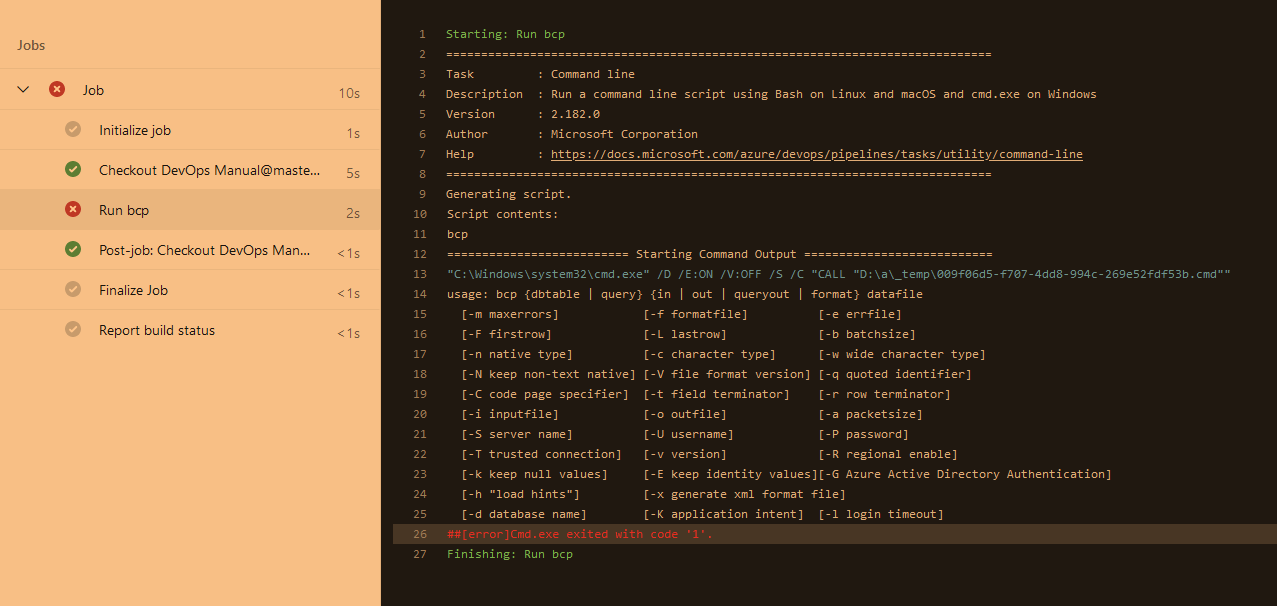

UPDATE: If I add a command-line script task to the release pipeline, I get the following error:

```
2021-11-12T17:38:39.1887476Z Generating script.
2021-11-12T17:38:39.2119542Z Script contents:
2021-11-12T17:38:39.2146058Z bcp Company in "./Company.csv" -c -t -S XXXXX -d XXXX -U usertest -P ***
2021-11-12T17:38:39.2885488Z ========================== Starting Command Output ===========================
2021-11-12T17:38:39.3505412Z ##[command]"C:\Windows\system32\cmd.exe" /D /E:ON /V:OFF /S /C "CALL "D:\a\_temp\33c62204-f40c-4662-afb8-862bbd4c42b5.cmd""
2021-11-12T17:38:39.7265213Z SQLState = S1000, NativeError = 0
2021-11-12T17:38:39.7266751Z Error = [Microsoft][ODBC Driver 17 for SQL Server]Unable to open BCP host data-file
```
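`Unable to open BCP host data-file` usually means bcp could not find or open the file at the path it was given. `./Company.csv` is resolved against the task's working directory, and in a release pipeline the artifacts are normally downloaded into a subfolder of `$(System.DefaultWorkingDirectory)` named after the artifact alias, so a bare relative path may not reach the file. Separately, the logged command passes `-t` with no terminator value; for a comma-separated file that would be `-t,`. The sketch below, assuming the CSV ships inside a build artifact, resolves the file to an absolute path and fails with a clear message before bcp is called. The `_drop/data` layout in the demo is a hypothetical artifact structure.

```python
from __future__ import annotations

import os
from pathlib import Path


def resolve_data_file(relative_path: str, base_dir: str | None = None) -> Path:
    """Return the absolute path of the data file, or raise if it does not exist.

    base_dir defaults to SYSTEM_DEFAULTWORKINGDIRECTORY, the environment variable
    through which Azure Pipelines exposes $(System.DefaultWorkingDirectory) to
    scripts, and falls back to the current directory when that is unset.
    """
    root = Path(base_dir or os.environ.get("SYSTEM_DEFAULTWORKINGDIRECTORY", "."))
    path = (root / relative_path).resolve()
    if not path.is_file():
        raise FileNotFoundError(f"bcp data file not found: {path}")
    return path


if __name__ == "__main__":
    # Hypothetical artifact layout: <alias>/data/Company.csv under the working directory.
    try:
        csv_path = resolve_data_file(os.path.join("_drop", "data", "Company.csv"))
        print("pass this absolute path to bcp:", csv_path)
    except FileNotFoundError as err:
        print(err)
```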
