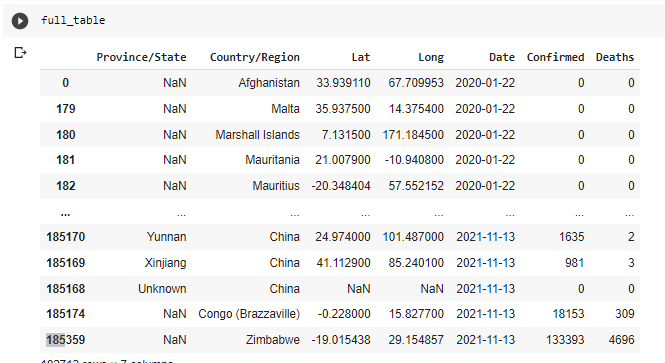

So I'm working with the JHU covid19 data and they've left their recovered dataset go, they're no longer tracking it, just confirmed cases and deaths. What I'm trying to do here is recreate it. The table is the confirmed cases and deaths for every country for every date sorted by date and my getRecovered function below attempts to pick the date for that row, find the date two weeks before that and for the country of that row, and return a 'Recovered' column, which is the confirmed of two weeks ago - the dead today.

Maybe a pointless exercise, but still would like to know how to do it haha. I know it's a big dataset also and there's a lot of operations there, but I've been running it 20 mins now and still going. Did I do something wrong or would it just take this long?

Thanks for any help, friends.

urls = [

'https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_confirmed_global.csv',

'https://raw.githubusercontent.com/CSSEGISandData/COVID-19/master/csse_covid_19_data/csse_covid_19_time_series/time_series_covid19_deaths_global.csv'

]

[wget.download(url) for url in urls]

confirmed = pd.read_csv('time_series_covid19_confirmed_global.csv')

deaths = pd.read_csv('time_series_covid19_deaths_global.csv')

dates = confirmed.columns[4:]

confirmed_long_form = confirmed.melt(

id_vars =['Province/State', 'Country/Region', 'Lat', 'Long'],

value_vars=dates,

var_name='Date',

value_name='Confirmed'

)

deaths_long_form = deaths.melt(

id_vars =['Province/State', 'Country/Region', 'Lat', 'Long'],

value_vars=dates,

var_name='Date',

value_name='Deaths'

)

full_table = confirmed_long_form.merge(

right=deaths_long_form,

how='left',

on=['Province/State', 'Country/Region', 'Date', 'Lat', 'Long']

)

full_table['Date'] = pd.to_datetime(full_table['Date'])

full_table = full_table.sort_values(by='Date', ascending=True)

def getRecovered(row):

ts = row['Date']

country = row['Country/Region']

ts = pd.Timestamp(ts)

do = pd.tseries.offsets.DateOffset(n = 14)

newTimeStamp = ts - do

oldrow = full_table.loc[(full_table['Date'] == newTimeStamp) & (full_table['Country/Region'] == country)]

return oldrow['Confirmed'] - row['Deaths']

full_table['Recovered'] = full_table.apply (lambda row: getRecovered(row), axis=1)

full_table

CodePudding user response:

Your function is being applied row by row, which is likely why performance is suffering. Pandas is fastest when you make use of vectorised functions. For example you can use

pd.to_datetime(full_table['Date'])

to convert the whole date column much faster (see here: Convert DataFrame column type from string to datetime).

You can then add the date offset to that column, something like:

full_table['Recovery_date'] = pd.to_datetime(full_table['Date']) - pd.tseries.offsets.DateOffset(n = 14)

You can then self merge the table on date==recovery_date (plus any other keys) and subtract the numbers.