I have sent the Databricks logs to a storage account by enabling the diagnostic setting. Now I have to read those logs using Azure Databricks for advanced analytics. When I try to mount the path it works, but reads won't work.

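from pyspark.sql.functions import input_file_name

pathIn1 = "/mnt/xyx/y=2021/m=10/d=07/h=10/m=00/PT1H.json"

# First attempt: default JSON reader (expects one JSON object per line)
df1 = spark.read.format("json").load(pathIn1) \
    .withColumn("rawFilePath", input_file_name())

# Second attempt: multiline JSON reader
df1 = spark.read.option("multiline", "true").json(pathIn1) \
    .withColumn("rawFilePath", input_file_name())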

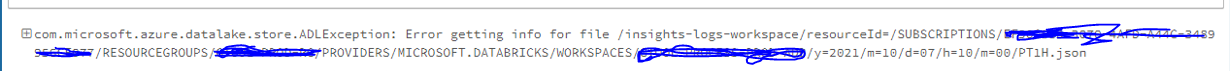

For the Gen1 account, the read runs for about 15 minutes and then fails with this ADL exception. For the Gen2 account, the read query just keeps running indefinitely.

I have tried many approaches but still get this error.

CodePudding user response:

With the code below, I am able to read the data from an Azure storage account using PySpark.

df = spark.read.json("wasbs://container_@storage_account.blob.core.windows.net/sub_folder/*.json")

df.show()

This prints the data from all my JSON files to the terminal (note that df.show() displays only the first 20 rows by default).

Alternatively, you can try it this way:

storage_account_name = "ACC_NAME"

storage_account_access_key = "ACC_key"

spark.conf.set(
    "fs.azure.account.key." + storage_account_name + ".blob.core.windows.net",
    storage_account_access_key)

file_type = "json"

file_location = "wasbs://location/path"

df = spark.read.format(file_type).option("inferSchema", "true").load(file_location)
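
As a rough sketch of how the pieces above fit together, the configuration key and the wasbs:// location can be built by small helpers. The function names account_key_conf and wasbs_location are hypothetical (not part of any library), and the container, account, and path values are the placeholders already used in this answer.

```python
def account_key_conf(storage_account_name: str) -> str:
    """Name of the Spark conf entry that holds the Blob storage access key."""
    return "fs.azure.account.key." + storage_account_name + ".blob.core.windows.net"


def wasbs_location(container: str, storage_account_name: str, path: str) -> str:
    """Build a wasbs:// URL of the same form as the one in the first snippet."""
    return "wasbs://{}@{}.blob.core.windows.net/{}".format(
        container, storage_account_name, path.lstrip("/"))


# Placeholder values taken from the snippets above.
conf_name = account_key_conf("ACC_NAME")
file_location = wasbs_location("container_", "ACC_NAME", "sub_folder/*.json")
print(conf_name)
print(file_location)

# On a Databricks cluster (where `spark` is predefined) these would be used as:
# spark.conf.set(conf_name, storage_account_access_key)
# df = spark.read.format("json").load(file_location)
```

Building the strings in one place makes it harder to drop a "+" or mistype the host suffix, which is an easy mistake when concatenating by hand.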

CodePudding user response:

This is how Databricks mounts work.

If you attempt to create a mount point within an existing mount point, for example:

Mount one storage account to /mnt/storage1

Mount a second storage account to /mnt/storage1/storage2

Reason: This will fail because nested mounts are not supported in Databricks. The recommended approach is to create separate mount entries for each storage object.

For example:

Mount one storage account to /mnt/storage1

Mount a second storage account to /mnt/storage2
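
To illustrate the rule, here is a minimal sketch of a check that could be run before creating a mount. The helper find_mount_conflict is hypothetical and works on plain strings. On Databricks, the list of existing mount points could be taken from dbutils.fs.mounts(), which is left as a comment here because it only exists inside a notebook.

```python
import posixpath


def _normalize(mount_point):
    """Return the mount point with a single leading slash and no trailing slash."""
    return posixpath.normpath("/" + mount_point.strip("/"))


def find_mount_conflict(new_mount_point, existing_mount_points):
    """Return the existing mount point that equals, contains, or sits inside
    the new one, or None if the new mount point is safe to use."""
    new = _normalize(new_mount_point)
    for existing in existing_mount_points:
        current = _normalize(existing)
        if current == "/":
            continue  # skip a root entry, which would otherwise match everything
        if (new == current
                or new.startswith(current + "/")
                or current.startswith(new + "/")):
            return existing
    return None


existing = ["/mnt/storage1"]
# In a notebook this could be: existing = [m.mountPoint for m in dbutils.fs.mounts()]
print(find_mount_conflict("/mnt/storage1/storage2", existing))
print(find_mount_conflict("/mnt/storage2", existing))
```

The comparison appends "/" before matching prefixes so that /mnt/storage10 is not mistaken for a path nested under /mnt/storage1.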

For reference: Link

As a workaround, you can read the data directly from the storage account for processing instead of going through a mount.
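
A minimal sketch of that workaround, assuming an ADLS Gen2 account accessed through the abfss:// scheme with an account key. The helpers abfss_uri and hourly_log_path are hypothetical, as are the container name "logs" and the account name "ACC_NAME". The hourly folder layout is copied from the path in the question.

```python
from datetime import datetime


def abfss_uri(container, storage_account_name, relative_path):
    """Build an abfss:// URI for a path inside an ADLS Gen2 container."""
    return "abfss://{}@{}.dfs.core.windows.net/{}".format(
        container, storage_account_name, relative_path.lstrip("/"))


def hourly_log_path(prefix, ts):
    """Build the hourly file path using the layout shown in the question:
    y=YYYY/m=MM/d=DD/h=HH/m=00/PT1H.json"""
    return prefix.rstrip("/") + ts.strftime("/y=%Y/m=%m/d=%d/h=%H/m=00/PT1H.json")


ts = datetime(2021, 10, 7, 10)
print(hourly_log_path("/mnt/xyx", ts))  # same layout as the mounted path in the question

direct_path = abfss_uri("logs", "ACC_NAME", hourly_log_path("", ts))
print(direct_path)

# On a Databricks cluster (where `spark` is predefined), assuming key-based access:
# spark.conf.set("fs.azure.account.key.ACC_NAME.dfs.core.windows.net", storage_account_access_key)
# df = spark.read.option("multiline", "true").json(direct_path)
```

Reading through the URI bypasses the mount table entirely, so a nested or stale mount cannot affect the read.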