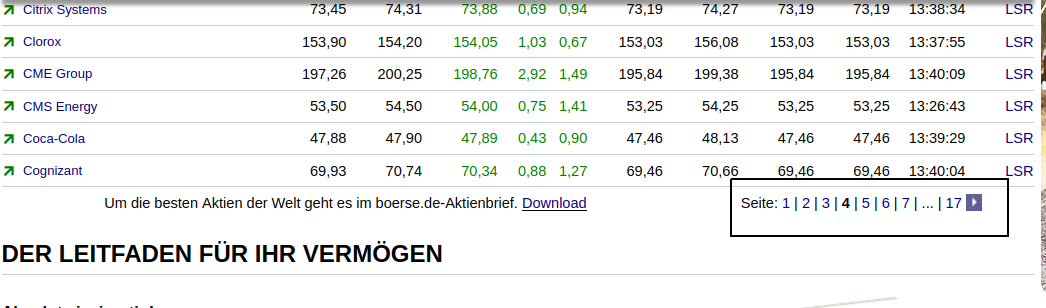

With the code above, I only get the first subpage. Is there a modification that collects the data of all 17 subpages together?

CodePudding user response:

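The data is retrieved via a POST request. The tutorial gets you the initial page, but it doesn't show how to go on from there. Each subpage is requested by sending its page index in the `K_PAGE` field, so you can loop over the pages until an empty table comes back: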

    from io import StringIO

    import pandas as pd
    import requests

    url = 'https://www.boerse.de/ajax/table.php'
    payload = {
        'LISTID': 'US78378X1072@16',
        'K_SORTCOLUM': 'N',
        'K_SORTORDER': 'asc',
        'K_PAGE_SIZE': '30'}

    page = 0
    frames = []
    while True:
        # Request one subpage; K_PAGE is zero-based.
        payload['K_PAGE'] = str(page)
        print('Page: %s' % (page + 1))
        response = requests.post(url, data=payload)
        df = pd.read_html(StringIO(response.text))[0]
        # Keep only real data rows.
        df = df.dropna(subset=['Name'])
        df = df[df['Unnamed: 0'].isnull()].drop(['Unnamed: 0'], axis=1)
        # An empty table means we are past the last subpage.
        if len(df) == 0:
            print('Finished.')
            break
        frames.append(df)
        page += 1

    # DataFrame.append was removed in pandas 2.0, so concatenate once at the end.
    results = pd.concat(frames, sort=False).reset_index(drop=True) if frames else pd.DataFrame()

Output:

    print(results)
                         Name    Bid    Ask   Akt.  ...  Tagesvol.  Vortag      Zeit  Börse
    0              3M Company  15625  15660  15643  ...        NaN   15370  14:39:13    LSR
    1              A.O. Smith   7186   7300   7243  ...        NaN    7167  14:13:52    LSR
    2         Abbott Champion  11175  11215  11195  ...        NaN   11083  14:37:07    LSR
    3                  Abbvie  10408  10430  10419  ...        NaN   10260  14:41:41    LSR
    4                 Abiomed  29260  29530  29395  ...        NaN   28915  14:41:53    LSR
    ..                    ...    ...    ...    ...  ...        ...     ...       ...    ...
    497  Yum! Brands Champion  11155  11200  11178  ...        NaN   10963  14:53:58    LSR
    498    Zebra Technologies  53000  53620  53310  ...        NaN   52330  14:54:01    LSR
    499       Zimmer Holdings  11245  11305  11275  ...        NaN   11118  14:50:51    LSR
    500  Zions Bancorporation   5700   5750   5725  ...        NaN    5625  14:47:18    LSR
    501                Zoetis  19585  19685  19635  ...        NaN   19100  14:53:56    LSR

    [502 rows x 13 columns]
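
If you want to try the stop-on-empty-page pattern without hitting the site, here is a minimal sketch with the accumulation separated from the HTTP call. Note that `collect_pages`, `fetch_page`, `max_pages` and `fake_fetch` are hypothetical names introduced for this illustration, not part of the code above, and the fake fetcher returns made-up rows in place of the real request.

```python
import pandas as pd


def collect_pages(fetch_page, max_pages=100):
    """Call fetch_page(0), fetch_page(1), ... until an empty frame comes back.

    fetch_page is a hypothetical callable: page index -> DataFrame.
    max_pages is an assumed safety cap so a misbehaving server cannot
    keep the loop running forever.
    """
    frames = []
    for page in range(max_pages):
        df = fetch_page(page)
        if df.empty:
            break
        frames.append(df)
    if not frames:
        return pd.DataFrame()
    # One concat at the end avoids re-copying the growing result on each pass.
    return pd.concat(frames, ignore_index=True, sort=False)


# Stand-in for the real request: three pages of made-up rows, then nothing.
def fake_fetch(page):
    fake_pages = [["A", "B"], ["C", "D"], ["E"]]
    names = fake_pages[page] if page < len(fake_pages) else []
    return pd.DataFrame({"Name": names})


results = collect_pages(fake_fetch)
print(len(results))  # 5
```

For the real site, `fetch_page` would wrap the `requests.post` call and the `read_html` cleanup shown in the answer. The cap is optional, but it guards against a server that never returns an empty table.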