I have a pandas DataFrame like this:

    api                          region  base_path
    https://apis.us/image/       us      /image
    https://apis.emea/video/     emea    /video
    https://apis.asia/docs/      asia    /docs
    https://apis.emea/image/     emea    /image
    https://apis.us/video/       us      /video
    https://apis.us/docs/        us      /docs
    https://apis.asia/location/  asia    /location

Some APIs in the list are common to more than one region. For example, /image is common to both us and emea. I want the output DataFrame to look like this:

    api_us_emea             api_asia_us            api_asia_emea  api_us_emea_asia  api_usa  api_emea  api_asia
    https://apis.us/image/  https://apis.us/docs/  No Common api  No Common api     N/A      N/A       https://apis.asia/location/
    https://apis.us/video/

For common APIs, I always want the us API to appear in the column value whenever us is one of the regions. For example, the api_us_emea column holds only the us API, api_asia_emea holds the asia API, and api_us_emea_asia holds the us API as well.

How can I achieve this?

CodePudding user response:

I think this code snippet gives you what you want, or at least a reasonable direction for solving your problem. The idea is to iterate through the possible subsets of regions, from largest to smallest, and collect every base_path that is present in all regions of the subset. Then remove any base_path that was already assigned to a larger subset containing the current one. Hope this helps.

    from collections import defaultdict
    from itertools import chain, combinations

    import pandas as pd

    data = [['https://apis.us/image/', 'us', '/image'],
            ['https://apis.emea/video/', 'emea', '/video'],
            ['https://apis.asia/docs/', 'asia', '/docs'],
            ['https://apis.emea/image/', 'emea', '/image'],
            ['https://apis.us/video/', 'us', '/video'],
            ['https://apis.us/docs/', 'us', '/docs'],
            ['https://apis.asia/location/', 'asia', '/location']]
    df = pd.DataFrame(data, columns=['api', 'region', 'base_path'])

    def powerset(iterable):
        "powerset([1,2,3]) --> () (1,) (2,) (3,) (1,2) (1,3) (2,3) (1,2,3)"
        s = list(iterable)
        return chain.from_iterable(combinations(s, r) for r in range(len(s) + 1))

    def flatten(t):
        return [item for sublist in t for item in sublist]

    # Maps a tuple of regions to the base_paths assigned to exactly that group.
    new_dict = defaultdict(list)

    # Visit the largest subsets first so smaller ones can drop paths already used.
    for subset in reversed(list(powerset(pd.unique(df['region'])))):
        if len(subset) > 0:
            # Collect every base_path that exists in all regions of this subset.
            for api_path in pd.unique(df['base_path']):
                df_path = df[df['base_path'] == api_path]
                if set(subset).issubset(set(pd.unique(df_path['region']))):
                    new_dict[subset].append(api_path)
            # Remove paths already assigned to a strictly larger superset.
            curr_keys = list(new_dict.keys())
            for key in curr_keys:
                if set(subset).issubset(set(key)) and len(key) > len(subset):
                    for remove_path in [x for x in new_dict[subset] if x in new_dict[key]]:
                        new_dict[subset].remove(remove_path)

    new_df = pd.DataFrame({k: pd.Series(v) for k, v in new_dict.items()})
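The snippet above groups base_path values by region subset, while the question asks for full URLs with the us URL preferred. A minimal sketch of that last step follows. The `PRIORITY` list (us, then asia, then emea), the helper name `urls_by_region_group`, and the column naming in priority order are assumptions inferred from the question's examples, not part of the answer above.

```python
import pandas as pd

# Sample rows copied from the question.
data = [
    ["https://apis.us/image/", "us", "/image"],
    ["https://apis.emea/video/", "emea", "/video"],
    ["https://apis.asia/docs/", "asia", "/docs"],
    ["https://apis.emea/image/", "emea", "/image"],
    ["https://apis.us/video/", "us", "/video"],
    ["https://apis.us/docs/", "us", "/docs"],
    ["https://apis.asia/location/", "asia", "/location"],
]
df = pd.DataFrame(data, columns=["api", "region", "base_path"])

# Assumed preference order when a path exists in several regions
# (hypothetical; inferred from the examples in the question).
PRIORITY = ["us", "asia", "emea"]


def urls_by_region_group(frame, priority):
    """Map 'api_<regions>' to the preferred-region URLs of each exact region group."""
    result = {}
    # sort=False keeps base_paths in order of first appearance.
    for _, group in frame.groupby("base_path", sort=False):
        present = set(group["region"])
        # Regions that serve this path, listed in priority order.
        # Every region in the data is assumed to appear in `priority`.
        regions = [r for r in priority if r in present]
        # URL of the highest-priority region that serves this path.
        preferred = group.loc[group["region"] == regions[0], "api"].iloc[0]
        result.setdefault("api_" + "_".join(regions), []).append(preferred)
    return result


columns = urls_by_region_group(df, PRIORITY)

# Columns have different lengths, so wrap each list in a Series;
# shorter columns are padded with NaN.
wide = pd.DataFrame({name: pd.Series(urls) for name, urls in columns.items()})
print(wide)
```

Only region groups that actually occur get a column here. Groups with no shared path (for example asia and emea) would need to be added separately if a "No Common api" placeholder is wanted.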

CodePudding user response:

Try this:

    import functools
    import itertools
    import operator

    import pandas as pd

    # df is the DataFrame from the question (columns: api, region, base_path).

    def find_common_elements(p):
        # Paths that appear in every list of p.
        return list(set.intersection(*[set(li) for li in p]))

    def find_unique_elements(p, l):
        # Items of l that occur exactly once across all lists in p.
        merged_p = functools.reduce(operator.iconcat, p, [])
        return [x for x in l if merged_p.count(x) == 1]

    # Extract (region, path) pairs such as ("us", "image") from each URL.
    strings_array = df["api"].str[:-1].str.split("/").str[-2:].apply(lambda x: (x[0][5:], x[1])).values

    # Map each region to the list of its paths.
    d = dict()
    for region, path in strings_array:
        d.setdefault(region, []).append(path)

    # Set iteration order is arbitrary, so which region comes first
    # in each combination is not guaranteed.
    se = set([x[0] for x in strings_array])
    combs = [list(itertools.combinations(se, i)) for i in range(1, len(se) + 1)]

    col1, col2 = [], []

    # Single regions: paths that exist in that region only.
    for item in combs[0]:
        col1.append("_".join(["api"] + list(item)))
        col2.append(["https://apis." + item[0] + "/" + s for s in find_unique_elements([d[c] for c in d.keys()], d[item[0]])])

    # Groups of two or more regions: paths shared by every region in the group.
    for i in range(1, len(combs)):
        for item in combs[i]:
            common = find_common_elements([d[c] for c in item])
            if len(common) > 0:
                col1.append("_".join(["api"] + list(item)))
                col2.append(["https://apis." + item[0] + "/" + s for s in common])
            else:
                col1.append("_".join(["api"] + list(item)))
                col2.append("No Common api")

    output_df = pd.DataFrame({"col1": col1, "col2": col2})
    output_df

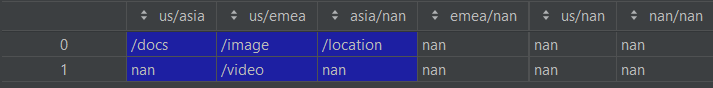

Output:

       col1              col2
    0  api_us            []
    1  api_asia          [https://apis.asia/location]
    2  api_emea          []
    3  api_us_asia       [https://apis.us/docs]
    4  api_us_emea       [https://apis.us/image, https://apis.us/video]
    5  api_asia_emea     No Common api
    6  api_us_asia_emea  No Common api
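The row order and the region used for each URL in this output depend on how the set of regions happens to iterate, which Python does not guarantee. One way to make it stable, sketched below, is to sort the regions by an explicit preference list before building the combinations, since `itertools.combinations` emits tuples in input order. The `priority` list is an assumption based on the question (us first, then asia, then emea).

```python
from itertools import combinations

# Assumed preference order (hypothetical; inferred from the question).
priority = ["us", "asia", "emea"]

# Regions as they might come out of a set, in arbitrary order.
regions = {"emea", "asia", "us"}

# Sort by position in the priority list so every combination is stable
# and its first element is always the most preferred region.
ordered = sorted(regions, key=priority.index)

combs = [list(combinations(ordered, size)) for size in range(1, len(ordered) + 1)]

# Column labels in the same style as col1 above.
labels = ["_".join(["api", *item]) for group in combs for item in group]
print(labels)
```

With the regions ordered this way, `item[0]` in the loops above is us whenever us is part of the combination, which matches the preference stated in the question.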