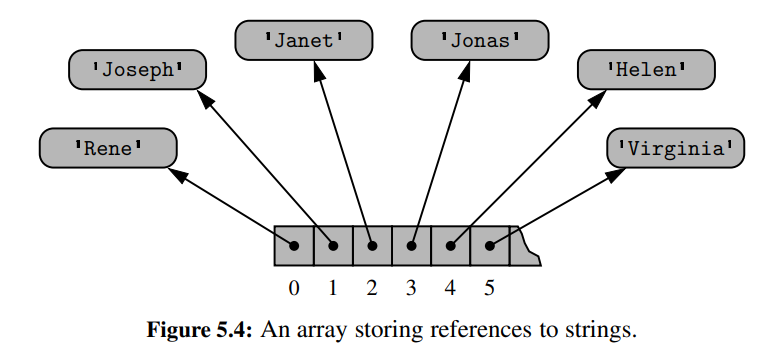

Reading  so no matter what kind of data you are inserting, there is fixed length of addresses.

so no matter what kind of data you are inserting, there is fixed length of addresses.

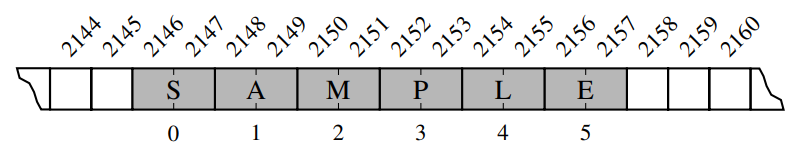

Then, the Author mentions that for strings for example, because we know that each elements is Unicode char, that can be represented in 64 bit, python uses compact arrays - holds the fixed length data and not references as mentioned above in regular lists.

Till here, everything fine and understood, the problem is in the section below -

Compact arrays have several advantages over referential structures in terms of computing performance. Most significantly, the overall memory usage will be much lower for a compact structure because there is no overhead devoted to the explicit storage of the sequence of memory references (in addition to the primary data). That is, a referential structure will typically use 64-bits for the memory address stored in the array, on top of whatever number of bits are used to represent the object that is considered the element. Also, each Unicode character stored in a compact array within a string typically requires 2 bytes. If each character were stored independently as a one-character string, there would be significantly more bytes used.

still, everything fine, but look at the math below -

As another case study, suppose we wish to store a sequence of one million, 64-bit integers. In theory, we might hope to use only 64 million bits. However, we estimate that a Python list will use four to five times as much memory. Each element of the list will result in a 64-bit memory address being stored in the primary array, and an int instance being stored elsewhere in memory. Python allows you to query the actual number of bytes being used for the primary storage of any object. This is done using the getsizeof function of the sys module. On our system, the size of a typical int object requires 14 bytes of memory (well beyond the 4 bytes needed for representing the actual 64-bit number). In all, the list will be using 18 bytes per entry, rather than the 4 bytes that a compact list of integers would require.

The typical int require 4 bytes, the address for 64bit system requires another 8 bytes, so how he ended up in 18 bytes per entry?

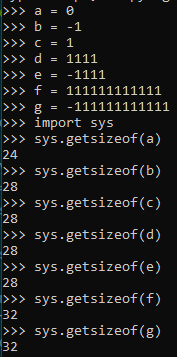

I'm adding some interesting test that I would love to understand the implementation in python under the hood if possible.

CodePudding user response:

14 bytes is an unreasonable size for an 64-bit integer object in memory. It will very likely by 16 bytes.

The extra 8 bytes is used for:

The type and size of the object. Remember that in the dynamic array, there is only a pointer to the object. It could be an integer, but it could also be a Boolean or another array. Python needs to know what the following 8 bytes represent.

Maybe a reference count. Some implementations of python use reference counting. They keep track of how many references there are to the object and then delete it from memory when the count gets to 0.

Alignment. On some architectures, 8-byte data types like double-precision floating point numbers or 64-bit pointers need to be stored at even multiples of 8 bytes. Sometimes they're just faster to use when that's the case. When you allocate memory, the system gives you a region of memory that could by used for anything, so it will be aligned on an even 8 or (usually) 16-byte boundary. That's why 14 is not a reasonable size -- it would get rounded up to 16 by alignment.

CodePudding user response:

First of all, there is a mistake in the text. They say we'd only need 4 bytes to represent a 64-bit number. But actually, with 4 bytes, you can only represent a 32-bit number; because (2^8)^4 = 2^32 (or in simpler terms, 8*4 = 32). You need 8 bytes for a 64-bit number. My guess is that the book you read used to be written with 32-bit systems in mind, and there was an attempt to adapt it for 64-bit systems, but the author got confused and now some of the numbers are inconsistent.

Second, they say "only 4 bytes would be needed to represent a 64-bit integer, but a typical int object in python is 14 bytes". They never say that a typical int in python is 4 bytes. The reason why an int takes more memory in python than in C is that C integers are fixed-size, with a fixed MAX_INT value for the maximum representable integer; whereas python handles "arbitrarily large integers", with no maximum representable value, so naturally there is going to be a small overhead to store information about the size of the int. In addition, every value in python is an object, and requires a small overhead of memory to store metainformation about the object, in addition to the actual data.

Arithmetic with arbitrarily large integer is usually called "BigNum arithmetic" or something similar. In many languages, if you want to use BigNums, you need an external library, such as the GNU Multiple Precision library for C/C . In python, builtin int are BigNums already.

Related question with more detail: Why do ints require three times as much memory in Python?; here they say "three times as much memory" because a typical C int is 8-bytes on a 64-bit system, whereas a typical Python int is apparently 24-bytes (not 14) on a 64-bit system.