We're experiencing a problem with cert-manager and TLS certificates. When we deploy an application with Helm, including all the required annotations, the TLS secret is not created.

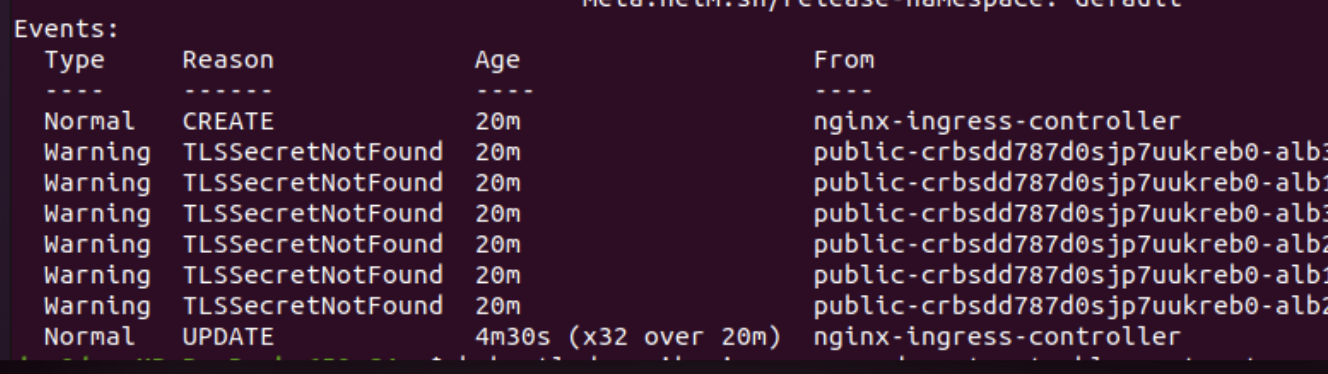

Ingress shows the following error:

In the Kubernetes dashboard, when I open the secret from the ingress resource's details, I get a 404 error: the ingress is created referencing a secret that doesn't exist.
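To check this across a whole namespace rather than one ingress at a time, here is a minimal sketch that compares the `spec.tls[].secretName` values of each Ingress with the Secrets that actually exist. It assumes you have saved the output of `kubectl get ingress -o json` and `kubectl get secrets -o json` for the same namespace; the function name `missing_tls_secrets` is hypothetical.

```python
import json
import sys


def missing_tls_secrets(ingress_list: dict, secret_list: dict) -> list:
    """Return (ingress name, secret name) pairs whose TLS secret does not exist.

    Both arguments are the parsed JSON of `kubectl get ... -o json` (a List
    object with an "items" array) taken from the same namespace.
    """
    existing = {s["metadata"]["name"] for s in secret_list.get("items", [])}
    missing = []
    for ing in ingress_list.get("items", []):
        name = ing["metadata"]["name"]
        # Each spec.tls entry may name the Secret that should hold the certificate.
        for tls in ing.get("spec", {}).get("tls", []) or []:
            secret = tls.get("secretName")
            if secret and secret not in existing:
                missing.append((name, secret))
    return missing


if __name__ == "__main__":
    # Usage (hypothetical file names): python check_tls.py ingresses.json secrets.json
    with open(sys.argv[1]) as f_ing, open(sys.argv[2]) as f_sec:
        for ing, secret in missing_tls_secrets(json.load(f_ing), json.load(f_sec)):
            print(f"ingress {ing}: secret {secret} not found")
```

An empty result means every TLS secret referenced by an Ingress exists; each reported pair is a certificate that cert-manager has not (yet) written.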

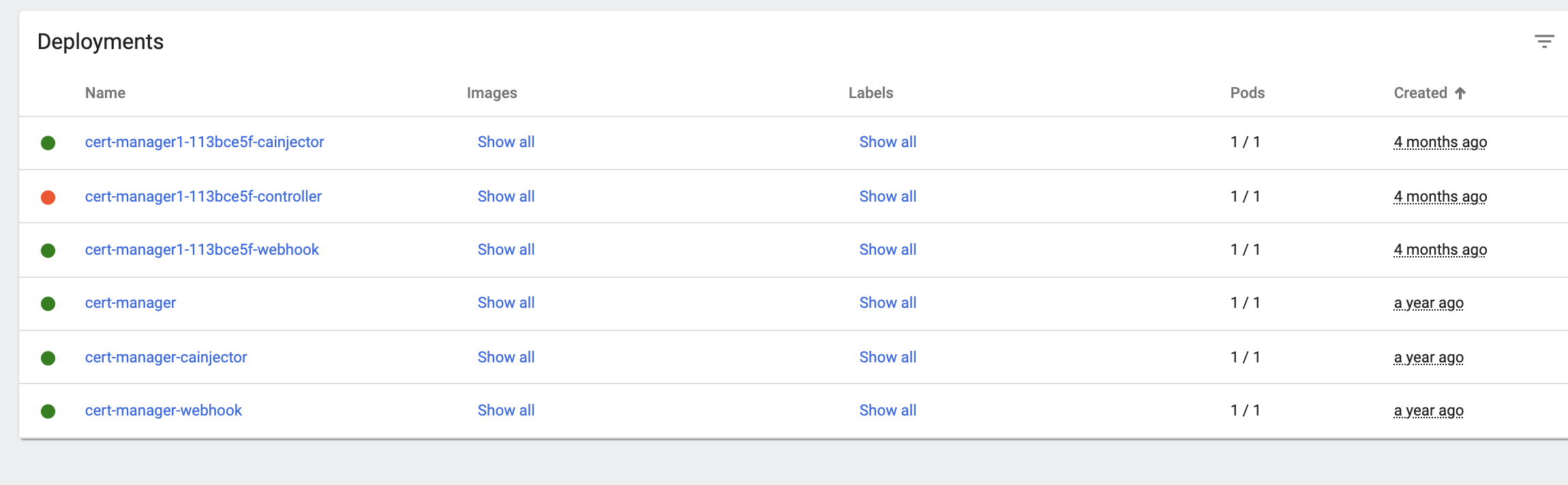

Looking at the cert-manager namespace, I found what appears to be two deployments:

The year-old one doesn't seem to trigger at all. The 4-month-old one seems to trigger but fails continuously with the errors below. It is also shown in red because of an evicted pod that failed, even though it is running.

E1209 19:46:28.340854 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.Certificate: failed to list *v1.Certificate: the server could not find the requested resource (get certificates.cert-manager.io)

E1209 19:46:41.726643 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.CertificateRequest: failed to list *v1.CertificateRequest: the server could not find the requested resource (get certificaterequests.cert-manager.io)

E1209 19:46:42.842402 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.Issuer: failed to list *v1.Issuer: the server could not find the requested resource (get issuers.cert-manager.io)

E1209 19:46:43.581019 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.ClusterIssuer: failed to list *v1.ClusterIssuer: the server could not find the requested resource (get clusterissuers.cert-manager.io)

E1209 19:46:51.205804 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.Challenge: failed to list *v1.Challenge: the server could not find the requested resource (get challenges.acme.cert-manager.io)

E1209 19:46:51.819486 1 reflector.go:138] external/io_k8s_client_go/tools/cache/reflector.go:167: Failed to watch *v1.Order: failed to list *v1.Order: the server could not find the requested resource (get orders.acme.cert-manager.io)
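Each of these errors is the API server answering "not found" when the controller lists a `cert-manager.io/v1` (or `acme.cert-manager.io/v1`) resource. One possible reading, an assumption not confirmed in this thread, is that the installed CustomResourceDefinitions do not serve `v1`, for example because they came from the older release. Below is a minimal sketch that reads the output of `kubectl get crd certificates.cert-manager.io -o json` and lists the versions marked `served: true`; the helper name `served_versions` is hypothetical.

```python
import json
import sys


def served_versions(crd: dict) -> list:
    """Return the API versions a CustomResourceDefinition currently serves.

    Expects the apiextensions.k8s.io/v1 layout, where spec.versions is a list
    of {"name": ..., "served": bool, "storage": bool} entries.
    """
    return [v["name"] for v in crd.get("spec", {}).get("versions", []) if v.get("served")]


if __name__ == "__main__":
    # Usage: kubectl get crd certificates.cert-manager.io -o json | python served.py v1
    wanted = sys.argv[1] if len(sys.argv) > 1 else "v1"
    versions = served_versions(json.load(sys.stdin))
    print("served:", ", ".join(versions) or "(none)")
    if wanted not in versions:
        print(f"{wanted} is not served; listing it through the API returns 'not found'")
```

Running it for each CRD named in the errors (certificates, certificaterequests, issuers, clusterissuers, challenges, orders) would show whether the API version the newer controller watches is available at all.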

This cluster is new to me. In the cert-manager namespace I found a total of 473 evicted pods (I'm tempted to clean those up; I should, right?)
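Pods evicted by the kubelet stay behind in phase `Failed` with `status.reason` set to `Evicted`; their containers are no longer running, so they remain only as records. Here is a minimal sketch that picks them out of `kubectl get pods -n cert-manager -o json` output and prints the matching `kubectl delete pod` commands for review, rather than deleting anything itself. The function name `evicted_pods` is hypothetical.

```python
import json
import sys


def evicted_pods(pod_list: dict) -> list:
    """Return names of pods that were evicted (phase Failed, reason Evicted)."""
    names = []
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        if status.get("phase") == "Failed" and status.get("reason") == "Evicted":
            names.append(pod["metadata"]["name"])
    return names


if __name__ == "__main__":
    # Usage: kubectl get pods -n cert-manager -o json | python evicted.py
    names = evicted_pods(json.load(sys.stdin))
    print(f"# {len(names)} evicted pods")
    for name in names:
        # Printed only, so the list can be reviewed before anything is deleted.
        print(f"kubectl delete pod -n cert-manager {name}")
```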

Anyway, the main issue is that cert-manager is not creating the TLS secret. I can provide plenty of additional information, but everything else looks fine.

Answer:

In the end, I resolved the issue by scaling the ReplicaSet for cert-manager1. That caused the pods to be restarted, and everything then worked correctly.

However, on further investigation, having two deployments is not correct, as they are likely conflicting with each other. Part of the solution is to remove one of them so that only one is running, and to update that one to the latest version. These are the two Helm releases:

| NAME | NAMESPACE | REVISION | UPDATED | STATUS | CHART | APP VERSION |
|---|---|---|---|---|---|---|
| cert-manager | cert-manager | 1 | 2020-07-24 18:49:08.541265133 +0530 +0530 | deployed | cert-manager-v0.15.1 | v0.15.1 |
| cert-manager1-113bce5f | cert-manager | 1 | 2021-08-03 14:54:54.351112781 +0000 UTC | deployed | cert-manager-0.1.10 | 1.4.2 |
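To spot this kind of overlap on other clusters, here is a minimal sketch over `helm list -A -o json` output. It assumes the `name`, `namespace` and `chart` fields of Helm 3's JSON listing, groups releases by chart name, and reports any chart installed more than once. Deriving the chart name by stripping a `-<version>` suffix is a heuristic of this sketch, and the function names are hypothetical.

```python
import json
import re
import sys
from collections import defaultdict

# Heuristic: a chart string is "<chart name>-<semver>", optionally with a leading "v".
_CHART_RE = re.compile(r"^(.*?)-v?\d+\.\d+\.\d+")


def chart_name(chart: str) -> str:
    """Strip the version suffix, e.g. "cert-manager-v0.15.1" -> "cert-manager"."""
    m = _CHART_RE.match(chart)
    return m.group(1) if m else chart


def duplicate_charts(releases: list) -> dict:
    """Map chart name -> ["namespace/release", ...] for charts installed more than once."""
    by_chart = defaultdict(list)
    for r in releases:
        by_chart[chart_name(r["chart"])].append(f'{r["namespace"]}/{r["name"]}')
    return {c: names for c, names in by_chart.items() if len(names) > 1}


if __name__ == "__main__":
    # Usage: helm list -A -o json | python dup_releases.py
    for chart, names in duplicate_charts(json.load(sys.stdin)).items():
        print(f"{chart} installed {len(names)} times: {', '.join(names)}")
```

Applied to the two releases above, both charts reduce to `cert-manager`, so the pair would be reported as a duplicate.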