I want to create a VM on Google Cloud that runs my Docker container. My image is in Google's Artifact Registry and I am able to deploy it to a VM, but it won't accept network requests, even locally (I tried `curl` from an SSH session on the VM and it doesn't work). The container is a simple Node.js app that responds "Hi there!" to GET requests on `/`.

I think the problem is how the ports are specified when the container is created from the image. When I run the container from my local command line, I simply use the `-p` flag to publish its ports. But since Google's VMs take an image from the Artifact Registry and automatically create a container when the VM is built, there is no step where I can pass `-p`.

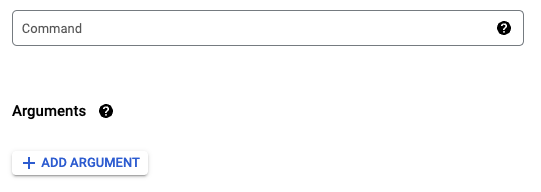

I think the solution may have to do with the "Command" option in the Cloud Console (see below) but the

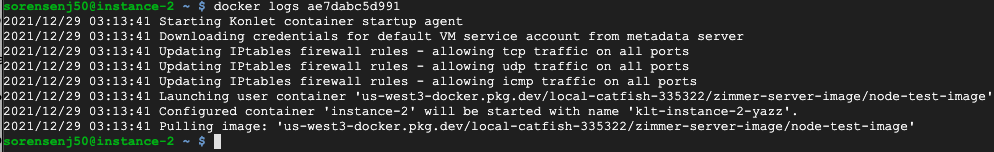

EDIT: Logs

OK, the logs were more interesting than I thought. I was right that a Docker container was originally created.

But this container was soon deleted, and a new one appeared with a different ID that restarted itself every so often.

EDIT 2:

Dockerfile:

```dockerfile
FROM node:latest
WORKDIR /untitled
COPY package.json .
RUN npm install
EXPOSE 3000
COPY . ./
CMD node server.js
```

My project is named "untitled." This is a test image just to get everything working.

The actual Node.js code is this:

```javascript
const express = require("express");

const app = express();
const port = process.env.PORT || 3000;

app.get('/', (req, res) => {
  res.send("Hi there!");
});

// Log the port actually used, not a hard-coded 3000.
app.listen(port, () => console.log(`Listening on port ${port}`));
```
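Because `process.env.PORT || 3000` lets the environment decide the port, and a container that shares the host's network has no port remapping, it can help to know exactly which port the app picked. A minimal sketch, assuming you want the fallback to be explicit and malformed values to fail loudly; `resolvePort` is a hypothetical helper, not part of Express:

```javascript
// Hypothetical helper (not part of the original app or Express): resolves the
// listening port from an env object, falling back like `process.env.PORT || 3000`.
function resolvePort(env, fallback = 3000) {
  const raw = env.PORT;
  // Unset or empty PORT -> use the fallback, same as the `||` in server.js.
  if (raw === undefined || raw === "") {
    return fallback;
  }
  const port = Number(raw);
  // Reject non-numeric or out-of-range values instead of passing them to listen().
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new RangeError(`Invalid PORT value: ${raw}`);
  }
  return port;
}
```

In `server.js` this would replace the `port` line with `const port = resolvePort(process.env);`, so a stray `PORT` variable in the VM's container settings shows up immediately in the startup log.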

And my package.json is:

```json
{
  "name": "untitled",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC",
  "dependencies": {
    "express": "^4.17.2"
  }
}
```

I'm sure I'm doing something wrong, but I doubt it's the source code: it doesn't exit when I run it in a local Docker container.

Answer:

IIRC, `gcloud compute instances create-with-container` runs the container on the host's network. So any port the container listens on should be available on the same port on the host.

Can you confirm that your container is running on the VM? SSH in and run `docker container ls` (as you would locally).

Can you provide more information on your `curl` command and its response?

You should be able to run `sudo journalctl` on the VM to review its startup and identify any issues it had starting the container. You may want to review the `konlet-startup` and `docker` units specifically, e.g. `sudo journalctl --unit=konlet-startup`, and eyeball whether it starts a container (hopefully yours, with its ID).

Another thing to try is using `ss` to list the VM's listening TCP ports. One of these should match your container:

```
sudo ss --tcp --listening --processes
```
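`ss` shows what is listening and `curl` checks the HTTP layer. As a rough Node.js equivalent of the TCP half of that check, here is a minimal sketch, assuming Node.js is available where you run it (for example via `docker exec` into a `node`-based container, which under host networking sees the VM's `localhost`). `probePort` and the 1-second default timeout are hypothetical choices:

```javascript
const net = require("net");

// Hypothetical helper: resolves true if a TCP connection to host:port succeeds
// within timeoutMs, and false on refusal, any other socket error, or timeout.
function probePort(host, port, timeoutMs = 1000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    const finish = (open) => {
      socket.destroy(); // we only care whether the connect succeeded
      resolve(open);
    };
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => finish(true));
    socket.once("timeout", () => finish(false));
    socket.once("error", () => finish(false));
  });
}
```

For example, `probePort("127.0.0.1", 3000).then(console.log)` should print `true` if the Node.js app is listening on port 3000, and `false` otherwise.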

Update

```
PROJECT=...
ZONE=...
INSTANCE=test

gcloud compute instances create-with-container ${INSTANCE} \
  --container-image=gcr.io/kuar-demo/kuard-amd64:blue \
  --image-family=cos-stable \
  --image-project=cos-cloud \
  --machine-type=f1-micro \
  --zone=${ZONE} \
  --project=${PROJECT}

gcloud compute ssh ${INSTANCE} \
  --zone=${ZONE} \
  --project=${PROJECT}
```

On the VM:

```
docker container ls --format="{{.ID}}\t{{.Image}}"
521d11ace93b    gcr.io/kuar-demo/kuard-amd64:blue
11ae194b354e    gcr.io/stackdriver-agents/stackdriver-logging-agent:1.8.9

curl \
  --silent \
  --output /dev/null \
  --write-out '%{response_code}' \
  localhost:8080
200 # Success

# Matches `kuard` container
sudo journalctl --unit=konlet-startup
Starting a container with ID: 521d11ace93b...

# http-alt == 8080
sudo ss --tcp --listening --processes
Local Address:Port  Process
*:http-alt          users:(("kuard",pid=893,fd=3))

# Using pid from ss command
ps aux | grep 893
893 /kuard
```
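The `users:((...))` column printed by `ss --processes` is what links a listening port to a PID. If you want to script that lookup instead of eyeballing it, here is a minimal sketch, assuming the `("name",pid=N,fd=N)` layout shown in the session above; `parseSsUsers` is a hypothetical name:

```javascript
// Hypothetical helper: extracts {name, pid, fd} entries from the
// `users:(("name",pid=N,fd=N),...)` field printed by `ss --processes`.
function parseSsUsers(field) {
  const entries = [];
  const pattern = /\("([^"]*)",pid=(\d+),fd=(\d+)\)/g;
  for (const match of field.matchAll(pattern)) {
    entries.push({ name: match[1], pid: Number(match[2]), fd: Number(match[3]) });
  }
  return entries;
}

// Usage with the `ss` line from the session above:
const line = '*:http-alt users:(("kuard",pid=893,fd=3))';
console.log(parseSsUsers(line)); // [ { name: 'kuard', pid: 893, fd: 3 } ]
```

The PID it returns is the one to pass to `ps` to confirm which process owns the port.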