I replaced a GTX 1080 Ti graphics card with an RTX A5000 in a desktop machine and reinstalled Ubuntu, upgrading from 16.04 to 20.04 to meet the requirements. Now I can't retrain or predict with our current model: when loading the model, Keras hangs for a very long time, and all predicted results are NaN. We use Keras 2.2.4 with TensorFlow 2.1.0 and CUDA 10.1.243, which I installed using Conda, and I have tried several different drivers.

If I put the old GTX 1080 Ti card back into the machine, the code works fine.

Any idea what could be wrong? Could it be that the A5000 does not support the same models as the old 1080 Ti card?

CodePudding user response:

OK, I can confirm that the following setup works on the RTX A5000:

- CUDA: 11.6.0

- Tensorflow: 2.7.0

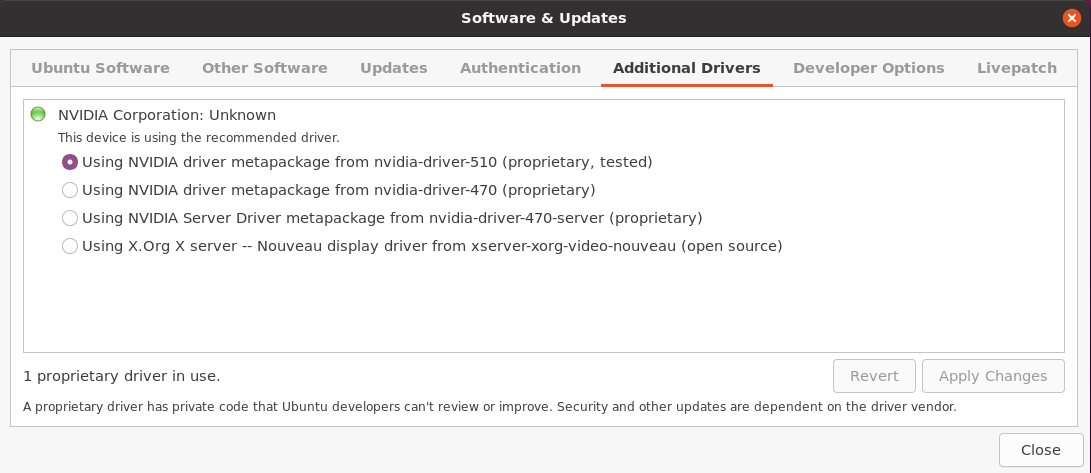

Driver Version: 510.47.03
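A likely reason the older stack fails, as far as I understand it: the A5000 is an Ampere card (compute capability 8.6), and CUDA 10.1 predates that architecture, so TensorFlow 2.1.0 ships no native kernels for it. Below is a minimal sketch for checking this kind of mismatch. The helper names (`parse_version`, `cuda_supports`, `report`) and the small lookup table are my own illustration, not part of TensorFlow, and the minimum CUDA versions are what I recall from NVIDIA's release notes, so please verify them against the official documentation.

```python
# Hypothetical helper: minimum CUDA toolkit release that natively targets
# a given GPU compute capability. Values are assumptions taken from
# NVIDIA's release notes; extend or correct the table as needed.
MIN_CUDA_FOR_CC = {
    (6, 1): (8, 0),   # Pascal, e.g. GTX 1080 Ti
    (8, 6): (11, 1),  # Ampere, e.g. RTX A5000
}


def parse_version(text):
    """Turn a string such as '10.1.243' into (10, 1) for comparison."""
    major, minor = text.split(".")[:2]
    return int(major), int(minor)


def cuda_supports(cuda_version, compute_capability):
    """Return True if this CUDA release natively targets the given GPU."""
    return parse_version(cuda_version) >= MIN_CUDA_FOR_CC[compute_capability]


def report():
    """Print what the installed TensorFlow build sees, if it is installed."""
    try:
        import tensorflow as tf
    except ImportError:
        print("TensorFlow is not installed in this environment")
        return
    print("TensorFlow version:", tf.__version__)
    print("Built with CUDA:", tf.test.is_built_with_cuda())
    print("Visible GPUs:", tf.config.list_physical_devices("GPU"))
    # get_build_info() is only available in newer TensorFlow releases,
    # so guard the call instead of assuming it exists.
    if hasattr(tf.sysconfig, "get_build_info"):
        print("Build info:", dict(tf.sysconfig.get_build_info()))


if __name__ == "__main__":
    print("CUDA 10.1.243 on RTX A5000:", cuda_supports("10.1.243", (8, 6)))
    print("CUDA 11.6.0 on RTX A5000:", cuda_supports("11.6.0", (8, 6)))
    report()
```

Running `report()` inside the Conda environment shows which TensorFlow build is actually being used and whether it sees the GPU, which is worth checking before and after upgrading.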

Thanks to @talonmies for his comment.