So I'm preparing for a technical interview, and one of my practice questions is the Kth smallest number. I know that I can do a sort for O(n * log(n)) time and use a heap for O(n * log(k)). However I also know I can partition it (similar to quicksort) for an average case of O(n).

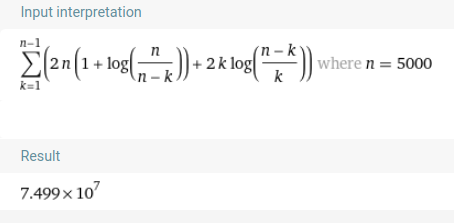

The actual calculated average time complexity should be:

I've double checked this math using WolframAlpha, and it agrees.

So I've coded my solution, and then I calculated the actual average time complexity on random data sets. For small values of n, it's pretty close. For example n=5 might give me an actual of around 6.2 when I expect around 5.7. This slightly more error is consistent.

This only gets worse as I increase the value of n. For example, for n=5000, I get around 15,000 for my actual average time complexity, when it should be slightly less than 10,000.

So basically, my question is where are these extra iterations coming from? Is my code wrong, or is it my math? My code is below:

import java.util.Arrays;

import java.util.Random;

public class Solution {

static long tc = 0;

static void swap(int[] arr, int i, int j) {

int temp = arr[i];

arr[i] = arr[j];

arr[j] = temp;

}

static int kMin(int[] arr, int k) {

arr = arr.clone();

int pivot = pivot(arr);

if(pivot > k) {

return kMin(Arrays.copyOfRange(arr, 0, pivot), k);

} else if(pivot < k) {

return kMin(Arrays.copyOfRange(arr, pivot 1, arr.length), k - pivot - 1);

}

return arr[k];

}

static int pivot(int[] arr) {

Random rand = new Random();

int pivot = rand.nextInt(arr.length);

swap(arr, pivot, arr.length - 1);

int i = 0;

for(int j = 0; j < arr.length - 1; j ) {

tc ;

if(arr[j] < arr[arr.length - 1]) {

swap(arr, i, j);

i ;

}

}

swap(arr, i, arr.length - 1);

return i;

}

public static void main(String args[]) {

int iterations = 10000;

int n = 5000;

for(int j = 0; j < iterations; j ) {

Random rd = new Random();

int[] arr = new int[n];

for (int i = 0; i < arr.length; i ) {

arr[i] = rd.nextInt();

}

int k = rd.nextInt(arr.length - 1);

kMin(arr, k);

}

System.out.println("Actual: " tc / (double)iterations);

double expected = 2.0 * n - 2.0 - (Math.log(n) / Math.log(2));

System.out.println("Expected: " expected);

}

}

CodePudding user response:

As you and others have pointed out in the comments, your calculation assumed that the array was split in half on each iteration by the random pivot, which is incorrect. This uneven splitting has a significant impact: when the element you're trying to select is the actual median, for instance, the expected size of the array after one random pivot choice is 75% of the original, since you'll always choose the larger of the two arrays.

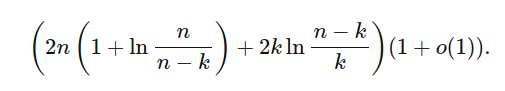

For an accurate estimate of the expected comparisons for each value of n and k, David Eppstein published an accessible analysis

This is a very close estimate for your values, even though this assumes no duplicates in the array.