I have a setup with multiple cameras that all point towards the same scene. All cameras are calibrated to the same world coordinate system (i.e., I know the location of every camera with respect to the origin of the world coordinate system). In each camera image, I detect objects in the scene (segmentation). My goal is to count all objects in the scene, and I do not want to count an object twice just because it appears in multiple images. This means that if I detect an object in image A and an object in image B, I need to determine whether they are the same object. It should be possible to do this using the 3D information I have from my calibrated cameras. I was thinking of the following:

Voxel carving. I create silhouettes from the detected objects in all images, apply voxel carving, and then count the number of distinct voxel clusters. Will this be the number of unique objects in the scene?

I also thought about taking the center of each detected object, casting a ray through it into the 3D world for each camera, and then checking whether rays from different cameras cross each other. But this would be very error-prone, as the objects might have a slightly different size/shape in each image and the center might be off. Also, the locations of the cameras are not 100% exact, which will result in the rays being off.
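To make the ray idea concrete: since two noisy rays almost never intersect exactly, the check would have to be "do they pass within some tolerance of each other". Here is a minimal sketch of that test, assuming each ray is given as a camera origin and a direction in world coordinates. The names `ray_distance`, `same_object` and the threshold `tol` are made up for illustration, and the code treats rays as infinite lines (it does not reject points behind a camera).

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_distance(o1, d1, o2, d2, eps=1e-12):
    """Shortest distance between the lines o1 + t*d1 and o2 + s*d2."""
    n = cross(d1, d2)              # direction perpendicular to both lines
    n_len = math.sqrt(dot(n, n))
    w = sub(o2, o1)
    if n_len < eps:
        # Parallel lines: distance from o2 to the first line.
        c = cross(w, d1)
        return math.sqrt(dot(c, c)) / math.sqrt(dot(d1, d1))
    return abs(dot(w, n)) / n_len

def same_object(o1, d1, o2, d2, tol):
    """Hypothetical test: rays 'cross' if they pass within tol of each other."""
    return ray_distance(o1, d1, o2, d2) <= tol
```

Choosing `tol` is the hard part: too small and calibration noise splits one object into two, too large and neighbouring objects get merged.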

What would be a good approach to tackle this issue?

CodePudding user response:

Do you only know that something is an "object", with no categories or identities, and no image information other than a bounding box or mask? Then it's impossible.

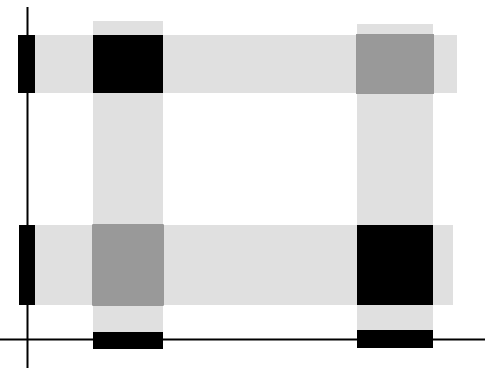

Consider a stark simplification, because I don't feel like drawing viewing frustums right now.

The black boxes are the real objects. The left and bottom axes show their projections. The dark gray boxes would also be valid hypotheses, given these projections.

You can't tell where the boxes really are.
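The same ambiguity can be reproduced in a few lines. This is a toy sketch of the simplified picture only: a 2D grid with orthographic projections onto the two axes, not real camera frustums. The function names and the two example scenes are invented for illustration.

```python
def project(objects):
    """Orthographic 'silhouettes': occupied columns (bottom axis) and rows (left axis)."""
    cols = {x for x, y in objects}
    rows = {y for x, y in objects}
    return cols, rows

def carve(cols, rows):
    """Keep every grid cell that is consistent with both projections."""
    return {(x, y) for x in cols for y in rows}

def count_clusters(cells):
    """Count 4-connected clusters of cells with a simple flood fill."""
    remaining = set(cells)
    clusters = 0
    while remaining:
        stack = [remaining.pop()]
        clusters += 1
        while stack:
            x, y = stack.pop()
            for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                if nb in remaining:
                    remaining.remove(nb)
                    stack.append(nb)
    return clusters

# Two different scenes, each with two objects, on opposite diagonals.
scene_a = {(1, 1), (3, 3)}
scene_b = {(1, 3), (3, 1)}

# Both scenes produce identical projections ...
same_views = project(scene_a) == project(scene_b)
# ... and carving from those projections yields all four candidate cells.
carved = carve(*project(scene_a))
n_clusters = count_clusters(carved)
```

Here `same_views` is true and carving reports four clusters although each scene contains only two objects, so counting carved clusters overcounts, and the projections alone cannot say which two of the four are real.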

If you had something to disambiguate different object detections, then yes, it would be possible.

One very fine-detail variant of that would be block matching to obtain disparity maps (stereo vision). That's a special case of "Structure from Motion".
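As a rough sketch of what block matching does, assuming a rectified stereo pair so that corresponding points lie on the same image row: for a pixel in the left image, slide a small window along the same row of the right image and keep the shift (disparity) with the lowest sum of absolute differences. The function names, the window size `half`, and `max_disp` are placeholders for illustration; real implementations add many refinements.

```python
def match_disparity(left_row, right_row, x, half=1, max_disp=8):
    """Disparity d that minimizes the SAD between the left patch centered at x
    and the right patch centered at x - d (single scanline, rectified pair).
    Assumes half <= x < len(left_row) - half."""
    best_d, best_cost = 0, float("inf")
    for d in range(max_disp + 1):
        if x - d - half < 0:
            break  # the right patch would leave the image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-half, half + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d

def depth_from_disparity(d, focal_px, baseline):
    """Depth for a rectified pair: Z = f * B / d (f in pixels, B in world units)."""
    if d <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline / d

# Toy scanlines: the same intensity bump, shifted by 3 pixels between views.
right_row = [0, 0, 5, 9, 5, 0, 0, 0, 0, 0]
left_row = [0, 0, 0, 0, 0, 5, 9, 5, 0, 0]
d = match_disparity(left_row, right_row, x=6)
```

This only works where the patch has distinctive texture; on a flat region every shift has the same cost and the disparity is meaningless.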

If your "objects" have texture, and you are willing to calculate point clouds, then you can do it.