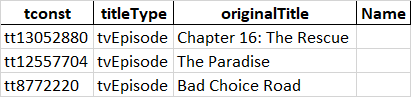

i'm writing a small script that reads from excel sheet the id of an episode and fills in it's corresponding series name, here's a following example of my excel sheet that would be used as input

My script reads the "tconst" value, uses it to find the corresponding episode on IMDb, takes the page title, and extracts the name of the series from it:

```python
import pandas as pd
from urllib.request import urlopen
from bs4 import BeautifulSoup
import re

dataset_loc = 'C:\\Users\\Ghandy\\Documents\\Datasets\\Episodes with over 1k ratings 2020 Small.xlsx'

dataset = pd.read_excel(dataset_loc)

for tconst in dataset['tconst']:
    url = 'https://www.imdb.com/title/{}/'.format(tconst)
    soup = BeautifulSoup(urlopen(url), features="lxml")
    dataset = dataset.append({"Name": re.findall(r'"([^"]*)"', soup.title.get_text())[0]}, ignore_index=True)

dataset.to_excel(dataset_loc, index=False)
```
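The series name comes from the page's `<title>` text: the loop keeps the first double-quoted substring. A minimal offline sketch of just that step, assuming an episode title shaped like the hypothetical string below (the exact IMDb title format is an assumption and may change):

```python
import re


def series_name_from_title(title: str) -> str:
    """Return the first double-quoted substring of an IMDb page title."""
    # Same pattern as the loop: capture everything between a pair of quotes
    matches = re.findall(r'"([^"]*)"', title)
    if not matches:
        raise ValueError(f"no quoted series name in title: {title!r}")
    return matches[0]


# Hypothetical title text for an episode page (format assumed, not fetched)
sample_title = '"The Mandalorian" Chapter 1: The Mandalorian (TV Episode 2019) - IMDb'
print(series_name_from_title(sample_title))  # The Mandalorian
```

Raising a clear error when no quotes are found is easier to debug than the bare `IndexError` that `[0]` would give on an unexpected title.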

I have a few problems with this code. First, Python keeps telling me not to use append and to use concat instead, but all the answers on Google and Stack Overflow give examples with append, and I don't know exactly how to use concat.

Second, my data is appended into a completely new, empty row instead of next to the original data, so in this example I get "The Mandalorian" in row 4 instead of row 2.

Finally, I want to know whether it's better to add the data one value at a time or to collect it all in a temporary list and add it in one go, and how I would do that with concat.

Answer:

I can't really tell what your problem with append and concat is: everyone says to use append, and you use append as well. Do you want to use concat instead? Here is a post on the difference between concat and append.
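For reference, `DataFrame.append` was deprecated in pandas 1.4 and removed in 2.0; `pd.concat` with a one-row DataFrame is the replacement. A minimal sketch with hypothetical `tconst` values shows that concat, like append, adds a new row at the bottom rather than filling in an existing one:

```python
import pandas as pd

# Hypothetical stand-in for the Excel sheet: two episodes, no names yet
dataset = pd.DataFrame({"tconst": ["tt_example_1", "tt_example_2"]})

# Equivalent of dataset.append({"Name": ...}, ignore_index=True) using pd.concat
new_row = pd.DataFrame([{"Name": "The Mandalorian"}])
dataset = pd.concat([dataset, new_row], ignore_index=True)

# The name lands in a new third row whose tconst is NaN,
# exactly the behaviour the question describes for append
print(dataset)
```

So switching from append to concat silences the warning but does not fix the "new row" problem on its own.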

append adds new rows. To write a value into an existing row, you might want to use .at instead.
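A minimal sketch of `.at` on a small hypothetical frame; it sets a single cell by row label and column name, so the row count stays the same. Pre-creating `Name` as an empty object column is an assumption made here so that strings can be stored without any dtype conversion:

```python
import pandas as pd

# Hypothetical stand-in for the sheet
dataset = pd.DataFrame({"tconst": ["tt_example_1", "tt_example_2"]})
dataset["Name"] = None  # empty object-dtype column to hold the series names

# .at[row_label, column] sets one cell in place; no row is added
dataset.at[0, "Name"] = "The Mandalorian"

print(dataset)
```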

I'd say this depends on how much data you already have and how much you are going to add. To avoid overhead and extra copying, I would prefer to write directly into the DataFrame, but if a lot happens between the URL call and adding the value to the DataFrame, collecting the values first could be better.
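The "collect first" variant can be sketched like this: gather all names in a plain list, then assign the whole column once. `fetch_series_name` and its lookup table are hypothetical stand-ins for the real urlopen + BeautifulSoup + regex step, used so the example runs offline:

```python
import pandas as pd


def fetch_series_name(tconst: str) -> str:
    """Hypothetical stand-in for the IMDb lookup in the question."""
    fake_lookup = {"tt_example_1": "The Mandalorian", "tt_example_2": "Loki"}
    return fake_lookup[tconst]


dataset = pd.DataFrame({"tconst": ["tt_example_1", "tt_example_2"]})

# Collect all results in a plain list first (the slow network work happens here)
names = [fetch_series_name(tconst) for tconst in dataset["tconst"]]

# Then write them in one step; the list lines up with the rows by position
dataset["Name"] = names

print(dataset)
```

No concat is needed here, because the goal is a new column, not new rows. If you really do need to add rows, the same idea applies: build the rows in a list and call `pd.concat` once at the end, since every `concat` call returns a new copy of the whole frame.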

Answer:

Thanks to @Stimmot's suggestion to use .at, the code now looks like this:

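```python
# .items() yields (row label, tconst) pairs, so each name goes into the row it belongs to
for index, tconst in dataset['tconst'].items():
    url = 'https://www.imdb.com/title/{}/'.format(tconst)
    soup = BeautifulSoup(urlopen(url), features="lxml")
    # Fill the "Name" cell of the existing row instead of appending a new one
    dataset.at[index, 'Name'] = re.findall(r'"([^"]*)"', soup.title.get_text())[0]

# index=False stops pandas from writing the row index as an extra first column
dataset.to_excel(dataset_loc, index=False)
```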