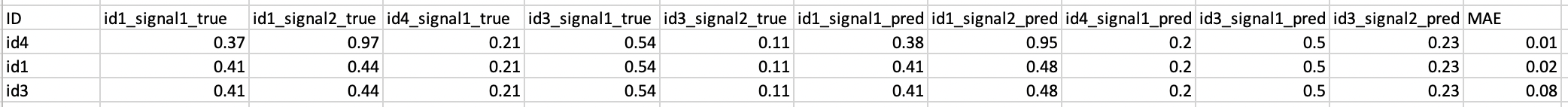

Below is a sample of pandas dataframe that I'm working with.

I want to calculate mean absolute error for each row but only considering relevant columns for values of ID column.

There may be 2 4,6 or 8 columns relevant to values of ID column. For example, relevant columns for 'id4' is 'id4_signal1_true' and 'id4_signal1_pred'. for 'id1' its 'id1_signal1_true', 'id1_signal2_true', 'id1_signal1_pred' and 'id1_signal2_pred'.

import pandas as pd

list = [['id4',0.37,0.97,0.21,0.54,0.11,0.38,0.95,0.2,0.5,0.23],

['id1',0.41,0.44,0.21,0.54,0.11,0.41,0.48,0.2,0.5,0.23],

['id3',0.41,0.44,0.21,0.54,0.11,0.41,0.48,0.2,0.5,0.23]]

df = pd.DataFrame(list, columns =['ID','id1_signal1_true','id1_signal2_true','id4_signal1_true','id3_signal1_true',

'id3_signal2_true','id1_signal1_pred','id1_signal2_pred','id4_signal1_pred',

'id3_signal1_pred','id3_signal2_pred'])

I want to calculate mae for each row only considering the specific relevant columns.

for example, for the first row, it should be like

mean(abs(id4_signal1_true-id4_signal1_pred))

for the second row, it should be

mean(abs('id1_signal1_true'-'id1_signal1_pred'),abs('id1_signal2_true'-'id1_signal2_pred'))

below is a screenshot of how the output looks like. MAE is the column which i want to get

I used the below code to solve this. this is working fine. But the only issue is i have around 2 million rows and this takes hours. i want to find a efficient way to do this. Highly appreciated your help on this

Attack = df

ID_MAE = []

for id in range(len(Attack['ID'])):

signals = np.array(Attack[Attack.columns[Attack.columns.str.contains(Attack.ID[id])]]) # select columns relevant to current ID

signal = signals[id] # select only the specific row (id)

no_of_signals = int(len(signal)/2) # identify number of signals

reshaped_arr = np.reshape(signal, (2,no_of_signals))

signal_true = reshaped_arr[0] # array for true values

signal_pred = reshaped_arr[1] # array for predicted values

# mae calculation

MAE = np.mean(np.abs(signal_true - signal_pred), axis=0)

ID_MAE.append(MAE)

df['MAE'] = ID_MAE

CodePudding user response:

Try:

- Split the columns into a three-level header

groupbyID and Signal and get the MAE- Select the correct MAE for each row

- Collapse the multi-level header back to a single level.

df = df.set_index("ID").rename_axis(None)

df.columns = df.columns.str.split("_",expand=True)

df = df.rename_axis(["ID","Signal","Type"],axis=1).sort_values(["ID","Signal"],axis=1)

MAE = df.groupby(["ID","Signal"], axis=1).diff().abs().groupby("ID", axis=1).mean()

df.columns = df.columns.map("_".join)

df["MAE"] = df.index.to_series().apply(lambda x: MAE.at[x,x])

>>> df

id1_signal1_true id1_signal1_pred ... id4_signal1_pred MAE

id4 0.37 0.38 ... 0.2 0.01

id1 0.41 0.41 ... 0.2 0.02

id3 0.41 0.41 ... 0.2 0.08

[3 rows x 11 columns]

CodePudding user response:

import numpy as np

df2 = df.set_index('ID').apply(lambda x: (np.array(list(map(lambda t: t[1], sorted(x[(ser:=pd.Series(x.index.to_list()))[ser.str.startswith(x.name)]].to_dict().items())))).reshape(-1,2)), axis=1)

df2.apply(lambda arr: np.array([abs(a[0] - a[1]) for a in arr]).mean())

output:

ID

id4 0.01

id1 0.02

id3 0.08

dtype: float64

Try it, if it is good, I will edit and explain ... :)

UPDATE:

or you can:

df2 = df.apply(lambda x: (np.array(list(map(lambda t: t[1], sorted(x[(ser:=pd.Series(x.index.to_list()))[ser.str.startswith(x.ID)]].to_dict().items())))).reshape(-1,2)), axis=1)

df["MAE"] = df2.apply(lambda arr: np.array([abs(a[0] - a[1]) for a in arr]).mean())